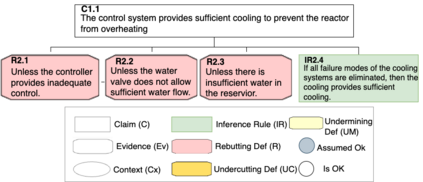

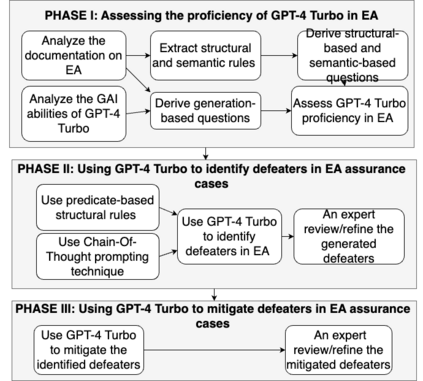

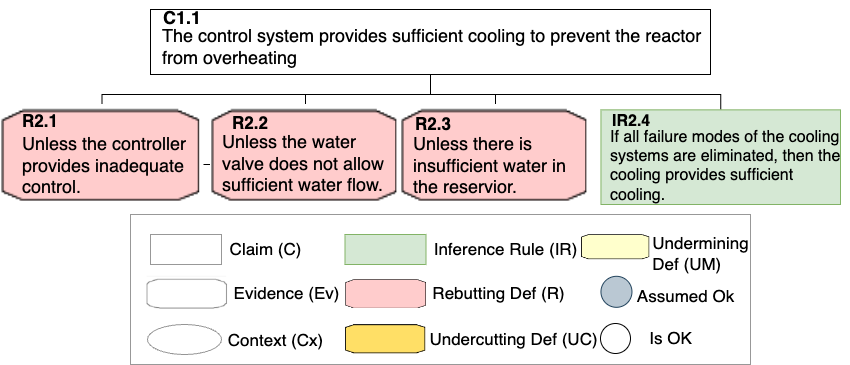

Assurance cases (ACs) are structured arguments that support verifying that a system's non-functional requirements, such as safety and security, are correctly implemented, thereby helping to prevent system failures that could lead to catastrophic outcomes, including loss of life. ACs facilitate the certification of systems in accordance with industrial standards such as DO-178C and ISO 26262. Identifying defeaters, that is, arguments that refute these ACs, is essential for improving the robustness of ACs and the confidence placed in them. To automate this task, we introduce a novel method that leverages GPT-4 Turbo, an advanced Large Language Model (LLM) developed by OpenAI, to identify defeaters within ACs formalized using the Eliminative Argumentation (EA) notation. Our initial evaluation gauges the model's proficiency in understanding and generating arguments within this framework. The findings indicate that GPT-4 Turbo excels at working with EA notation and is capable of generating various types of defeaters.
