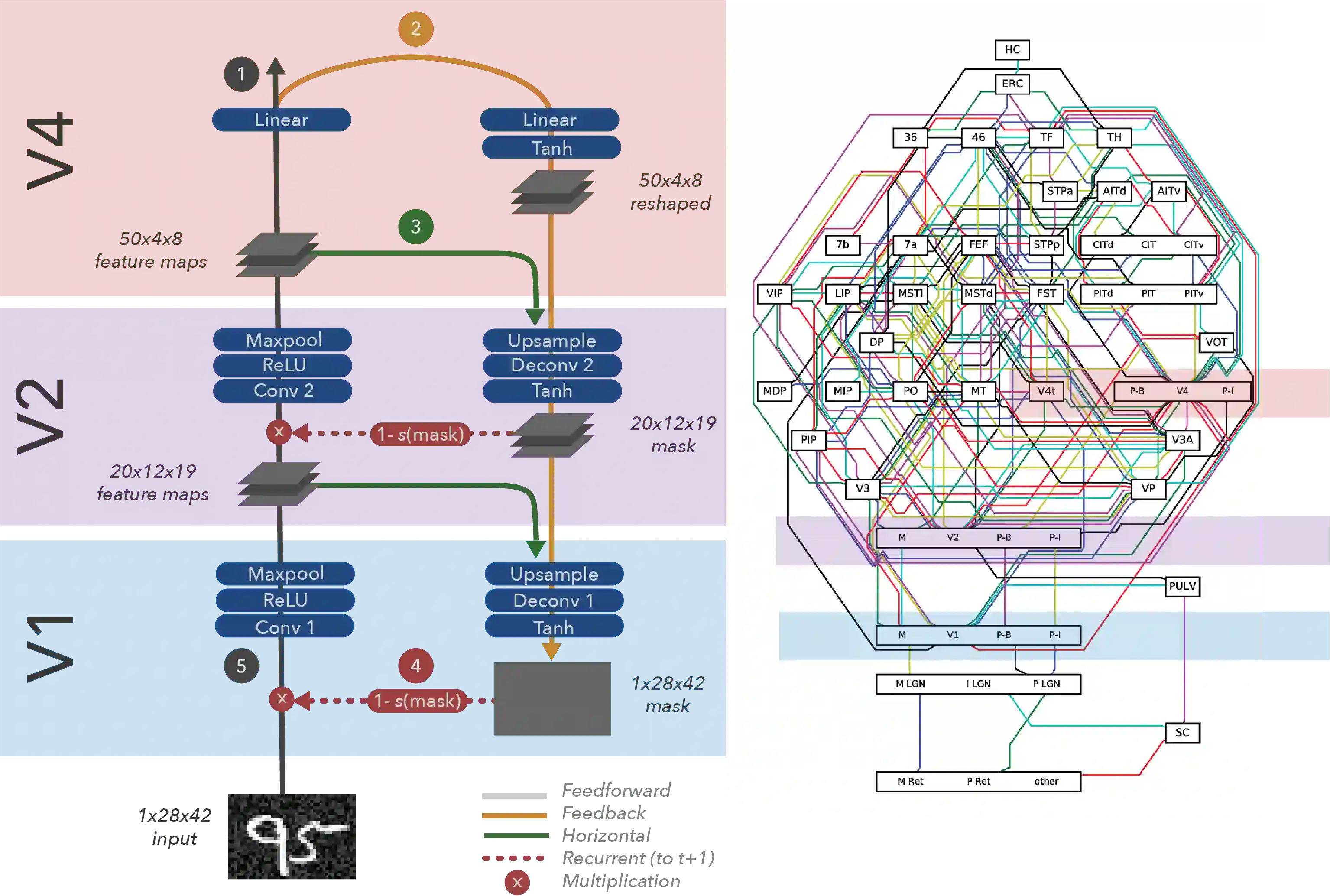

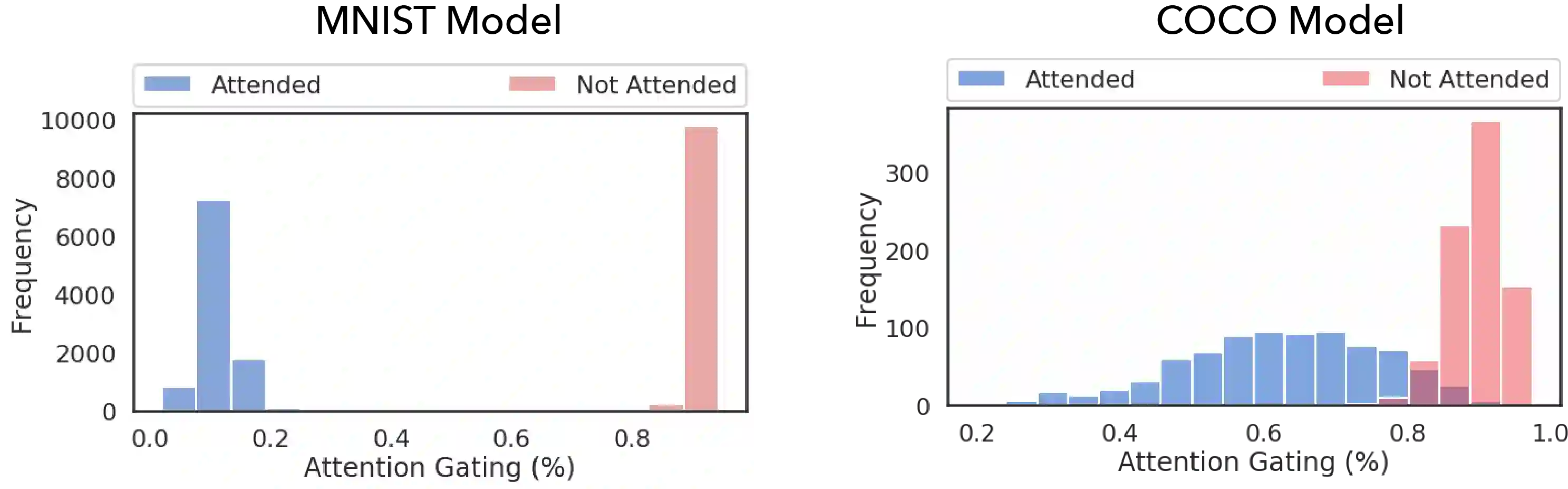

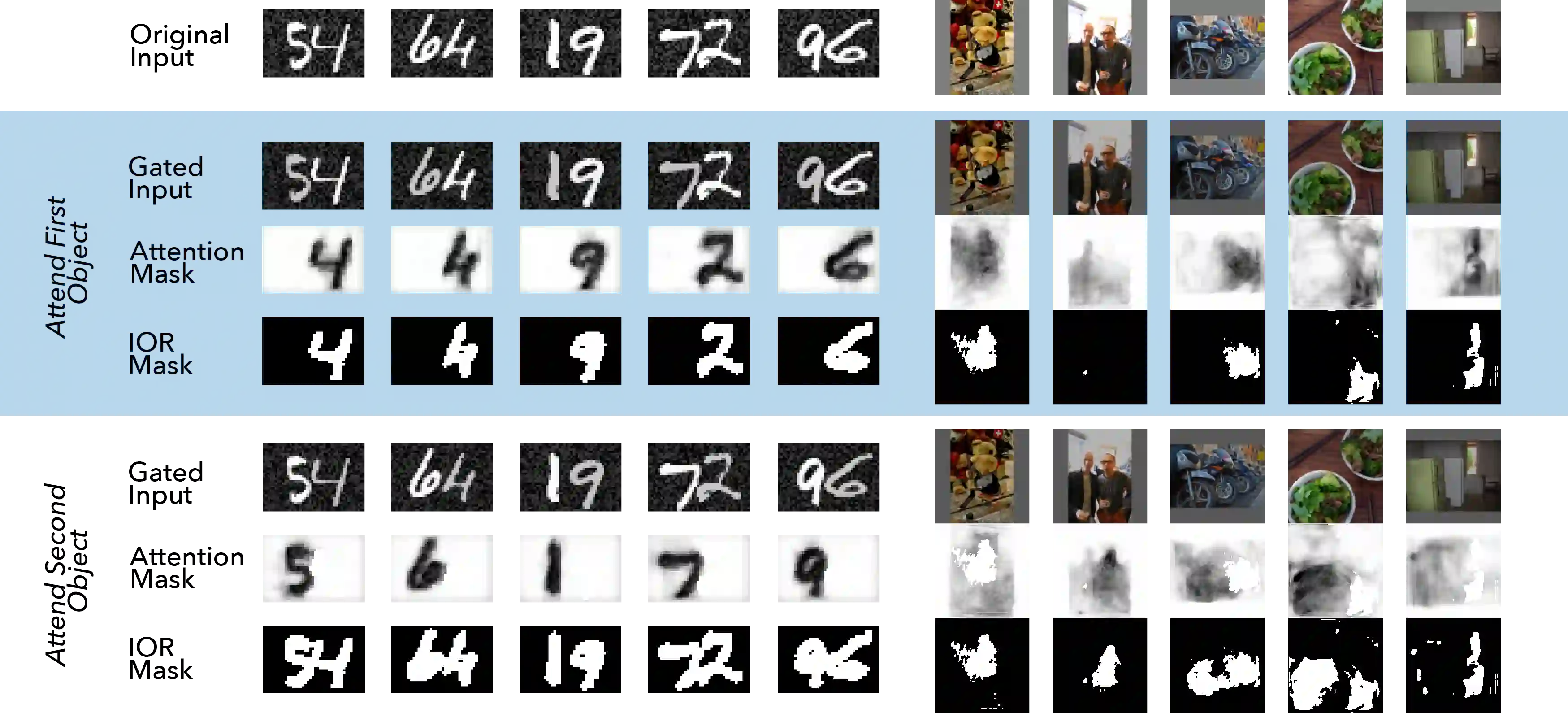

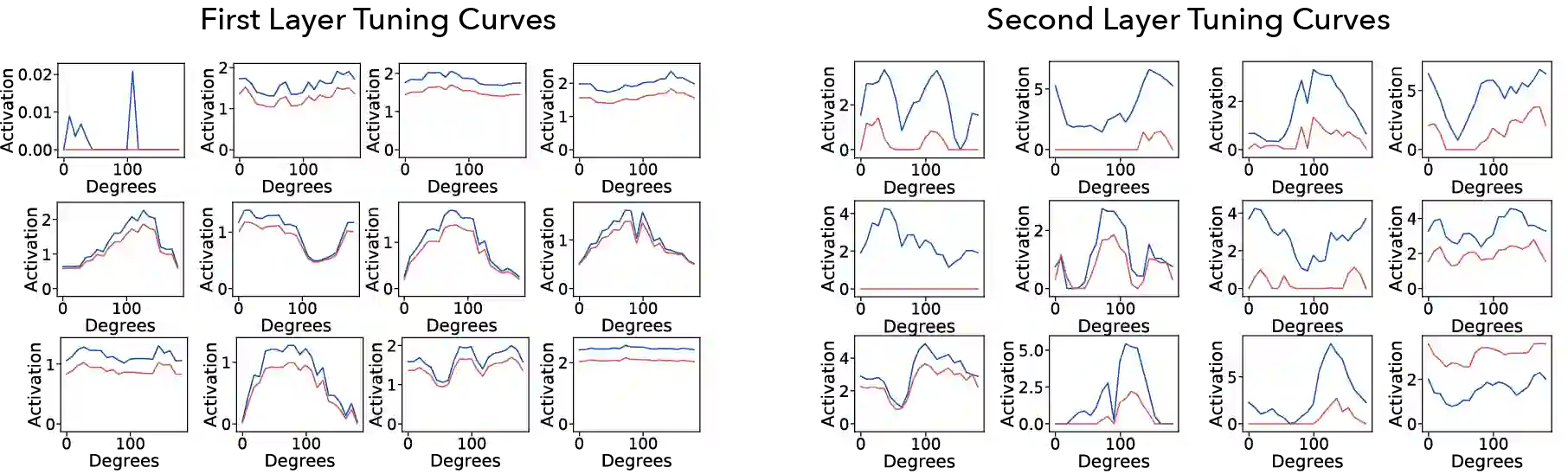

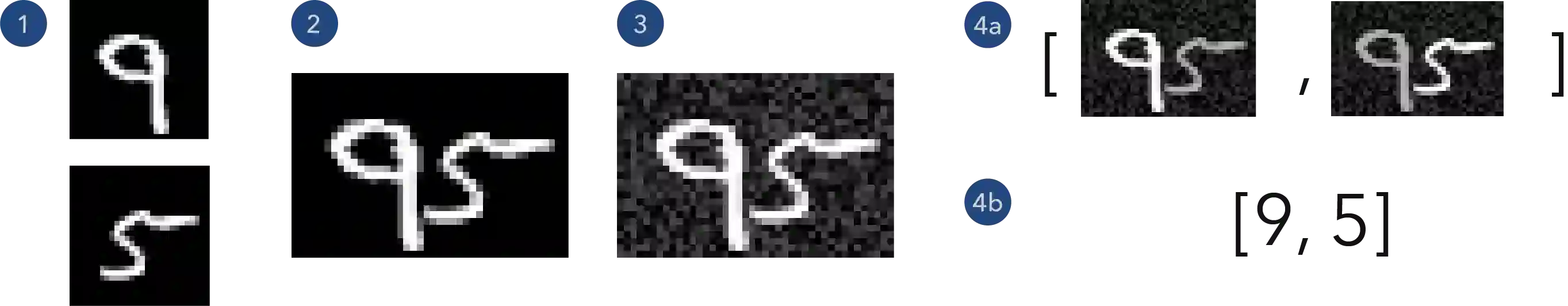

Object-based attention is a key component of the visual system, relevant for perception, learning, and memory. Neurons tuned to features of attended objects tend to be more active than those associated with non-attended objects. There is a rich set of models of this phenomenon in computational neuroscience. However, there is currently a divide between models that successfully match physiological data but can handle only extremely simple problems, and the models of attention used in computer vision. For example, attention in the brain is known to depend on top-down processing, whereas self-attention in deep learning does not. Here, we propose an artificial neural network model of object-based attention that captures the way in which attention is both top-down and recurrent. Our attention model works well both on simple test stimuli, such as those using images of handwritten digits, and on more complex stimuli, such as natural images drawn from the COCO dataset. We find that our model replicates a range of findings from neuroscience, including attention-invariant tuning, inhibition of return, and attention-mediated scaling of activity. Understanding object-based attention is both computationally interesting and a key problem for computational neuroscience.
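Two of the phenomena named above, attention-mediated scaling of activity and inhibition of return, can be illustrated with a toy sketch. This is not the proposed network: the function names (`apply_attention`, `scan_objects`), the multiplicative `gain`, the `inhibition` factor, and the example values are all assumptions chosen for illustration.

```python
def apply_attention(responses, attended, gain=1.5):
    """Scale the responses tied to the attended object by a multiplicative gain.

    `responses` maps an object label to a list of unit activations
    (hypothetical values). A purely multiplicative gain leaves the relative
    shape of the tuning unchanged, which is one simple reading of
    attention-invariant tuning.
    """
    return {
        obj: [r * gain if obj == attended else r for r in values]
        for obj, values in responses.items()
    }


def scan_objects(salience, steps, inhibition=0.5):
    """Pick the most salient object at each step, then suppress it.

    Suppressing the just-attended object is a crude stand-in for
    inhibition of return: attention tends to move on to other objects.
    """
    salience = dict(salience)  # copy so the caller's dict is untouched
    order = []
    for _ in range(steps):
        target = max(salience, key=salience.get)
        order.append(target)
        salience[target] *= inhibition  # reduce the chance of an immediate revisit
    return order


# Hypothetical activations for two digit objects in one image.
responses = {"digit_3": [0.5, 1.0], "digit_7": [0.25, 0.75]}
scaled = apply_attention(responses, attended="digit_3", gain=2.0)
order = scan_objects({"digit_3": 1.0, "digit_7": 0.75}, steps=3)
```

In this sketch the attended object's activations are doubled while their ratio is preserved, and the scan alternates between the two objects instead of locking onto the more salient one.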
