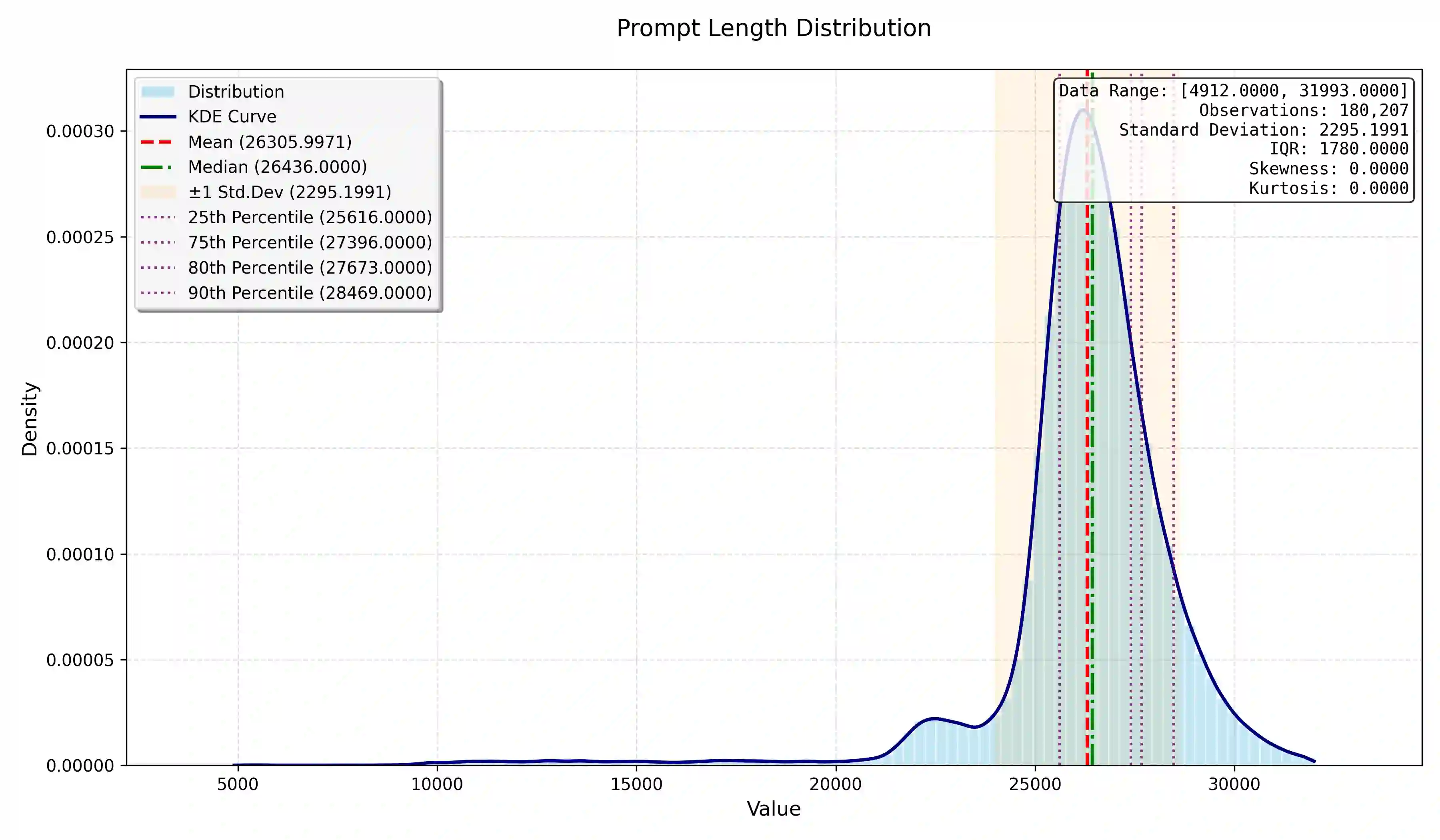

Recently, large language models (LLMs) have demonstrated outstanding reasoning capabilities on mathematical and coding tasks. However, their application to financial tasks-especially the most fundamental task of stock movement prediction-remains underexplored. We study a three-class classification problem (up, hold, down) and, by analyzing existing reasoning responses, observe that: (1) LLMs follow analysts' opinions rather than exhibit a systematic, independent analytical logic (CoTs). (2) LLMs list summaries from different sources without weighing adversarial evidence, yet such counterevidence is crucial for reliable prediction. It shows that the model does not make good use of its reasoning ability to complete the task. To address this, we propose Reflective Evidence Tuning (RETuning), a cold-start method prior to reinforcement learning, to enhance prediction ability. While generating CoT, RETuning encourages dynamically constructing an analytical framework from diverse information sources, organizing and scoring evidence for price up or down based on that framework-rather than on contextual viewpoints-and finally reflecting to derive the prediction. This approach maximally aligns the model with its learned analytical framework, ensuring independent logical reasoning and reducing undue influence from context. We also build a large-scale dataset spanning all of 2024 for 5,123 A-share stocks, with long contexts (32K tokens) and over 200K samples. In addition to price and news, it incorporates analysts' opinions, quantitative reports, fundamental data, macroeconomic indicators, and similar stocks. Experiments show that RETuning successfully unlocks the model's reasoning ability in the financial domain. Inference-time scaling still works even after 6 months or on out-of-distribution stocks, since the models gain valuable insights about stock movement prediction.

翻译:近年来,大语言模型(LLMs)在数学与编程任务上展现出卓越的推理能力。然而,其在金融任务——尤其是最基础的股票走势预测任务——中的应用仍待深入探索。本研究聚焦于三分类问题(上涨、持平、下跌),通过分析现有推理响应发现:(1)LLMs倾向于遵循分析师观点,而非展现出系统化、独立的分析逻辑(思维链,CoTs)。(2)LLMs仅罗列不同来源的总结性信息,而未对对立证据进行权衡,然而此类反证对于可靠预测至关重要。这表明模型未能有效利用其推理能力完成任务。为解决此问题,我们提出反思性证据调优(Reflective Evidence Tuning, RETuning),一种在强化学习前实施的冷启动方法,以增强预测能力。在生成思维链时,RETuning鼓励模型动态构建来自多元信息源的分析框架,基于该框架(而非上下文观点)组织并评估支持股价上涨或下跌的证据并予以评分,最终通过反思推导出预测结果。该方法最大程度地将模型与其习得的分析框架对齐,确保独立的逻辑推理,并减少来自上下文的过度影响。我们还构建了一个大规模数据集,涵盖2024年全年5,123只A股股票,包含长上下文(32K词元)及超过20万条样本。除价格与新闻外,该数据集整合了分析师观点、量化报告、基本面数据、宏观经济指标及相似股票信息。实验表明,RETuning成功释放了模型在金融领域的推理能力。即使经过六个月或面对分布外股票,推理时缩放依然有效,因为模型已获得关于股票走势预测的宝贵洞见。