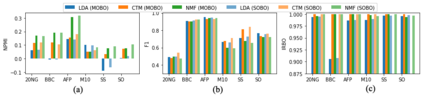

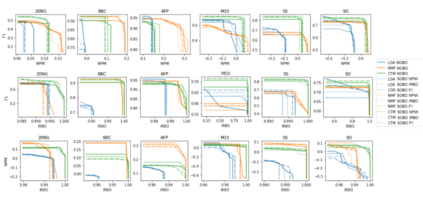

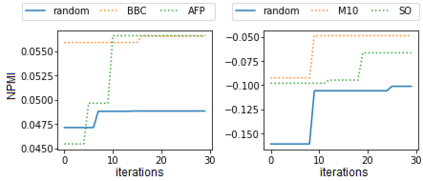

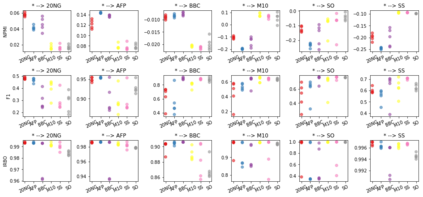

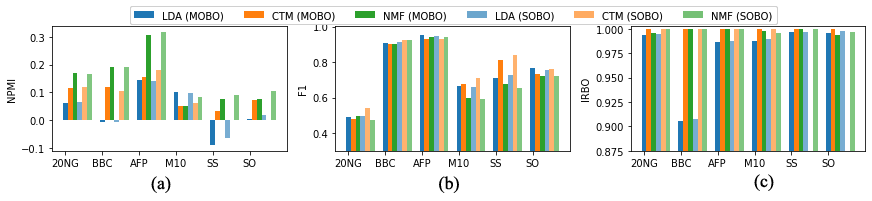

Topic models are statistical methods that extract the underlying topics of a document collection. When performing topic modeling, a user usually wants topics that are coherent and mutually diverse, and that provide good document representations for downstream tasks (e.g., document classification). In this paper, we conduct a multi-objective hyperparameter optimization of three well-known topic models. The results reveal the conflicting nature of the different objectives. They also show that the characteristics of the training corpus are crucial for hyperparameter selection, suggesting that it is possible to transfer optimal hyperparameter configurations between datasets.
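The abstract does not say how the trade-off between objectives is resolved. The sketch below is a hedged illustration of what "conflicting objectives" means in multi-objective optimization. It is not the authors' optimizer. It keeps only the Pareto-optimal configurations, meaning those that no other configuration beats on every objective at once. The three objectives (coherence, diversity, classification accuracy), the configuration names, and all scores are hypothetical values invented for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical objective vector, all to be maximized:
# (topic coherence, topic diversity, downstream classification accuracy).
Scores = Tuple[float, float, float]


def dominates(a: Scores, b: Scores) -> bool:
    """True if `a` is at least as good as `b` on every objective and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(results: Dict[str, Scores]) -> List[str]:
    """Return configurations that no other configuration dominates (insertion order kept)."""
    # A vector never dominates itself, so comparing against all values is safe.
    return [
        name
        for name, s in results.items()
        if not any(dominates(other, s) for other in results.values())
    ]


# Invented scores for four hypothetical hyperparameter configurations.
results: Dict[str, Scores] = {
    "num_topics=10": (0.42, 0.90, 0.71),   # very diverse, weaker classifier features
    "num_topics=50": (0.47, 0.65, 0.78),   # most coherent
    "num_topics=50b": (0.40, 0.60, 0.70),  # worse than num_topics=50 on every objective
    "num_topics=100": (0.45, 0.55, 0.80),  # best accuracy, least diverse
}

if __name__ == "__main__":
    print(pareto_front(results))
```

In this toy example, three configurations survive. Each one wins on a different objective, so none of them is best overall. When objectives conflict like this, a multi-objective search returns a set of trade-offs rather than a single optimum.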

翻译:专题模型是从文件收集中提取基本专题的统计方法。在进行专题建模时,用户通常希望主题相互一致、互不相同,为下游任务(如文件分类)提供良好的文件表述。在本文中,我们对三个众所周知的专题模型进行多目标超参数优化。获得的结果揭示了不同目标的矛盾性质,而培训实体的特征对于选择超参数至关重要,这表明有可能在数据集之间转换最佳超参数配置。