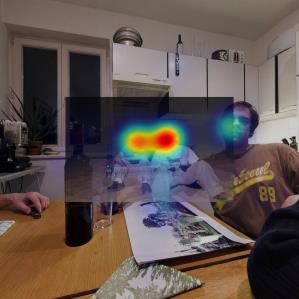

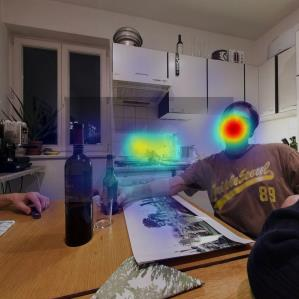

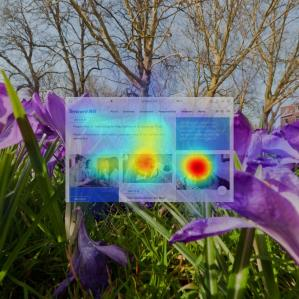

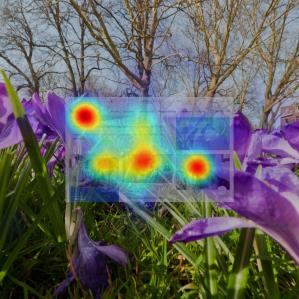

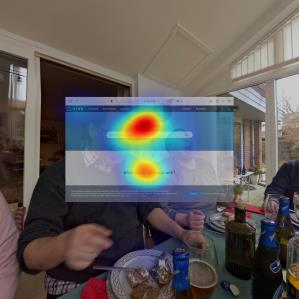

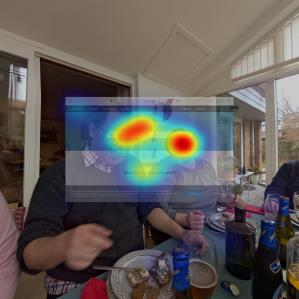

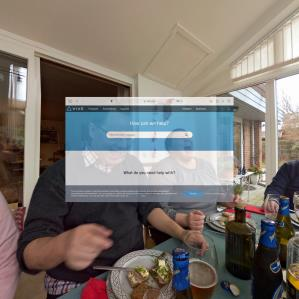

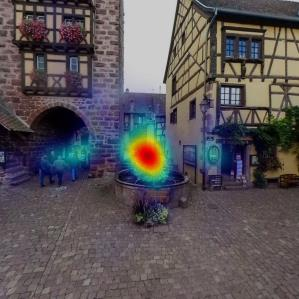

With the rapid development of multimedia technology, Augmented Reality (AR) has become a promising next-generation mobile platform. The primary theory underlying AR is human visual confusion, which allows users to perceive real-world scenes and augmented contents (virtual-world scenes) simultaneously by superimposing them. To achieve a good Quality of Experience (QoE), it is important to understand the interaction between the two scenes and to display AR contents harmoniously. However, studies on how this superimposition influences human visual attention are lacking. Therefore, in this paper, we analyze the interaction effect between background (BG) scenes and AR contents, and study the saliency prediction problem in AR. Specifically, we first construct a Saliency in AR Dataset (SARD), which contains 450 BG images, 450 AR images, and 1350 superimposed images generated by superimposing each BG image with its paired AR image at three mixing levels. A large-scale eye-tracking experiment with 60 subjects is conducted to collect eye movement data. To better predict saliency in AR, we propose a vector quantized saliency prediction method and generalize it to AR saliency prediction. For comparison, three benchmark methods are proposed and evaluated together with our method on SARD. Experimental results demonstrate the superiority of our proposed method over the benchmark methods on both the common saliency prediction problem and the AR saliency prediction problem. Our data collection methodology, dataset, benchmark methods, and proposed saliency models will be made publicly available to facilitate future research.
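The dataset construction step can be sketched as follows. This is a minimal illustration under stated assumptions: the abstract does not name the superimposition operator, so plain alpha blending is assumed, and the three weights in `MIX_LEVELS` are hypothetical placeholders rather than SARD's actual mixing levels. The function names are likewise illustrative. The sketch also makes the count explicit: 450 paired BG/AR images at 3 mixing levels give 450 × 3 = 1350 superimposed images.

```python
import numpy as np

# Hypothetical mixing levels: the abstract only says "three mixing levels",
# so these weights are placeholders, not the values used to build SARD.
MIX_LEVELS = (0.25, 0.50, 0.75)


def superimpose(bg: np.ndarray, ar: np.ndarray, alpha: float) -> np.ndarray:
    """Blend an AR image over a BG image (assumed linear alpha blending)."""
    if bg.shape != ar.shape:
        raise ValueError("BG and AR images must have the same shape")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Weighted sum in float, then round and clip back to the 8-bit range.
    mixed = alpha * ar.astype(np.float64) + (1.0 - alpha) * bg.astype(np.float64)
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)


def build_superimposed_set(bg_images, ar_images, levels=MIX_LEVELS):
    """Pair the i-th BG image with the i-th AR image at every mixing level."""
    if len(bg_images) != len(ar_images):
        raise ValueError("BG and AR lists must be paired one-to-one")
    return [
        (i, alpha, superimpose(bg, ar, alpha))
        for i, (bg, ar) in enumerate(zip(bg_images, ar_images))
        for alpha in levels
    ]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # 450 tiny random stand-ins for the paired BG/AR images.
    bgs = [rng.integers(0, 256, (8, 8, 3), dtype=np.uint8) for _ in range(450)]
    ars = [rng.integers(0, 256, (8, 8, 3), dtype=np.uint8) for _ in range(450)]
    mixed = build_superimposed_set(bgs, ars)
    print(len(mixed))  # 450 pairs x 3 levels = 1350
```

Keeping each BG image paired with a single AR image means every superimposed image can be traced back to its two sources and its mixing level, which is what allows the eye-movement data to be compared across the BG, AR, and superimposed conditions.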

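The abstract does not describe the internals of the proposed vector quantized saliency prediction method. As a generic illustration of what a vector-quantization step does (a VQ-VAE-style nearest-codebook lookup), the sketch below maps each feature vector to its closest codeword under squared L2 distance. The function name, array shapes, and distance choice are assumptions for illustration, not the authors' design.

```python
import numpy as np


def vector_quantize(features: np.ndarray, codebook: np.ndarray):
    """Map each D-dim feature vector to its nearest codeword (squared L2).

    features: (N, D) array; codebook: (K, D) array.
    Returns (indices of shape (N,), quantized vectors of shape (N, D)).
    """
    if (
        features.ndim != 2
        or codebook.ndim != 2
        or features.shape[1] != codebook.shape[1]
    ):
        raise ValueError("expected features (N, D) and codebook (K, D)")
    # Expand ||x - e||^2 = ||x||^2 - 2 x.e + ||e||^2 for every (x, e) pair,
    # giving an (N, K) distance matrix without explicit Python loops.
    dists = (
        np.sum(features ** 2, axis=1, keepdims=True)
        - 2.0 * features @ codebook.T
        + np.sum(codebook ** 2, axis=1)
    )
    indices = np.argmin(dists, axis=1)
    # Each feature is replaced by its selected codeword.
    return indices, codebook[indices]


if __name__ == "__main__":
    codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
    feats = np.array([[0.1, -0.2], [0.9, 1.2], [4.0, 6.0]])
    idx, quantized = vector_quantize(feats, codebook)
    print(idx)  # [0 1 2]
```

The expanded-distance form computes all feature-to-codeword distances with one matrix product. In a trainable model, the non-differentiable `argmin` would typically be paired with a straight-through gradient estimator, which this NumPy sketch omits.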