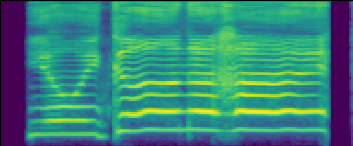

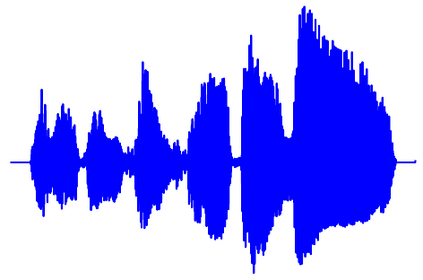

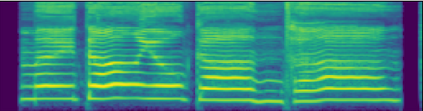

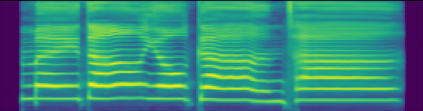

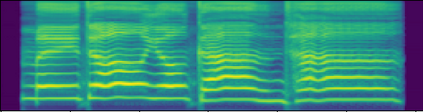

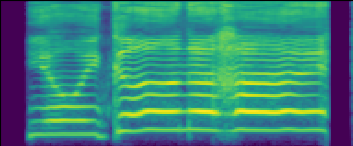

This paper introduces a robust singing voice synthesis (SVS) system that produces high-quality singing voices efficiently by leveraging adversarial training. First, we design simple but generic random area conditional discriminators to help supervise the acoustic model, which effectively prevents the duration-allocated Transformer-based acoustic model from predicting over-smoothed spectrograms. Second, we combine the spectrogram with the frame-level linearly-interpolated F0 sequence as the input to the neural vocoder, which is then optimized with multiple adversarial discriminators in the waveform domain and multi-scale distance functions in the frequency domain. Experimental results and ablation studies show that, compared with our previous auto-regressive work, the new system can produce high-quality singing voices efficiently by fine-tuning on singing datasets ranging from several minutes to a few hours in length. Some synthesized singing samples are available online [https://zzw922cn.github.io/wesinger2].
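As a rough illustration of the vocoder input described in the abstract, the sketch below fills unvoiced frames of an F0 track by linear interpolation and stacks the result onto a mel-spectrogram. The abstract does not give these details, so several points are assumptions: that unvoiced frames are marked with 0, that F0 is in Hz, that "combined" means concatenation along the feature axis, and the hypothetical function names `interpolate_f0` and `build_vocoder_input`.

```python
import numpy as np


def interpolate_f0(f0):
    """Fill unvoiced frames (assumed to be marked as 0) by linear interpolation.

    Leading and trailing unvoiced frames take the nearest voiced value,
    which is how np.interp behaves outside the range of known points.
    """
    f0 = np.asarray(f0, dtype=np.float64)
    voiced = f0 > 0
    if not voiced.any():
        return f0.copy()  # no voiced frame to interpolate from
    frames = np.arange(len(f0))
    return np.interp(frames, frames[voiced], f0[voiced])


def build_vocoder_input(mel, f0):
    """Stack an (n_mels, T) mel-spectrogram with a (T,) F0 track.

    Returns an (n_mels + 1, T) array; concatenation along the feature
    axis is an assumption, not a detail stated in the abstract.
    """
    f0_interp = interpolate_f0(f0)
    if mel.shape[1] != f0_interp.shape[0]:
        raise ValueError("mel and F0 must have the same number of frames")
    return np.concatenate([mel, f0_interp[None, :]], axis=0)


# Toy example: 80 mel bins, 6 frames, two voiced frames.
mel = np.zeros((80, 6))
f0 = np.array([0.0, 220.0, 0.0, 0.0, 250.0, 0.0])
features = build_vocoder_input(mel, f0)
print(features.shape)
print(features[-1])
```

A continuous F0 contour of this kind gives the vocoder an explicit pitch cue at every frame, including frames where the pitch tracker reported no voicing.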
