SPN: image visualization, code, applications, models, and other introductory notes

sum-product networks

http://proceedings.mlr.press/v97/tan19b/tan19b.pdf

Hierarchical Decompositional Mixtures of Variational Autoencoders

https://github.com/cambridge-mlg/SPVAE

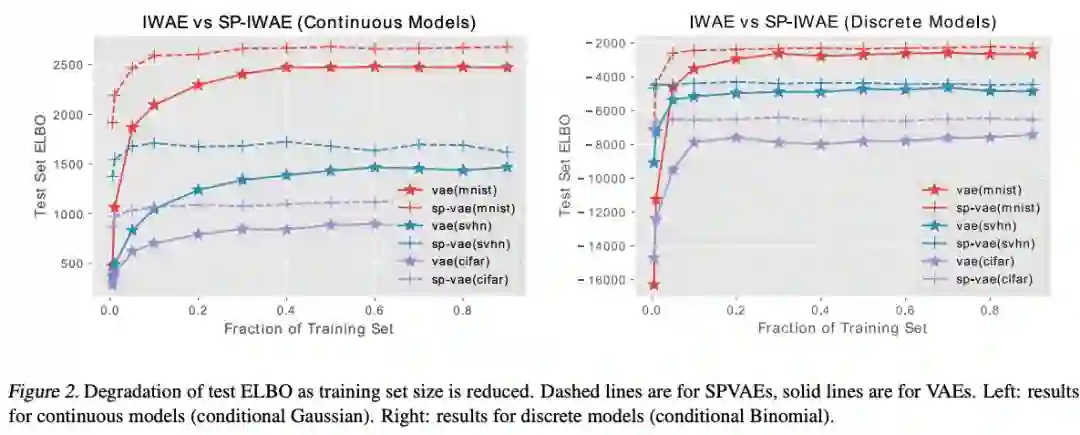

Abstract

Variational autoencoders (VAEs) have received considerable attention, since they allow us to learn expressive neural density estimators effectively and efficiently. However, learning and inference in VAEs is still problematic due to the sensitive interplay between the generative model and the inference network. Since these problems become generally more severe in high dimensions, we propose a novel hierarchical mixture model over low-dimensional VAE experts. Our model decomposes the overall learning problem into many smaller problems, which are coordinated by the hierarchical mixture, represented by a sum-product network. In experiments we show that our models outperform classical VAEs on almost all of our experimental benchmarks. Moreover, we show that our model is highly data efficient and degrades very gracefully in extremely low data regimes.

The key observation used in this paper is that SPNs allow arbitrary representations for the leaves, including intractable ones like VAE distributions. The idea of this hybrid model class, which we denote as sum-product VAE (SPVAE), is to combine the best of both worlds: on the one hand, it extends the model capabilities of SPNs by using flexible VAEs as leaves; on the other hand, it applies the above-mentioned divide-and-conquer approach to VAEs, which can be expected to lead to an easier learning problem. Since SPNs can be interpreted as structured latent variable models (Zhao et al., 2015; Peharz et al., 2017), the generative process of SPVAEs can be depicted as in Fig. 1 (solid lines).
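The "arbitrary leaves" idea above can be illustrated with a minimal sketch. This is not the SPVAE code from the repository linked earlier: the helper names (`gaussian_leaf`, `product_node`, `sum_node`) are hypothetical, and simple Gaussians stand in for the leaves. In an SPVAE each leaf would instead be a low-dimensional VAE, which would presumably supply an estimate or bound of its log-density rather than an exact value.

```python
import math

def gaussian_leaf(var, mean, std):
    """Stand-in leaf: maps a full data vector x to log p(x[var]).
    Any callable with this signature could be plugged in here."""
    def log_density(x):
        z = (x[var] - mean) / std
        return -0.5 * z * z - math.log(std) - 0.5 * math.log(2 * math.pi)
    return log_density

def product_node(children):
    """Children cover disjoint variables, so their log-densities add."""
    def log_density(x):
        return sum(child(x) for child in children)
    return log_density

def sum_node(weights, children):
    """Mixture over children covering the same variables (log-sum-exp for stability)."""
    def log_density(x):
        terms = [math.log(w) + child(x) for w, child in zip(weights, children)]
        m = max(terms)
        return m + math.log(sum(math.exp(t - m) for t in terms))
    return log_density

# A two-component mixture over (x0, x1); each component factorizes over the variables.
spn = sum_node(
    [0.3, 0.7],
    [
        product_node([gaussian_leaf(0, -1.0, 1.0), gaussian_leaf(1, -1.0, 1.0)]),
        product_node([gaussian_leaf(0, 2.0, 0.5), gaussian_leaf(1, 2.0, 0.5)]),
    ],
)

log_p = spn([0.0, 0.5])  # log-density of one data point under the whole model
```

Because the sum and product nodes only ever call their children, swapping a leaf for a different density model does not change the rest of the structure, which is the property the excerpt relies on.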

5. Conclusion

We presented SPVAEs, a novel structured model which combines a tractable model (SPNs) and an intractable model (VAEs) in a natural way. As shown in our experiments, this leads to i) better density estimates, ii) smaller models, and iii) improved data efficiency when compared to classical VAEs. Future work includes more extensive experiments with SPN structures and VAE variants. Furthermore, the decompositional structure of SPVAEs naturally lends itself towards distributed model learning and inference. We also observed in our experiments that SPVAEs allowed larger learning rates than VAEs (up to 0.1) during training. Investigating this effect is also a subject for future research. A current downside is that the decomposed nature of SPVAEs causes run-times approximately a factor 5 slower than for VAEs. However, the execution time of SPVAEs could be reduced by more elaborate designs, facilitating a higher degree of parallelism, for example using vectorization and distributed learning.

https://github.com/stelzner/supair

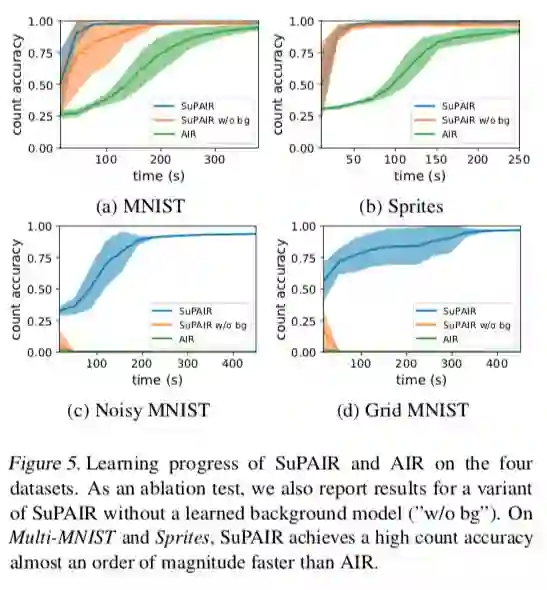

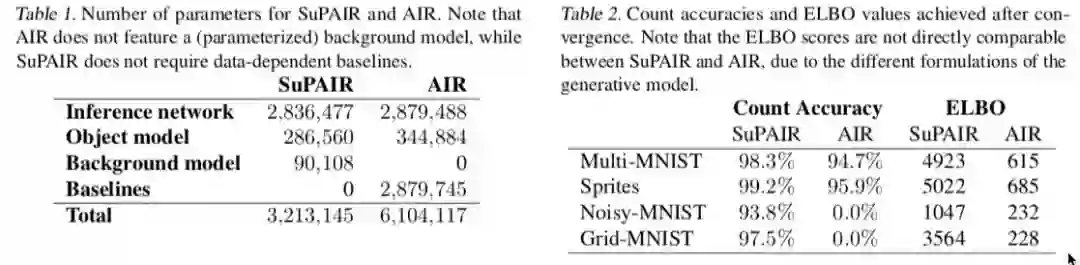

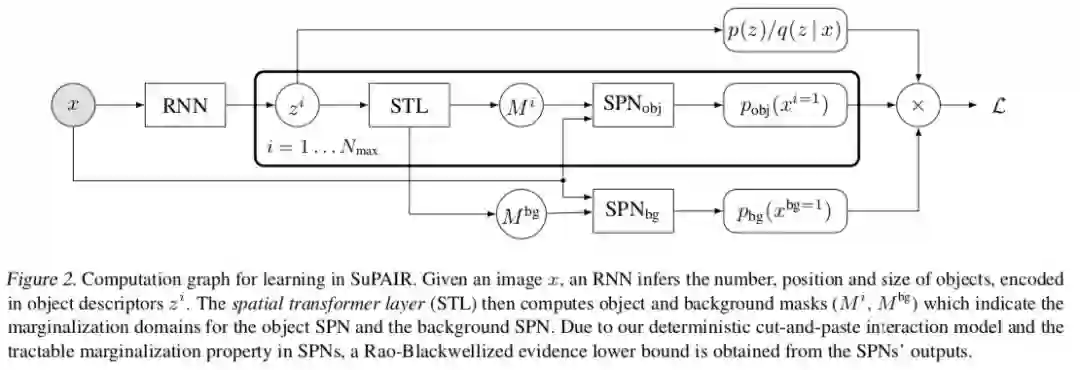

Abstract

The recent Attend-Infer-Repeat (AIR) framework marks a milestone in structured probabilistic modeling, as it tackles the challenging problem of unsupervised scene understanding via Bayesian inference. AIR expresses the composition of visual scenes from individual objects, and uses variational autoencoders to model the appearance of those objects. However, inference in the overall model is highly intractable, which hampers its learning speed and makes it prone to suboptimal solutions. In this paper, we show that the speed and robustness of learning in AIR can be considerably improved by replacing the intractable object representations with tractable probabilistic models. In particular, we opt for sum-product networks (SPNs), expressive deep probabilistic models with a rich set of tractable inference routines. The resulting model, called SuPAIR, learns an order of magnitude faster than AIR, treats object occlusions in a consistent manner, and allows for the inclusion of a background noise model, improving the robustness of Bayesian scene understanding.

http://www.mlmi.eng.cam.ac.uk/foswiki/pub/Main/ClassOf2018/thesis_PingLiangTan.pdf

“An SPN is a probabilistic model designed for efficient inference.”
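To make "efficient inference" concrete, here is a minimal sketch of a marginal query on a toy SPN over two binary variables. The structure, weights, and helper names are made up for illustration and do not come from the thesis. The point is that a variable is marginalized by letting its leaves return 1, so the query costs one bottom-up pass instead of a sum over all joint states.

```python
def bernoulli_leaf(var, p):
    """Leaf over one binary variable; returns 1.0 when that variable is
    marginalized out (marked as None in the query)."""
    def value(x):
        if x[var] is None:
            return 1.0
        return p if x[var] == 1 else 1.0 - p
    return value

def product_node(children):
    """Product over children with disjoint variables."""
    def value(x):
        out = 1.0
        for child in children:
            out *= child(x)
        return out
    return value

def sum_node(weights, children):
    """Weighted sum (mixture) over children with the same variables."""
    def value(x):
        return sum(w * child(x) for w, child in zip(weights, children))
    return value

spn = sum_node(
    [0.4, 0.6],
    [
        product_node([bernoulli_leaf(0, 0.9), bernoulli_leaf(1, 0.2)]),
        product_node([bernoulli_leaf(0, 0.1), bernoulli_leaf(1, 0.7)]),
    ],
)

# P(X0 = 1) in a single pass: X1 is marginalized by passing None.
marginal = spn([1, None])

# Brute-force check: sum the joint over both states of X1.
brute_force = sum(spn([1, x1]) for x1 in (0, 1))
```

Here both routes give 0.4 * 0.9 + 0.6 * 0.1, up to floating-point rounding. With many variables the brute-force sum grows exponentially, while the single pass stays linear in the size of the network.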

http://www.di.uniba.it/~ndm/pubs/vergari18mlj.pdf

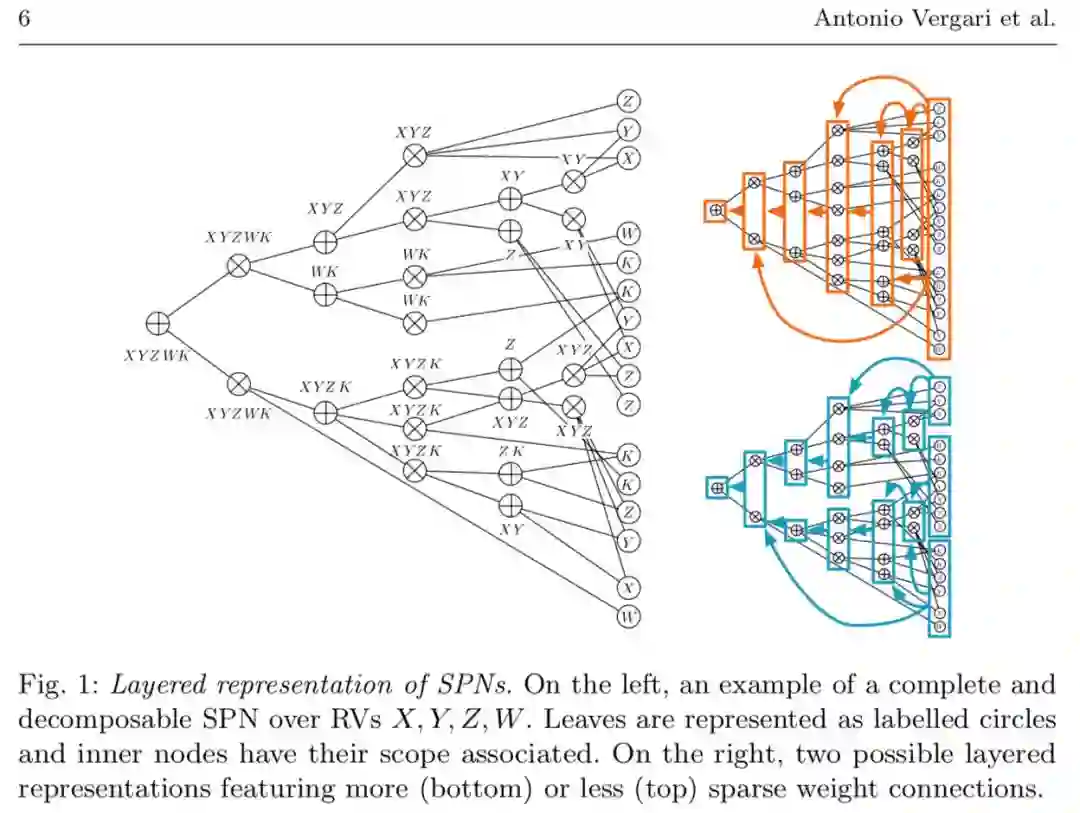

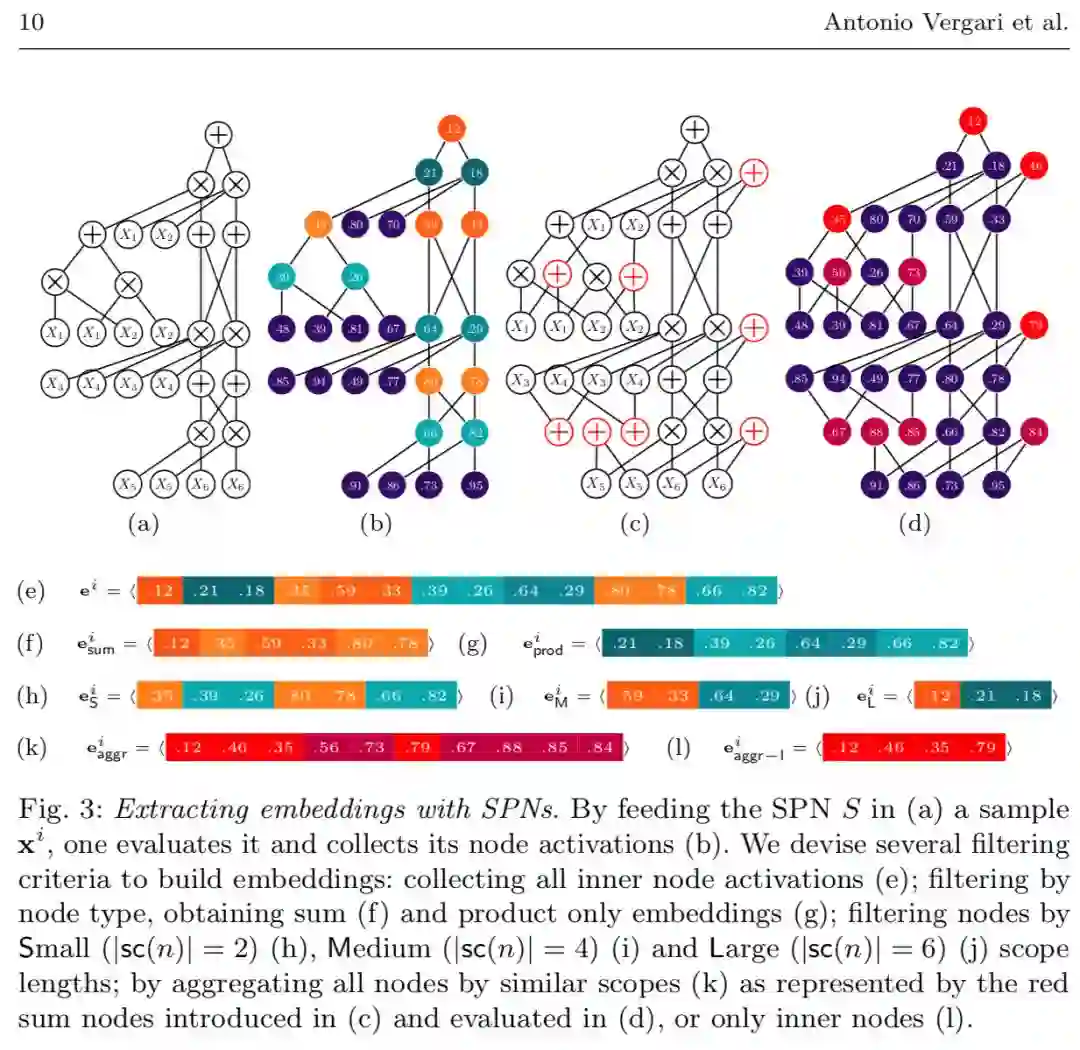

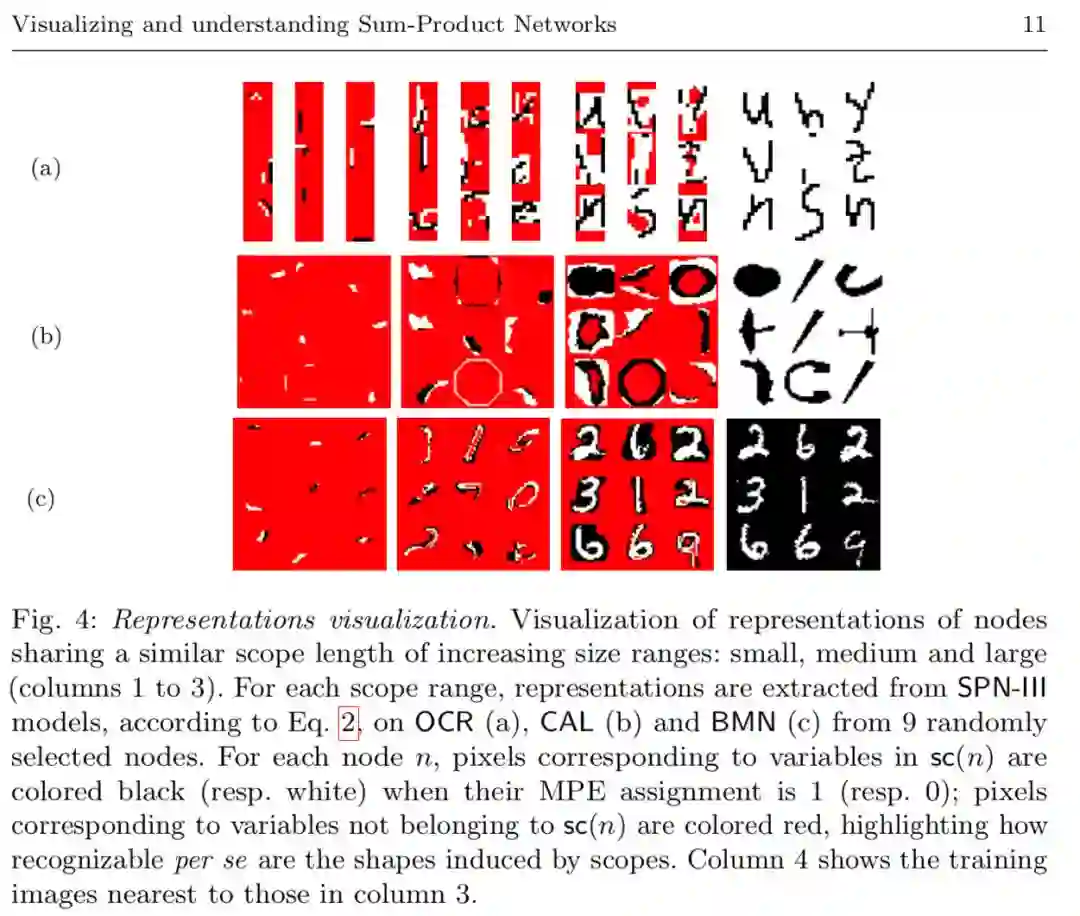

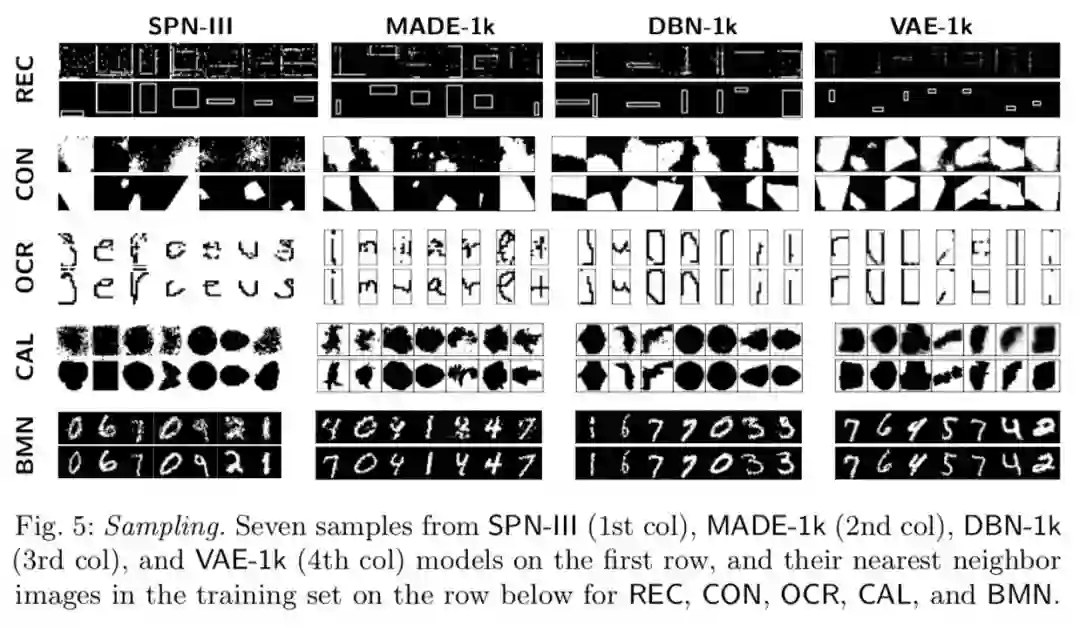

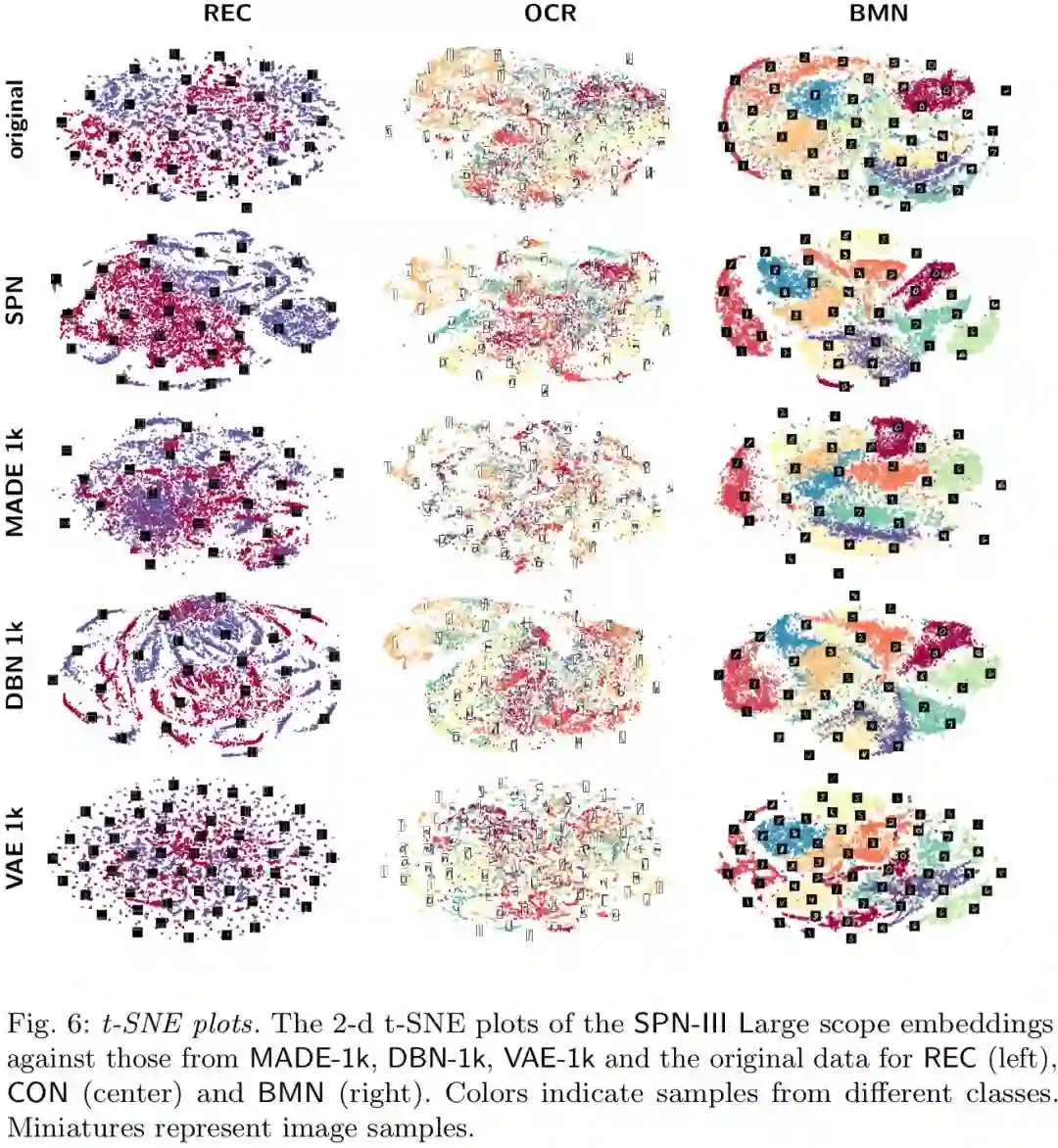

Visualizing and understanding Sum-Product Networks

Variational autoencoders (VAEs) [19] are generative autoencoders, but unlike MADEs they are tailored towards compressing and learning untangled representations of the data through a variational approach to Bayesian inference. While VAEs have recently gained momentum as generative models, their inference capabilities, contrary to SPNs, are limited and restricted to Monte Carlo estimates relying on the generated samples. Compared with all the above-mentioned neural models, one can learn one SPN structure from data and obtain a highly versatile probabilistic model capable of performing a wide variety of inference queries efficiently and at the same time providing very informative feature representations, as we will see in the following sections.
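The contrast drawn above can be written out. As a sketch, assuming a latent-variable model with prior $p(z)$ and decoder $p_\theta(x \mid z)$ (notation introduced here, not taken from the quoted paper), the plainest Monte Carlo estimate of a VAE's marginal likelihood averages the decoder density over generated latent samples:

$$
p_\theta(x) = \int p_\theta(x \mid z)\, p(z)\, dz \;\approx\; \frac{1}{K} \sum_{k=1}^{K} p_\theta(x \mid z_k), \qquad z_k \sim p(z).
$$

This estimate is unbiased, but its variance can be large in high dimensions. In an SPN with tractable leaves, the corresponding marginal is computed exactly in a single bottom-up pass.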

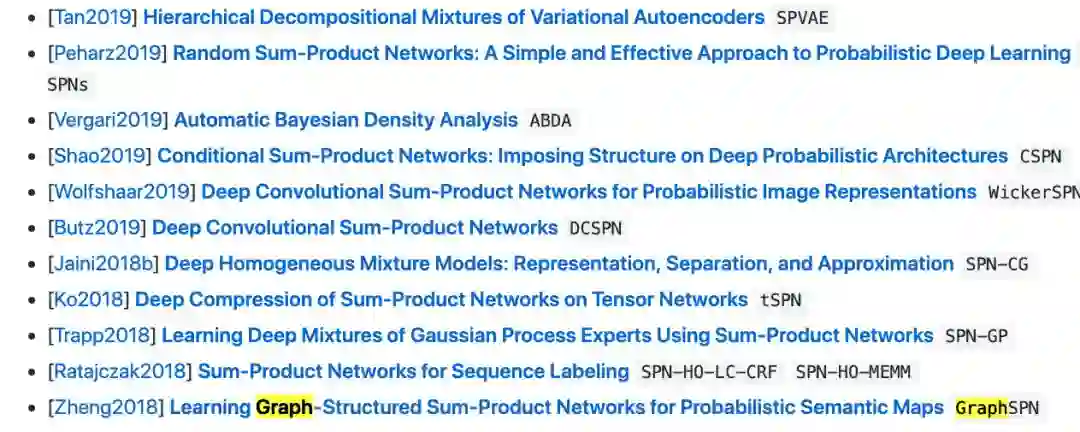

awesome spn

https://github.com/arranger1044/awesome-spn#structure-learning

Built-in graph network?