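The Best Statistical Machine Learning Course for Engineers: University of Notre Dame, Fall 2017, 28 Lectures Covered in Detail (with Slide Downloads, Course Outline, and Videos)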

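Overview: Statistical Computing for Scientists and Engineers, a course first offered at the University of Notre Dame in 2017, covers nearly every major topic in statistical learning: probability and statistics, information theory, the multivariate Gaussian distribution, maximum a posteriori (MAP) estimation, Bayesian statistics, the exponential family of distributions, Bayesian linear regression, Monte Carlo methods, importance sampling, Gibbs sampling, state space models, the EM algorithm, principal component analysis, continuous latent variable models, and kernel methods and Gaussian processes. The course provides videos, slides, homework with reference solutions and code, and an extensive list of further learning resources, which makes it a rare find among statistical learning courses.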

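Statistical learning is the discipline in which computers build probabilistic and statistical models from data and use those models to predict and analyze data. Like machine learning and deep learning, which are so popular today, its goal is to find, or get arbitrarily close to, a "God function": a model or method that makes perfect use of data to solve all kinds of real-world problems.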

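Backed by mathematical theory, statistical learning was searching for that God function, from the standpoint of explaining causality, long before the machine learning boom of the past decade. Its biggest advantage over machine learning is interpretability. With small samples, for example, logistic regression, a basic linear classifier, usually predicts no worse than neural networks or ensemble algorithms, and it is easier to interpret. As the amount of data grows, neural networks predict better and better, while regression-style statistical inference methods fall further behind. When the sample is small, we tend to care more about a model's explanatory power: because the data is limited, collinearity among features is easy to detect, feature selection takes place over only a few dimensions, and getting the best predictive performance within that controllable range is not hard.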

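Professor Nicholas Zabaras of the University of Notre Dame previously served as director of the Materials Process Design and Control Laboratory at Cornell University, as Hans Fischer Senior Fellow at the Institute for Advanced Study of the Technical University of Munich (TUM) in Germany, and as director of the Warwick Centre for Predictive Modelling at the University of Warwick in the UK. He is a Fellow of the American Society of Mechanical Engineers (ASME). One of the founders of uncertainty quantification (UQ), he established the first UQ journal, the International Journal for Uncertainty Quantification, and serves as its editor-in-chief. His research spans computational materials, big data and deep learning, probabilistic graphical models, and more.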

▌Resources

Link: https://www.zabaras.com/statisticalcomputing

Lecture notes and videos

Homework

Course info and references

Syllabus

Annual Notre Dame Symposium on "Bayesian Computing"


▌Lecture notes and videos

1. Introduction to Statistical Computing and Probability and Statistics

o Introduction to the course, books and references, objectives, organization; Fundamentals of probability and statistics, laws of probability, independency, covariance, correlation; The sum and product rules, marginal and conditional distributions; Random variables, moments, discrete and continuous distributions; The univariate Gaussian distribution.

[Video-Lecture] [Lecture Notes]

2. Introduction to Probability and Statistics (Continued)

o Binomial, Bernoulli, Multinomial, Multinoulli, Poisson, Student's t, Laplace, Gamma, Beta, Pareto, multivariate Gaussian and Dirichlet distributions; Joint probability distributions; Transformation of random variables; Central limit theorem and basic Monte Carlo approximations; Probability inequalities; Information theory review, KL divergence, Entropy, Mutual Information, Jensen's inequality.

[Video-Lecture] [Lecture Notes]

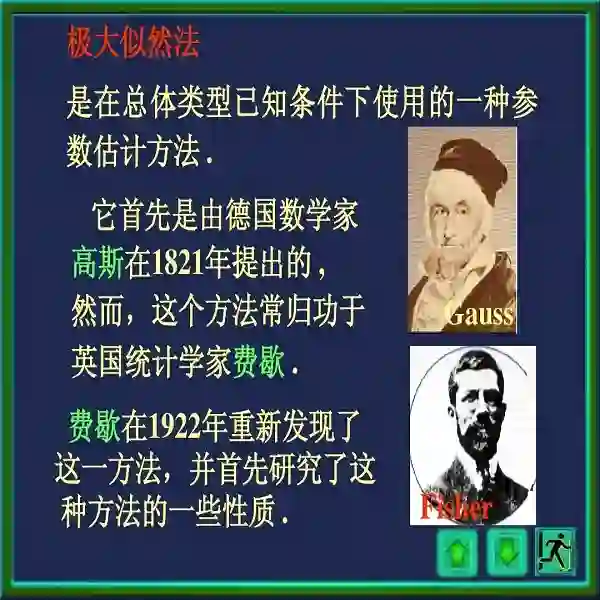

3. Information Theory, Multivariate Gaussian, MLE Estimation, Robbins-Monro Algorithm

o Information theory, KL divergence, entropy, mutual information, Jensen's inequality (continued); Central limit theorem examples, Checking the Gaussian nature of a data set; Multivariate Gaussian, Mahalanobis distance, geometric interpretation; Maximum Likelihood Estimation (MLE) for the univariate and multivariate Gaussian, sequential MLE estimation; Robbins-Monro algorithm for sequential MLE estimation.

[Video-Lecture] [Lecture Notes]
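As a rough illustration of the sequential MLE topic listed for this lecture, here is a minimal sketch (not taken from the course materials) of estimating a Gaussian mean one observation at a time. It assumes the standard update mu_n = mu_{n-1} + (x_n - mu_{n-1}) / n, which has the Robbins-Monro form with step size 1/n. The function name, the seed, and the synthetic N(2, 1) data are all hypothetical choices made for this example.

```python
import random


def sequential_gaussian_mean(samples):
    """Sequential MLE of a Gaussian mean, one observation at a time."""
    mu = 0.0
    for n, x in enumerate(samples, start=1):
        # mu_n = mu_{n-1} + a_n * (x_n - mu_{n-1}) with step size a_n = 1/n,
        # which satisfies the Robbins-Monro conditions
        # (the sum of a_n diverges, the sum of a_n^2 converges).
        mu += (x - mu) / n
    return mu


# Hypothetical data: 10,000 draws from N(2, 1) with a fixed seed.
rng = random.Random(0)
data = [rng.gauss(2.0, 1.0) for _ in range(10_000)]

mu_sequential = sequential_gaussian_mean(data)
mu_batch = sum(data) / len(data)  # batch MLE, for comparison
print(mu_sequential, mu_batch)
```

The sequential and batch estimates should agree up to floating-point rounding, since the 1/n update is just the sample mean rewritten recursively.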

4. Robbins-Monro for Sequential MLE, Curse of Dimensionality, Conditional and Marginal Gaussian Distributions

o Sequential MLE for the Gaussian, Robbins-Monro algorithm (continued); Back to the multivariate Gaussian, Mahalanobis distance, geometric interpretation, mean and moments, restricted forms; Curse of dimensionality, challenges in polynomial regression in high dimensions, volume/area of a sphere and hypercube in high dimensions, Gaussian distribution in high dimensions; Conditional and marginal Gaussian distributions, completing the square, Woodbury matrix inversion lemma, examples in interpolating noise-free data and data imputation, information form of the Gaussian.

[Video-Lecture] [Lecture Notes]

5. Likelihood Calculations, MAP Estimate and Regularized Least Squares, Linear Gaussian Models

o Information form of the Gaussian (continued); Bayesian inference and likelihood function calculation, additive and multiplicative errors; MAP estimate and regularized least squares; Estimating the mean of a Gaussian with a Gaussian prior; Applications to sensor fusion; Smoothness prior and interpolating noisy data.

[Video-Lecture] [Lecture Notes]

6. Introduction to Bayesian Statistics, Exponential Family of Distributions

o Parametric modeling, Sufficiency principle, Likelihood principle, Stopping rules, Conditionality principle, p-values and issues with frequentist statistics, MLE and the likelihood and conditionality principles; Inference in a Bayesian setting, posterior and predictive distributions, MAP estimate, Evidence, Sequential nature of Bayesian inference, Examples; Exponential family of distributions, Examples, Computing moments, Sufficiency and Neyman factorization, Sufficient statistics and MLE estimate.

[Video-Lecture] [Lecture Notes]

7. Exponential Family of Distributions and Generalized Linear Models, Bayesian Inference for the Multivariate Gaussian

o Exponential family of distributions, Computing moments, Neyman factorization, Sufficient statistics and MLE estimate (continued); Generalized Linear Models, Canonical Response, Batch and sequential IRLS algorithms; Bayesian inference for mean and variance/precision for the multivariate Gaussian, Wishart and inverse-Wishart distributions, MAP estimates and posterior marginals.

[Video-Lecture] [Lecture Notes]

8. Prior and Hierarchical Models

o Conjugate priors (continued) and limitations, mixture of conjugate priors; Non-informative priors, maximum entropy priors; Translation and scale invariant priors; Improper priors; Jeffreys priors; Hierarchical Bayesian models and empirical Bayes/type II maximum likelihood, Stein estimator.

[Video-Lecture] [Lecture Notes]

9. Introduction to Bayesian Linear Regression, Model Comparison and Selection

o Overfitting and MLE, Point estimates and least squares, posterior and predictive distributions, model evidence; Bayesian information criterion, Bayes factors, Occam's Razor, Bayesian model comparison and selection.

[Video-Lecture] [Lecture Notes]

10. Bayesian Linear Regression

o Linear basis function models, sequential learning, multiple outputs, data centering, Bayesian inference when σ² is unknown, Zellner's g-prior, uninformative semi-conjugate priors, introduction to relevance determination for Bayesian regression.

[Video-Lecture] [Lecture Notes]

11. Bayesian Linear Regression (Continued)

o The evidence approximation, Limitations of fixed basis functions, equivalent kernel approach to regression, Gibbs sampling for variable selection, variable and model selection.

[Video-Lecture] [Lecture Notes]

12. Implementation of Bayesian Regression and Variable Selection

o The caterpillar regression problem; Conjugate priors, conditional and marginal posteriors, predictive distribution, influence of the conjugate prior; Zellner's g-prior, marginal posterior mean and variance, credible intervals; Jeffreys' non-informative prior, Zellner's non-informative g-prior, point null hypothesis and calculation of Bayes factors for the selection of explanatory input variables; Variable selection, model comparison, variable selection prior, sampling search for the most probable model, Gibbs sampling for variable selection; Implementation details.

[Video-Lecture] [Lecture Notes]

13. Introduction to Monte Carlo Methods, Sampling from Discrete and Continuous Distributions

o Review of the Central Limit Theorem, Law of Large Numbers; Calculation of π, Indicator functions and Monte Carlo error estimates; Monte Carlo estimators, properties, coefficient of variation, convergence, MC and the curse of dimensionality; MC integration in high dimensions, optimal number of MC samples; Sample representation of the MC estimator; Bayes factors estimation with Monte Carlo; Sampling from discrete distributions; Reverse sampling from continuous distributions; Transformation methods, the Box-Muller algorithm, sampling from the multivariate Gaussian.

[Video-Lecture] [Lecture Notes]
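The "calculation of π" item above is a classic first Monte Carlo example. The sketch below is an illustration under stated assumptions rather than the course's own code: it draws points uniformly in the unit square, uses the indicator of the quarter disc, and reports a binomial standard error. The function name, sample size, and seed are hypothetical.

```python
import math
import random


def estimate_pi(n_samples, seed=0):
    """Monte Carlo estimate of pi with a standard error (illustrative sketch)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        # Indicator function of the quarter disc x^2 + y^2 <= 1.
        if x * x + y * y <= 1.0:
            hits += 1
    p_hat = hits / n_samples  # estimates the area pi / 4
    pi_hat = 4.0 * p_hat
    # Standard error of 4 * (mean of Bernoulli indicators).
    std_err = 4.0 * math.sqrt(p_hat * (1.0 - p_hat) / n_samples)
    return pi_hat, std_err


pi_hat, std_err = estimate_pi(200_000)
print(pi_hat, std_err)
```

The standard error shrinks like 1/sqrt(n), so each additional digit of accuracy costs roughly 100 times more samples.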

14. Reverse Sampling, Transformation Methods, Composition Methods, Accept-Reject Methods, Stratified/Systematic Sampling

o Sampling from a discrete distribution; Reverse sampling for continuous distributions; Transformation methods, Box-Muller algorithm, sampling from the multivariate Gaussian; Simulation by composition, accept-reject sampling; Conditional Monte Carlo; Stratified sampling and systematic sampling.

[Video-Lecture] [Lecture Notes]
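For readers unfamiliar with the Box-Muller algorithm named in this lecture, the following is a minimal sketch of the basic (trigonometric) form of the transform, written for this article rather than taken from the lecture notes. The seed and sample count are arbitrary assumptions.

```python
import math
import random


def box_muller(rng):
    """Turn two independent Uniform(0, 1) draws into two independent N(0, 1) draws."""
    u1 = 1.0 - rng.random()  # lies in (0, 1], so log(u1) is finite
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    angle = 2.0 * math.pi * u2
    return radius * math.cos(angle), radius * math.sin(angle)


# Hypothetical check: the sample mean and variance should be near 0 and 1.
rng = random.Random(1)
draws = []
for _ in range(50_000):
    draws.extend(box_muller(rng))

mean = sum(draws) / len(draws)
variance = sum((z - mean) ** 2 for z in draws) / len(draws)
print(mean, variance)
```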

15. Importance Sampling

o Importance sampling methods, sampling from a Gaussian mixture; Optimal importance sampling distribution, normalized importance sampling; Asymptotic variance/Delta method, asymptotic bias; Applications to Bayesian inference; Importance sampling in high dimensions, importance sampling vs rejection sampling; Solving Ax=b with importance sampling, computing integrals with singularities, other examples.

[Video-Lecture] [Lecture Notes]
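To make "normalized importance sampling" concrete, here is a small sketch under assumptions chosen for this article: the target is an unnormalized standard Gaussian, the proposal is N(0, 2²), and the quantity estimated is the second moment (true value 1). All function names and parameters are hypothetical, and the effective sample size shown is the common (sum of weights)² / (sum of squared weights) approximation.

```python
import math
import random


def normalized_importance_sampling(f, log_target, log_proposal, sample_proposal, n, rng):
    """Self-normalized importance sampling estimate of E_target[f(x)]."""
    xs = [sample_proposal(rng) for _ in range(n)]
    log_w = [log_target(x) - log_proposal(x) for x in xs]
    shift = max(log_w)  # subtract the max for numerical stability
    w = [math.exp(lw - shift) for lw in log_w]
    total = sum(w)
    estimate = sum(wi * f(x) for wi, x in zip(w, xs)) / total
    ess = total ** 2 / sum(wi * wi for wi in w)  # effective sample size
    return estimate, ess


def log_target(x):
    # Unnormalized N(0, 1); the missing constant cancels after normalization.
    return -0.5 * x * x


def log_proposal(x):
    # Log density of N(0, 2^2).
    return -0.5 * (x / 2.0) ** 2 - math.log(2.0 * math.sqrt(2.0 * math.pi))


def sample_proposal(rng):
    return rng.gauss(0.0, 2.0)


rng = random.Random(2)
second_moment, ess = normalized_importance_sampling(
    lambda x: x * x, log_target, log_proposal, sample_proposal, 50_000, rng
)
print(second_moment, ess)
```

A proposal wider than the target keeps the weights bounded here; a proposal narrower than the target would make the weight variance blow up.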

16. Gibbs Sampling

o Review of importance sampling, Solving Ax=b with importance sampling, Sampling Importance Resampling (continued); Gibbs sampling, Systematic and Random scans, Block and Metropolized Gibbs, Application to variable selection in Bayesian regression; MCMC, Metropolis-Hastings, Examples.

[Video-Lecture] [Lecture Notes]
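A systematic-scan Gibbs sampler is easiest to see on a toy target. The sketch below is an illustrative assumption, not the course's example: it samples a zero-mean, unit-variance bivariate Gaussian with correlation rho by alternating the two exact conditionals x | y ~ N(rho·y, 1 − rho²) and y | x ~ N(rho·x, 1 − rho²). The function name, burn-in length, and seed are hypothetical.

```python
import math
import random


def gibbs_bivariate_gaussian(rho, n_samples, rng, burn_in=500):
    """Systematic-scan Gibbs sampler for a standard bivariate Gaussian."""
    cond_std = math.sqrt(1.0 - rho * rho)
    x, y = 0.0, 0.0
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_std)  # draw x given the current y
        y = rng.gauss(rho * x, cond_std)  # draw y given the new x
        if i >= burn_in:
            samples.append((x, y))
    return samples


rng = random.Random(3)
samples = gibbs_bivariate_gaussian(rho=0.8, n_samples=20_000, rng=rng)

# Empirical correlation of the chain, to compare against rho.
n = len(samples)
mean_x = sum(s[0] for s in samples) / n
mean_y = sum(s[1] for s in samples) / n
cov = sum((s[0] - mean_x) * (s[1] - mean_y) for s in samples) / n
var_x = sum((s[0] - mean_x) ** 2 for s in samples) / n
var_y = sum((s[1] - mean_y) ** 2 for s in samples) / n
corr = cov / math.sqrt(var_x * var_y)
print(corr)
```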

17. Markov Chain Monte Carlo and Metropolis-Hastings Algorithm

o MCMC, Averaging along the chain, Ergodic Markov chains; Metropolis algorithm, Metropolis-Hastings, Examples; Random Walk Metropolis-Hastings, Independent Metropolis-Hastings; Metropolis-adjusted Langevin algorithm; Combinations of transition kernels, Simulated Annealing.

[Video-Lecture] [Lecture Notes]
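As a companion to the random walk Metropolis-Hastings topic, here is a minimal sketch written for this article. It assumes a one-dimensional unnormalized standard Gaussian target and a symmetric Gaussian proposal, so the acceptance ratio reduces to the ratio of target densities. The step size 2.4, the burn-in, and the seed are hypothetical tuning choices.

```python
import math
import random


def random_walk_metropolis(log_target, x0, n_steps, step, rng):
    """Random-walk Metropolis with a symmetric Gaussian proposal."""
    x, log_p = x0, log_target(x0)
    chain = []
    accepted = 0
    for _ in range(n_steps):
        x_new = x + step * rng.gauss(0.0, 1.0)
        log_p_new = log_target(x_new)
        # Accept with probability min(1, p(x_new) / p(x)); the proposal
        # densities cancel because the proposal is symmetric.
        if math.log(1.0 - rng.random()) < log_p_new - log_p:
            x, log_p = x_new, log_p_new
            accepted += 1
        chain.append(x)  # a rejected move repeats the current state
    return chain, accepted / n_steps


def log_standard_normal(x):
    # Unnormalized log density of N(0, 1).
    return -0.5 * x * x


rng = random.Random(4)
chain, acceptance_rate = random_walk_metropolis(log_standard_normal, 0.0, 50_000, 2.4, rng)
kept = chain[1_000:]  # discard a short burn-in
mean = sum(kept) / len(kept)
variance = sum((z - mean) ** 2 for z in kept) / len(kept)
print(mean, variance, acceptance_rate)
```

Averaging along the chain, as the lecture outline puts it, is what the final mean and variance do; consecutive states are correlated, so the effective number of samples is smaller than the chain length.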

18. Introduction to State Space Models and Sequential Importance Sampling

o The state space model; Examples, Tracking problem, Speech enhancement, volatility model; The state space model with observations, examples; Bayesian inference in state space models, forward filtering, forward-backward filtering; Online parameter estimation; Monte Carlo for the state space model, optimal importance distribution, sequential importance sampling.

[Video-Lecture] [Lecture Notes]

19. Sequential Importance Sampling with Resampling

o Sequential importance sampling (continued); Optimal importance distribution, locally optimal importance distribution, suboptimal importance distribution; Examples, Robot localization, Tracking, Stochastic volatility; Resampling, Effective sample size, multinomial resampling, sequential importance sampling with resampling, Various examples; Rao-Blackwellised particle filter, mixture of Kalman filters, switching LG-SSMs, FastSLAM; Error estimates, degeneracy, convergence.

[Video-Lecture] [Lecture Notes]

20. Sequential Importance Sampling with Resampling (Continued)

o General framework for Sequential Importance Sampling Resampling; Growing a polymer in two dimensions; Sequential Monte Carlo for static problems; Online parameter estimation; SMC for smoothing.

[Video-Lecture] [Lecture Notes]

21. Sequential Monte Carlo (Continued) and Conditional Linear Gaussian Models

o Online parameter estimation; SMC for smoothing; Kalman filter review for linear Gaussian models; Sequential Monte Carlo for conditional linear Gaussian models, Rao-Blackwellized particle filter, applications; Time series models; Partially observed linear Gaussian models; Dynamic Tobit and Dynamic Probit models.

[Video-Lecture] [Lecture Notes]

22. Reversible Jump MCMC

o Trans-dimensional MCMC, Motivation with autoregression and finite mixture of Gaussians models; Designing trans-dimensional moves, Birth/Death moves, Split/Merge moves, mixture of moves; Bayesian RJ-MCMC models for autoregressions and Gaussian mixtures.

[Video-Lecture] [Lecture Notes]

23. Introduction to Expectation-Maximization (EM)

o Latent variable models; K-Means, image compression; Mixture of Gaussians, posterior responsibilities and latent variable view; Mixture of Bernoulli distributions; Generalization of EM, Variational inference perspective.

[Video-Lecture] [Lecture Notes]

24. Expectation-Maximization (Continued)

o Mixture of Gaussians; Mixture of Bernoulli distributions; EM for Bayesian linear regression; MAP estimation and EM; Incremental EM; Fitting with missing data using EM; Variational inference perspective.

[Video-Lecture] [Lecture Notes]

25. Principal Component Analysis

o Continuous latent variable models, low-dimensional manifold of a data set, generative point of view, unidentifiability; Principal component analysis (PCA), Maximum variance formulation, minimum error formulation, PCA versus SVD; Canonical correlation analysis; Applications, Off-line digit images, Whitening of the data with PCA, PCA for visualization; PCA for high-dimensional data; Probabilistic PCA, Maximum likelihood solution, EM algorithm, model selection.

[Video-Lecture] [Lecture Notes]

26. Continuous Latent Variable Models

o Probabilistic PCA, Maximum likelihood solution, EM algorithm, Bayesian PCA, Kernel PCA.

[Video-Lecture] [Lecture Notes]

27. Kernel Methods and Introduction to Gaussian Processes

o Dual representation for regression, kernel functions; Kernel design, combining kernels, Gaussian kernels, probabilistic kernels, Fisher kernel; Radial basis functions, Nadaraya-Watson model; Gaussian processes, GPs for regression vs. a basis function approach, learning parameters, automatic relevance determination; Gaussian process classification, Laplace approximation, connection to Bayesian neural nets.

[Video-Lecture] [Lecture Notes]

28. Gaussian Processes for Classification Problems, Course Summary

o Gaussian process classification, connection of GPs to Bayesian neural nets; Summary of the course: Probability inequalities, Law of large numbers, MLE estimates and bias, Bayes' theorem and posterior exploration, predictive distribution, marginal likelihood, exponential family and conjugate priors, Empirical Bayes and evidence approximation, Sampling methods, Rejection methods, importance sampling, MCMC, Gibbs sampling, Sequential importance sampling and particle methods, reversible jump MCMC, Latent variables and expectation maximization, Model reduction, probabilistic PCA and generative models.

[Video-Lecture] [Lecture Notes]

▌Homework

· Sept. 13, Homework 1

o Working with multivariate Gaussians, exponential family distributions, posterior for (μ, σ²) for a Gaussian likelihood with conjugate prior, Bayesian information criterion (BIC), whitening vs standardizing the data.

[Homework][Solution][Software]

· Sept. 20, Homework 2

o Computing HPD intervals for posterior distributions, Monte Carlo approximations for Bayes factors, regularized MAP estimation in multivariate Gaussians, Bayesian inference in sensor fusion, Jeffreys priors, hierarchical prior models.

[Homework][Solution][Software]

· Oct. 4, Homework 3

o Bayesian linear regression, Variable and model selection, Gibbs sampling for variable selection, informative Zellner's g-prior, Jeffreys' non-informative prior, Zellner's non-informative g-prior.

[Homework][Solution][Software]

· Oct. 23, Homework 4

o Monte Carlo Methods, Accept-Reject Sampling, Sampling from the Gamma distribution with Cauchy distribution as proposal, Metropolis-Hastings and Gibbs sampling, Hamiltonian MC methods, applications to Bayesian Regression.

[Homework][Solution][Software]

· Nov. 6, Homework 5

o Sequential Monte Carlo Methods, SMC for the Volatility Model, SMC for modeling a polymer chain (self-avoiding paths), SMC for solving integral equations, particle filters for linear Gaussian state-space models and comparison with Kalman filtering.

[Homework][Solution][Software]

· Nov. 27, Homework 6

o Principal Component Analysis, Bayesian PCA, EM Algorithm for PCA, Expectation Maximization, Gaussian Process Modeling.

[Homework][Solution][Software]

▌Course info and references

Credit: 3 Units

Lectures: Mond. & Wedn. 3:30 -- 4:45 pm, DeBartolo Hall 126.

Recitation: Friday. 3:30 -- 4:45 pm, DeBartolo Hall 126.

Professor: Nicholas Zabaras, 311 I Cushing Hall, nzabaras@gmail.com

Teaching Assistant (Volunteer): Jize Zhang, Jize.Zhang.204@nd.edu

Office hours: Mond. & Wedn. 5:00 -- 6:00 p.m., 311I Cushing.

Course description: The course covers selective topics on Bayesian scientific computing relevant to high-dimensional data-driven engineering and scientific applications. An overview of Bayesian computational statistics methods will be provided including Monte Carlo methods, exploration of posterior distributions, model selection and validation, MCMC and Sequential MC methods and inference in probabilistic graphical models. Bayesian techniques for building surrogate models of expensive computer codes will be introduced including regression methods for uncertainty quantification, Gaussian process modeling and others. The course will demonstrate these techniques with a variety of scientific and engineering applications including among others inverse problems, dynamical system identification, tracking and control, uncertainty quantification of complex multiscale systems, physical modeling in random media, and optimization/design in the presence of uncertainties. The students will be encouraged to integrate the course tools with their own research topics.

Intended audience: Graduate Students in Mathematics/Statistics, Computer Science, Engineering, Physical/Chemical/Biological/Life Sciences.

References of General Interest: The course lectures will become available on the course web site. For in depth study, a list of articles and book chapters from the current literature will also be provided to enhance the material of the lectures. There is no required text for this course. Some important books that can be used for general background reading in the subject areas of the course include the following:

References on Bayesian fundamentals:

C.P. Robert, The Bayesian Choice: from Decision-Theoretic Motivations to Computational Implementation, Springer-Verlag, New York, 2001 (also available as ebook, complete list of slides based on the book also available).

A. Gelman, J.B. Carlin, H.S. Stern and D.B. Rubin, Bayesian Data Analysis, Chapman & Hall/CRC, 2nd edition, 2003.

References on Bayesian computation:

J.S. Liu, Monte Carlo Strategies in Scientific Computing, Springer Series in Statistics, 2001.

C.P. Robert and G. Casella, Monte Carlo Statistical Methods, Springer Series in Statistics, 2nd edition, 2004 (complete list of slides based on the book also available).

W.R. Gilks, et al., Markov Chain Monte Carlo in Practice, Chapman & Hall/CRC, 1995.

J. Kaipio and E. Somersalo, Statistical and Computational Inverse Problems, Springer, 2004 (an ebook is available for download to Notre Dame students).

References on Probability Theory and Information Science:

E.T. Jaynes, Probability Theory: The Logic of Science, Cambridge University Press, 2003 (a free ebook is also available).

D.J.C. MacKay, Information Theory, Inference, and Learning Algorithms, Cambridge University Press, 2003 (a free ebook is also available from the author's web site).

References on (Bayesian) Machine Learning:

C.M. Bishop, Pattern Recognition and Machine Learning, Springer, 2007.

K.P. Murphy, Machine Learning: A Probabilistic Perspective, MIT Press, 2012 (a free ebook is also available from the author's web site).

D. Barber, Bayesian Reasoning and Machine Learning, Cambridge University Press, 2012 (a free ebook is also available from the author's web site).

C.E. Rasmussen and C.K.I. Williams, Gaussian Processes for Machine Learning, MIT Press, 2006 (a free ebook is also available from the Gaussian Processes web site).

M.I. Jordan, Learning in Graphical Models, MIT Press, 1998.

D. Koller and N. Friedman, Probabilistic Graphical Models, MIT Press, 2009.

Homework: assigned every three to four lectures. Most of the homework will require implementation and application of algorithms discussed in class. We anticipate between five and seven homework sets. All homework solutions and affiliated computer programs should be emailed by midnight of the due date to this email address. All attachments should arrive in an appropriately named zipped directory (e.g. HW1_Submission_YourName.rar). We would prefer typed homework (include in your submission all original files, e.g. LaTeX, and a Readme file for compiling and testing your software).

Term project: A project is required in mathematical or computational aspects of the course. Students are encouraged to investigate aspects of Bayesian computing relevant to their own research. A short written report (in the format of NIPS papers) is required as well as a presentation. Project presentations will be given at the end of the semester as part of a day or two long symposium.

Grading: Homework 60% and Project 40%.

Prerequisites: Linear Algebra, Probability theory, Introduction to Statistics and Programming (any language). The course will require significant effort, especially from those not familiar with computational statistics. It is a course intended for those who value the role of Bayesian inference and machine learning in their research.

▌Syllabus

· Review of probability and statistics

o Laws of probability, Bayes' Theorem, Independency, Covariance, Correlation, Conditional probability, Random variables, Moments

o Markov and Chebyshev Inequalities, transformation of PDFs, Central Limit Theorem, Law of Large Numbers

o Parametric and non-parametric estimation

o Operations on Multivariate Gaussians, computing marginals and conditional distributions, curse of dimensionality

· Introduction to Bayesian Statistics

o Bayes' rule, estimators and loss functions

o Bayes' factors, prior/likelihood & posterior distributions

o Density estimation methods

o Bayesian model validation

· Introduction to Monte Carlo Methods

o Importance sampling

o Variance reduction techniques

· Markov Chain Monte Carlo Methods

o Metropolis-Hastings

o Gibbs sampling

o Hybrid algorithms

o Trans-dimensional sampling

· Sequential Monte Carlo Methods and applications

o Target tracking/recognition

o Estimation of signals under uncertainty

o Inverse problems

o Optimization (simulated annealing)

· Uncertainty Quantification Methods

o Regression models in high dimensions

o Gaussian process modeling

o Forward uncertainty propagation

o Uncertainty propagation in time dependent problems

o Bayesian surrogate models

o Inverse/Design uncertainty characterization

o Optimization and optimal control problems under uncertainty

· Uncertainty Quantification using Graph Theoretic Approaches

o Data-driven multiscale modeling

o Nonparametric Bayesian formulations

Reference link:

https://www.zabaras.com/statisticalcomputing
