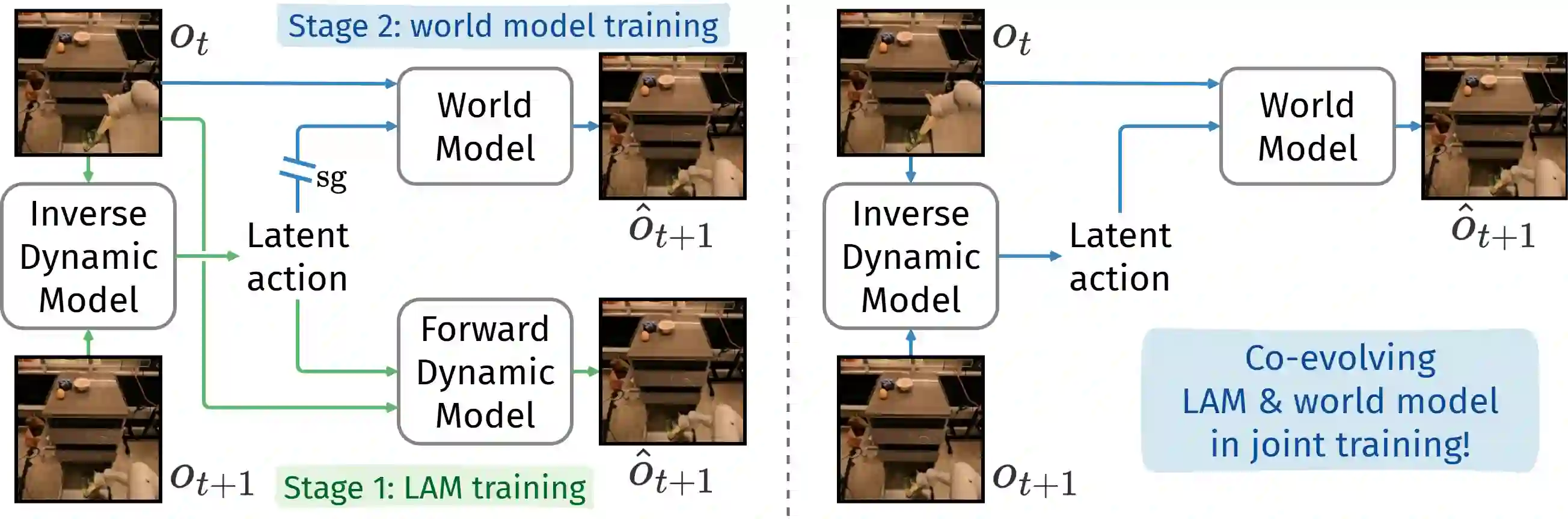

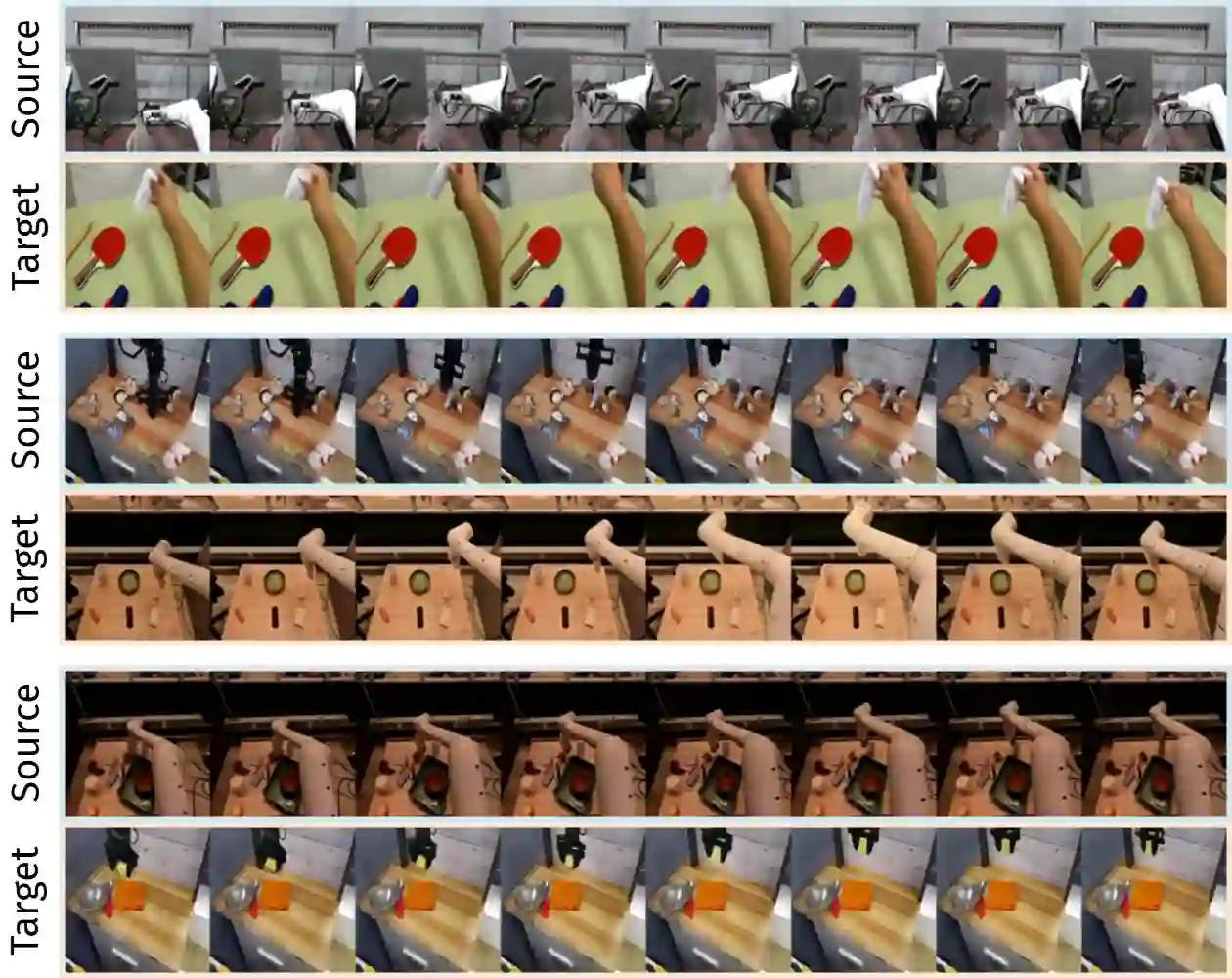

Adapting pre-trained video generation models into controllable world models via latent actions is a promising step towards creating generalist world models. The dominant paradigm adopts a two-stage approach that trains latent action model (LAM) and the world model separately, resulting in redundant training and limiting their potential for co-adaptation. A conceptually simple and appealing idea is to directly replace the forward dynamic model in LAM with a powerful world model and training them jointly, but it is non-trivial and prone to representational collapse. In this work, we propose CoLA-World, which for the first time successfully realizes this synergistic paradigm, resolving the core challenge in joint learning through a critical warm-up phase that effectively aligns the representations of the from-scratch LAM with the pre-trained world model. This unlocks a co-evolution cycle: the world model acts as a knowledgeable tutor, providing gradients to shape a high-quality LAM, while the LAM offers a more precise and adaptable control interface to the world model. Empirically, CoLA-World matches or outperforms prior two-stage methods in both video simulation quality and downstream visual planning, establishing a robust and efficient new paradigm for the field.

翻译:通过潜在动作将预训练的视频生成模型适配为可控世界模型,是构建通用世界模型的一个有前景的步骤。主流范式采用两阶段方法,分别训练潜在动作模型和世界模型,这导致了冗余的训练并限制了它们协同适应的潜力。一个概念上简单且吸引人的想法是直接用强大的世界模型替换潜在动作模型中的前向动态模型并进行联合训练,但这并非易事,且容易导致表征崩溃。在本工作中,我们提出了CoLA-World,首次成功实现了这种协同范式,通过一个关键的热身阶段解决了联合学习的核心挑战,该阶段有效地将从零开始的潜在动作模型表征与预训练的世界模型对齐。这开启了一个协同演化循环:世界模型充当知识渊博的导师,提供梯度以塑造高质量的潜在动作模型,而潜在动作模型则为世界模型提供了一个更精确、适应性更强的控制接口。实验表明,CoLA-World在视频模拟质量和下游视觉规划任务上均匹配或超越了先前的两阶段方法,为该领域建立了一个稳健且高效的新范式。