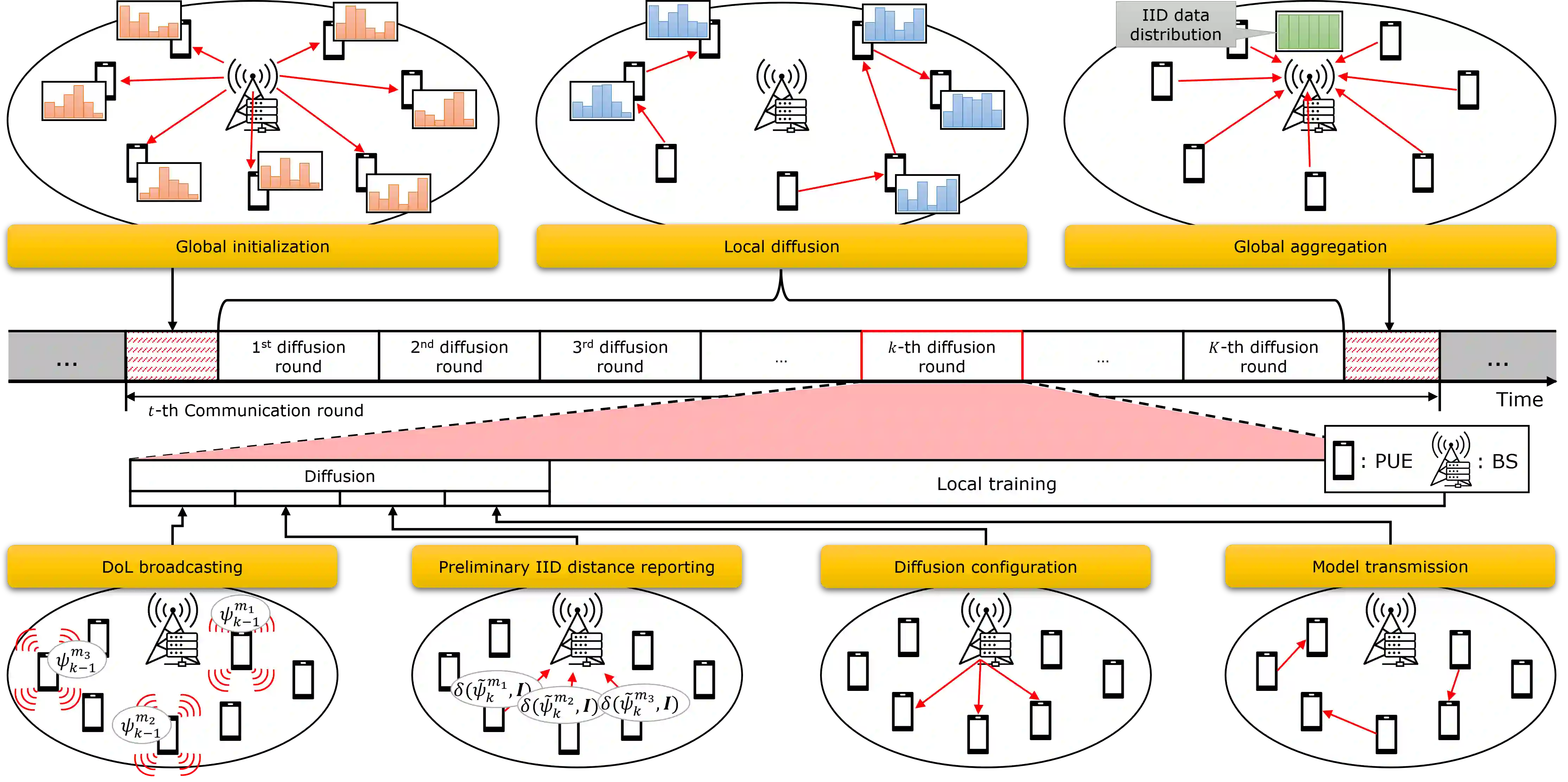

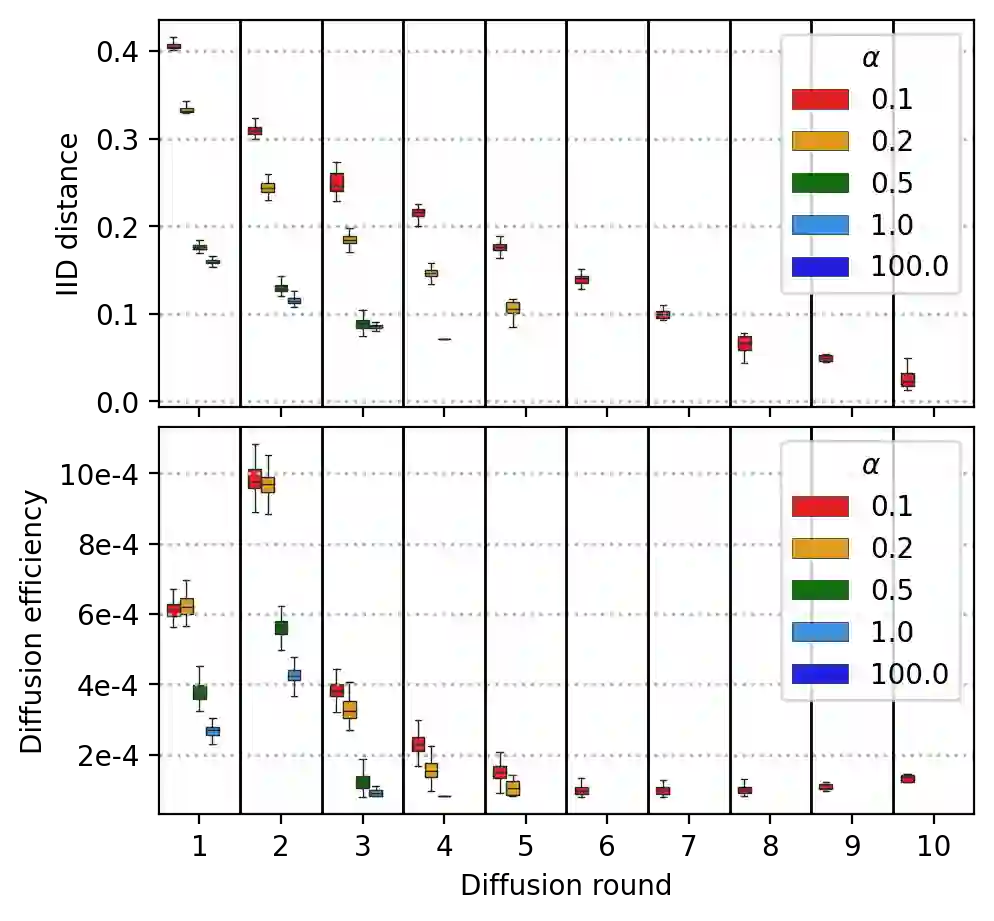

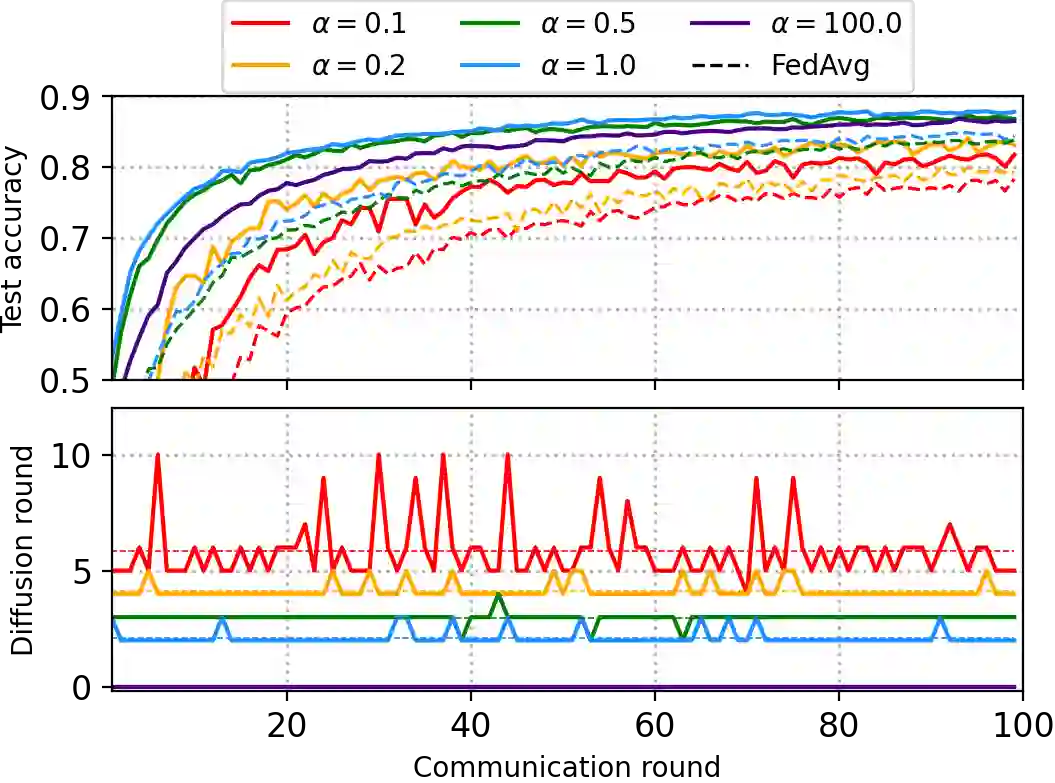

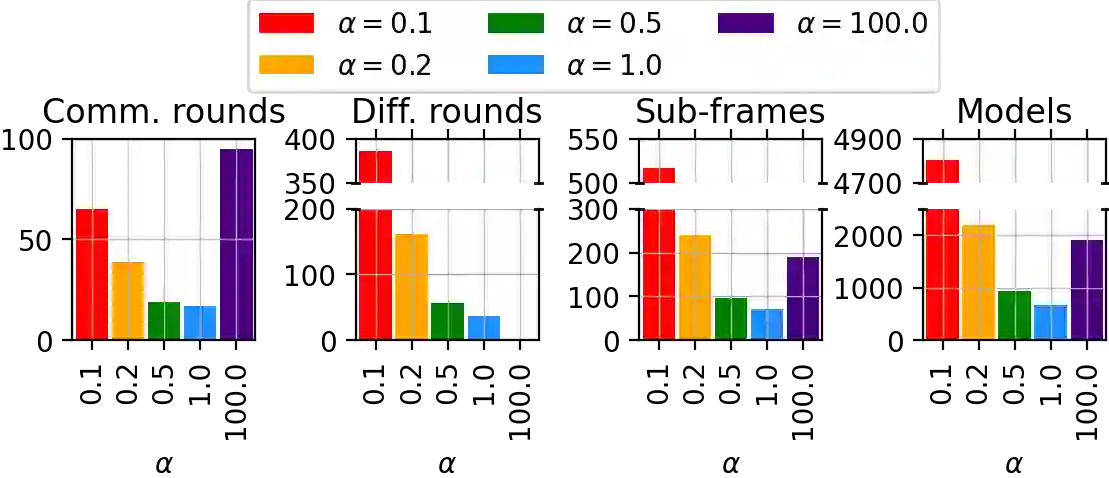

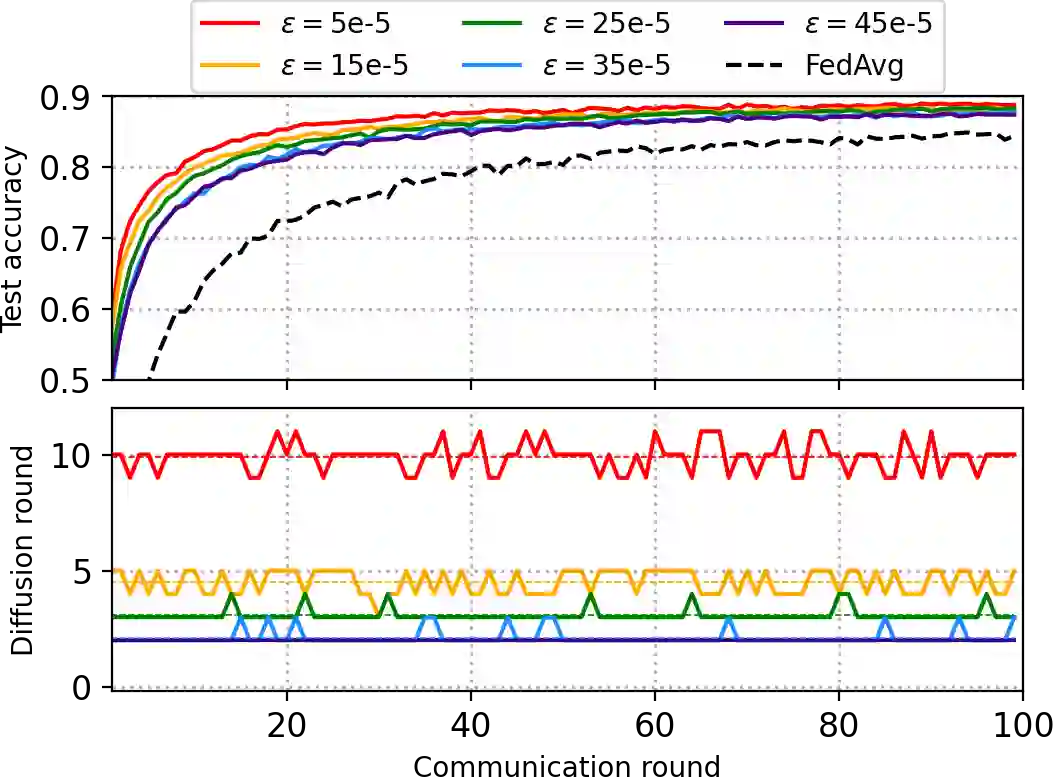

Federated learning (FL) is a novel learning paradigm that addresses the privacy leakage challenge of centralized learning. However, in FL, users with non-independent and identically distributed (non-IID) data can deteriorate the performance of the global model. Specifically, the global model suffers from weight divergence owing to non-IID data. To address this challenge, we propose FedDif, a novel diffusion strategy for machine learning (ML) models that maximizes FL performance with non-IID data. In FedDif, users spread local models to neighboring users over device-to-device (D2D) communications, so that each local model experiences different data distributions before parameter aggregation. Furthermore, we theoretically demonstrate that FedDif can circumvent the weight divergence challenge. Building on this theoretical basis, we propose a communication-efficient diffusion strategy for ML models that determines the trade-off between learning performance and communication cost based on auction theory. Performance evaluation results show that FedDif improves the test accuracy of the global model by 11% compared with baseline FL under non-IID settings. Moreover, FedDif improves communication efficiency, in terms of the number of transmitted sub-frames and models, by a factor of 2.77 compared with the latest methods.
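The core idea, letting each local model visit several users' data before aggregation, can be illustrated with a toy simulation. This is a hypothetical sketch, not the authors' FedDif algorithm: it tracks only the per-class sample counts each model has been trained on (no actual training), measures imbalance with an assumed L1 distance to the uniform class distribution (`iid_distance`), replaces the auction-based model-to-user assignment with a simple greedy rule (`diffuse`), and ignores the D2D neighborhood constraint by letting any user receive any model.

```python
import numpy as np


def iid_distance(counts: np.ndarray) -> float:
    """L1 distance between a model's accumulated label distribution and uniform.

    Assumed metric for illustration only: 0 means the model has been trained
    on a class-balanced (IID-like) mix of data.
    """
    p = counts / counts.sum()
    return float(np.abs(p - 1.0 / len(p)).sum())


def diffuse(user_counts: np.ndarray, rounds: int) -> np.ndarray:
    """Simulate diffusing one model per user for `rounds` hops.

    user_counts[u, c] is the number of class-c samples held by user u.
    Returns acc[m, c], the class counts model m has been trained on.
    The greedy assignment below is a hypothetical stand-in for the
    auction-based strategy described in the abstract.
    """
    n_users = user_counts.shape[0]
    # Each model starts by training on its owner's local data.
    acc = user_counts.astype(float).copy()
    visited = [{u} for u in range(n_users)]
    for _ in range(rounds):
        free = set(range(n_users))  # each user trains at most one model per round
        # Serve the least-balanced models first (stable sort keeps ties in order).
        order = sorted(range(n_users), key=lambda m: -iid_distance(acc[m]))
        for m in order:
            candidates = [u for u in sorted(free) if u not in visited[m]]
            if not candidates:
                continue  # no unvisited free user: this model stays put this round
            # Pick the user whose data makes this model's mix closest to uniform.
            best = min(candidates, key=lambda u: iid_distance(acc[m] + user_counts[u]))
            acc[m] += user_counts[best]
            visited[m].add(best)
            free.remove(best)
    return acc


if __name__ == "__main__":
    # Extreme non-IID setup: each of 4 users holds 10 samples of a single class.
    counts = 10 * np.eye(4)
    print([iid_distance(row) for row in counts])  # each model starts at 1.5
    after = diffuse(counts, rounds=3)
    print([iid_distance(row) for row in after])   # every model reaches 0.0
```

With four users that each hold a single class, three diffusion rounds are enough for every model to see all classes in this toy setting. A realistic version would also account for the communication cost of each hop, which is the trade-off FedDif resolves with auction theory.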