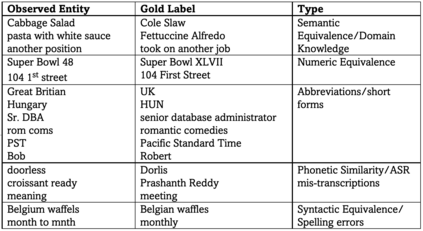

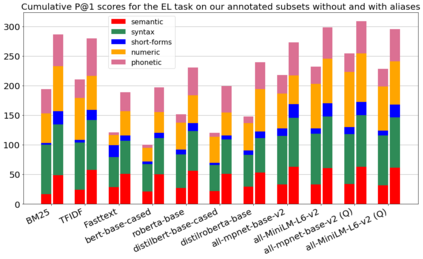

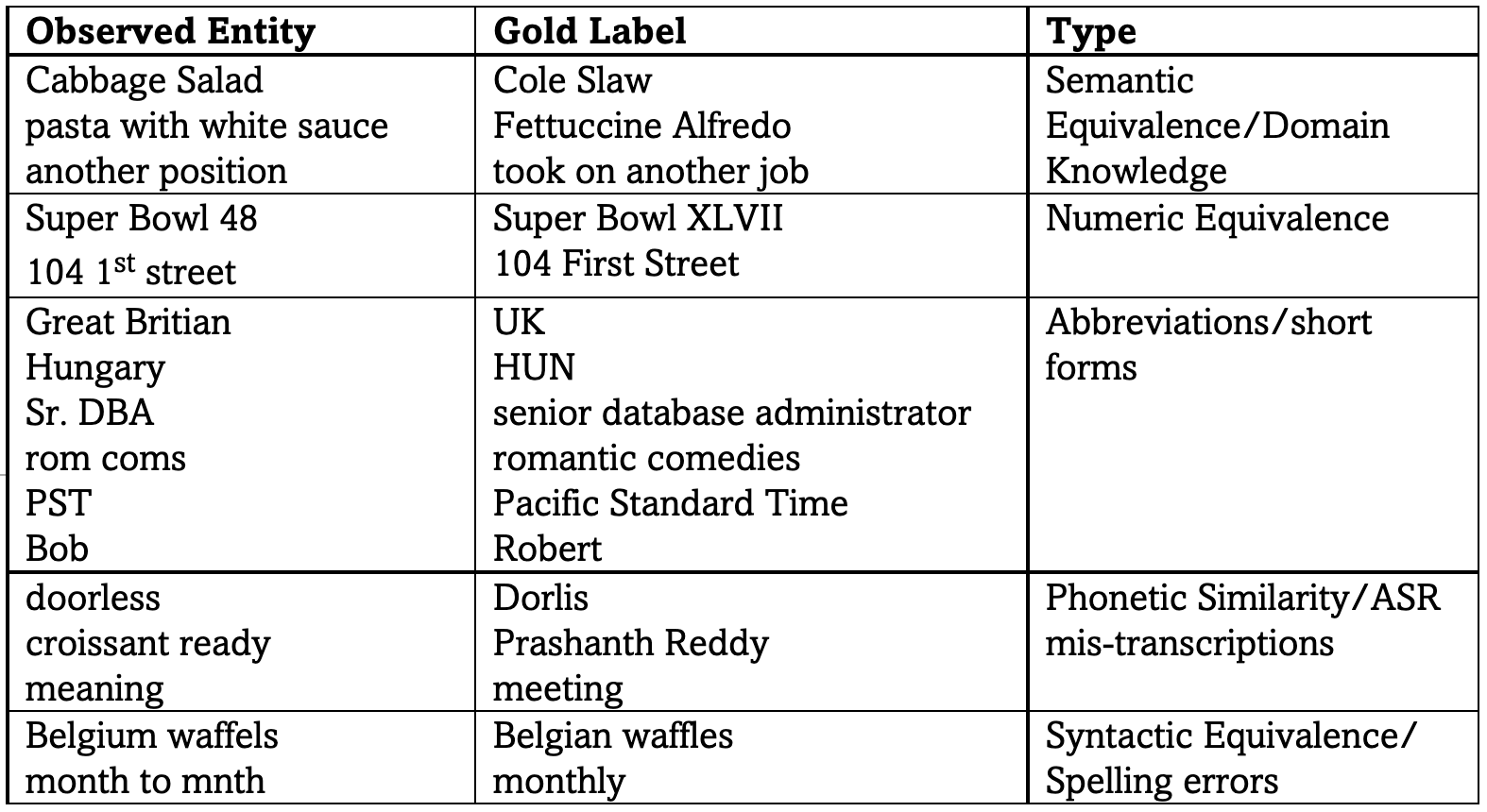

The wide applicability of pretrained transformer models (PTMs) for natural language tasks is well demonstrated, but their ability to comprehend short phrases of text is less explored. To this end, we evaluate different PTMs through the lens of unsupervised Entity Linking in task-oriented dialog across five characteristics: syntactic, semantic, short-form, numeric, and phonetic. Our results show that several of the PTMs produce sub-par results compared to traditional techniques, albeit competitive with other neural baselines. We find that some of their shortcomings can be addressed by using PTMs fine-tuned for text-similarity tasks, which show an improved ability to capture semantic and syntactic correspondences, as well as some improvements for short-form, numeric, and phonetic variations in entity mentions. We perform a qualitative analysis to understand nuances in their predictions and discuss scope for further improvements. Code can be found at https://github.com/murali1996/el_tod
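Unsupervised entity linking, in the sense used here, can be framed as ranking every entry in a knowledge-base catalog by its similarity to a mention, with no labeled training pairs. The minimal sketch below illustrates that framing only; it is not the authors' implementation. As an assumption, it uses character-trigram Jaccard overlap as a stand-in for a "traditional" string-matching baseline (the abstract does not say which traditional techniques were used). The `score` parameter is where a PTM-based similarity, for example cosine similarity between sentence embeddings, could be plugged in instead. The function names and the example catalog are hypothetical.

```python
from typing import Callable, List, Set, Tuple


def char_ngrams(text: str, n: int = 3) -> Set[str]:
    """Return the set of character n-grams of a lowercased, space-padded string."""
    padded = f" {text.lower()} "
    return {padded[i:i + n] for i in range(max(len(padded) - n + 1, 1))}


def jaccard_similarity(a: str, b: str) -> float:
    """Jaccard overlap of character trigrams (assumed stand-in for a traditional baseline)."""
    grams_a, grams_b = char_ngrams(a), char_ngrams(b)
    union = grams_a | grams_b
    if not union:
        return 0.0
    return len(grams_a & grams_b) / len(union)


def link_entity(
    mention: str,
    catalog: List[str],
    score: Callable[[str, str], float] = jaccard_similarity,
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Rank catalog entities by similarity to the mention; no training data is used.

    Hypothetical helper: swap `score` for an embedding-based similarity to
    compare a PTM against the string-matching baseline.
    """
    scored = [(entity, score(mention, entity)) for entity in catalog]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical catalog of entity names from a task-oriented dialog domain.
    catalog = ["Golden Gate Bridge", "Golden Curry", "Pizza Hut Cherry Hinton"]
    # A truncated user mention; the trigram baseline should still rank "Golden Curry" first.
    for entity, sim in link_entity("golden curr", catalog):
        print(f"{entity}: {sim:.3f}")
```

A surface-form baseline like this handles small typos and truncations well, but it has no notion of meaning. That limitation is why semantic variations are where embedding-based PTMs would be expected to help, while short-form, numeric, and phonetic variations can remain difficult for both.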

翻译:受过训练的变压器模型(PTMs)对自然语言任务的广泛适用性已得到充分证明,但是它们理解短文本短语的能力没有那么深入探讨。为此,我们从未受监督的实体的镜头上评价不同的PTMs, 将任务导向的对话联系起来,涉及五种特点 -- -- 综合、语义、短形、数字和语音。我们的结果表明,一些PTMs与其他神经基线相比,与传统技术相比产生分级的结果,尽管与其他神经基线相比具有竞争力。我们发现,它们的一些缺点可以通过使用微调的PTMs处理,以适应文本相似性任务,这表明理解语义和综合对应的能力有所提高,以及实体中短格式、数字和语音变化的一些改进。我们进行了定性分析,以了解其预测中的细微之处,并讨论进一步改进的范围。我们可在https://github.com/ murali1996/el_tod查阅代码。