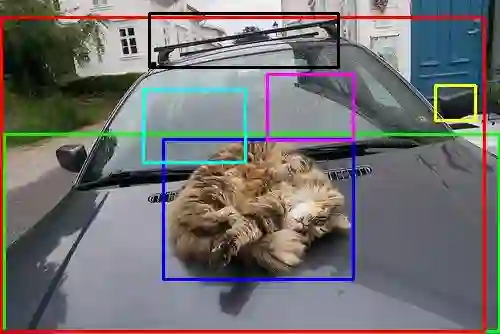

Relationships encode the interactions among individual instances and play a critical role in deep visual scene understanding. Because relationships in existing datasets are highly predictable from non-visual information, existing methods tend to fit the statistical bias rather than "learning" to "infer" the relationships from images. To encourage further development in visual relationships, we propose a novel method to automatically mine more valuable relationships by pruning visually-irrelevant ones. We construct a new scene-graph dataset named Visually-Relevant Relationships Dataset (VrR-VG) based on Visual Genome. Compared with existing datasets, the performance gap between learnable and statistical methods is more significant in VrR-VG, and frequency-based analysis no longer works. Moreover, we propose to learn a relationship-aware representation by jointly considering instances, attributes and relationships. By applying the relationship-aware features learned on VrR-VG, the performance of image captioning and visual question answering is systematically improved by a large margin, which demonstrates the gain of our dataset and the feature embedding schema. VrR-VG is available via http://vrr-vg.com/.
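To make the "statistical" side of that comparison concrete, the following is a minimal sketch of a frequency-based predictor, assuming it simply returns the most common predicate seen for each (subject category, object category) pair and never looks at the image. The function names and the toy triples are hypothetical illustrations, not part of VrR-VG or of the authors' code.

```python
from collections import Counter, defaultdict


def fit_frequency_baseline(triples):
    """Count how often each predicate occurs per (subject, object) category pair."""
    counts = defaultdict(Counter)
    for subj, pred, obj in triples:
        counts[(subj, obj)][pred] += 1
    return counts


def predict(counts, subj, obj, default=None):
    """Return the most frequent predicate for the pair, ignoring the image entirely."""
    pair_counts = counts.get((subj, obj))
    if not pair_counts:
        return default  # pair never seen in training
    return pair_counts.most_common(1)[0][0]


def baseline_accuracy(counts, triples):
    """Fraction of triples whose predicate matches the frequency-based guess."""
    if not triples:
        return 0.0
    hits = sum(predict(counts, s, o) == p for s, p, o in triples)
    return hits / len(triples)


# Toy (subject, predicate, object) annotations, invented for illustration only.
train = [
    ("man", "wearing", "shirt"),
    ("man", "wearing", "shirt"),
    ("man", "holding", "shirt"),
    ("cup", "on", "table"),
]
test = [
    ("man", "wearing", "shirt"),
    ("cup", "on", "table"),
    ("man", "holding", "shirt"),
]

counts = fit_frequency_baseline(train)
accuracy = baseline_accuracy(counts, test)
```

When a predictor of this kind scores close to a learned model, the labels are largely recoverable from category statistics alone, which is the bias the abstract describes. On a dataset where visually-irrelevant relationships have been pruned, such a baseline would be expected to fall well behind.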
