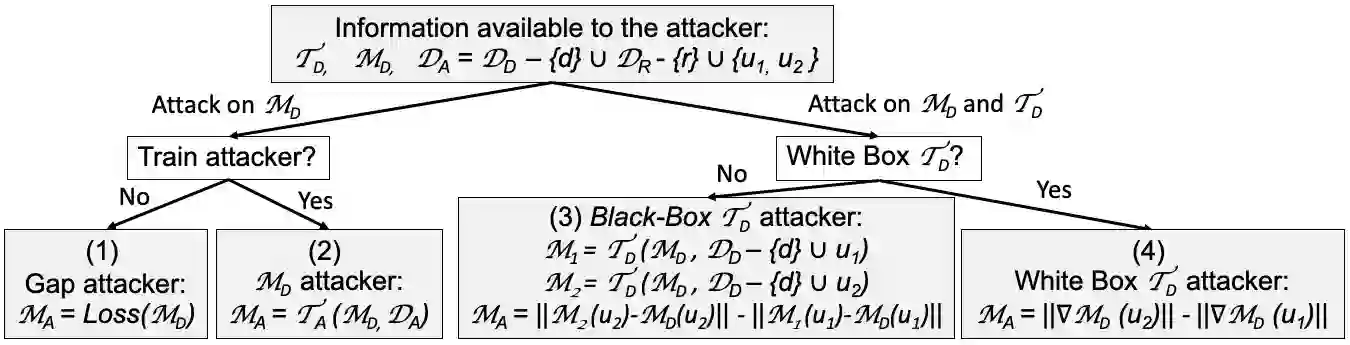

We address the problem of defending predictive models, such as machine learning classifiers (Defender models), against membership inference attacks, in both the black-box and white-box settings, when the trainer and the trained model are publicly released. The Defender aims to optimize a dual objective: utility and privacy. Both are evaluated with an external apparatus that includes an Attacker and an Evaluator. On the one hand, Reserved data, distributed similarly to the Defender training data, is used to evaluate utility; on the other hand, Reserved data, mixed with Defender training data, is used to evaluate robustness to membership inference attacks. In both cases, classification accuracy or error rate is used as the metric: utility is evaluated with the classification accuracy of the Defender model; privacy is evaluated with the membership prediction error of a so-called "Leave-Two-Unlabeled" (LTU) Attacker, which has access to all of the Defender and Reserved data except for the membership label of one sample from each. We prove that, under certain conditions, even a "naïve" LTU Attacker can achieve lower bounds on privacy loss with simple attack strategies, leading to concrete necessary conditions for protecting privacy, including preventing over-fitting and adding some amount of randomness. However, we also show that such a naïve LTU Attacker can fail to attack the privacy of models known to be vulnerable in the literature, demonstrating that knowledge must be complemented with strong attack strategies to turn the LTU Attacker into a powerful means of evaluating privacy. Our experiments on the QMNIST and CIFAR-10 datasets validate our theoretical results and confirm the roles of over-fitting prevention and randomness in the algorithms to protect against privacy attacks.
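The two metrics described above can be illustrated with a minimal sketch. It assumes the attacker simply compares per-sample losses and guesses that the lower-loss sample of each pair is the training member; this loss-comparison rule, and the names `utility` and `naive_ltu_error`, are illustrative assumptions rather than the attack strategies analysed in the paper.

```python
from itertools import product


def utility(predictions, labels):
    """Classification accuracy of the Defender model on Reserved data."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and of equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def naive_ltu_error(defender_losses, reserved_losses):
    """Membership prediction error of a hypothetical loss-comparison attacker.

    For every (Defender sample, Reserved sample) pair whose membership labels
    are hidden, the attacker guesses that the sample with the lower loss was
    in the Defender training set. A tie is scored as a coin flip (0.5 error).
    """
    if not defender_losses or not reserved_losses:
        raise ValueError("both loss lists must be non-empty")
    total_error = 0.0
    for d_loss, r_loss in product(defender_losses, reserved_losses):
        if d_loss > r_loss:
            total_error += 1.0   # attacker picks the Reserved sample: wrong
        elif d_loss == r_loss:
            total_error += 0.5   # indistinguishable pair: random guess
    return total_error / (len(defender_losses) * len(reserved_losses))
```

Under this sketch, an error close to 0.5 means the attacker does no better than chance on the held-out pair, while an error close to 0 means membership leaks, as would be expected when training losses are much lower than Reserved losses because of over-fitting.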
