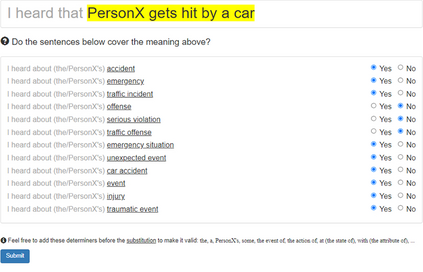

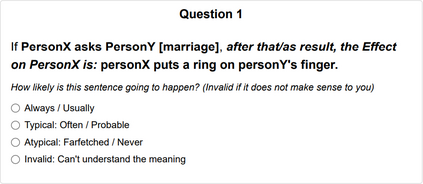

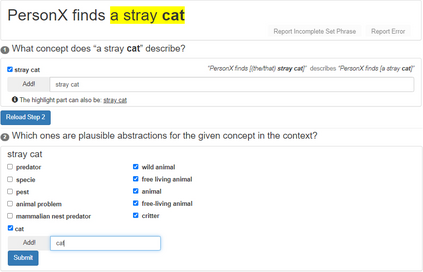

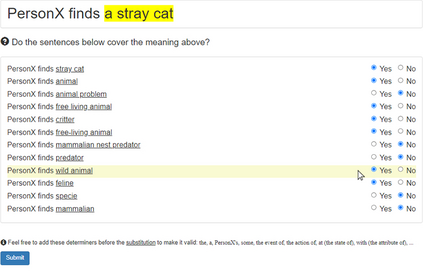

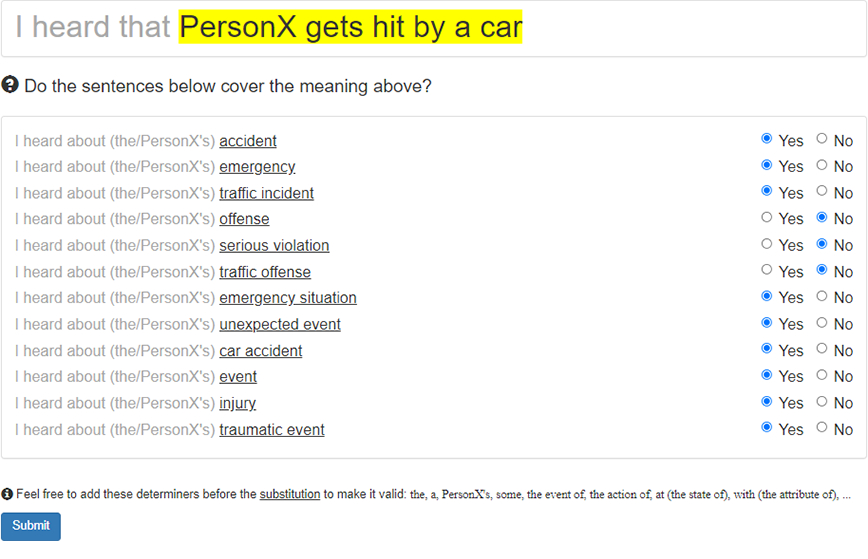

Conceptualization, or viewing entities and situations as instances of abstract concepts in mind and making inferences based on them, is a vital component of human intelligence for commonsense reasoning. Recent artificial intelligence has made progress in acquiring and modelling commonsense, owing to large neural language models and commonsense knowledge graphs (CKGs). However, conceptualization has yet to be thoroughly introduced, which leaves current approaches unable to cover knowledge about the countless diverse entities and situations in the real world. To address this problem, we thoroughly study the possible role of conceptualization in commonsense reasoning and formulate a framework that replicates human conceptual induction by acquiring abstract knowledge about abstract concepts. Aided by the taxonomy Probase, we develop tools for contextualized conceptualization on ATOMIC, a large-scale human-annotated CKG. We annotate a dataset for the validity of conceptualizations for ATOMIC at both the event and the triple level, develop a series of heuristic rules based on linguistic features, and train a set of neural models to generate and verify abstract knowledge. Based on these components, we build a pipeline to acquire abstract knowledge. A large abstract CKG upon ATOMIC is then induced, ready to be instantiated to infer about unseen entities or situations. Furthermore, experiments show that directly augmenting data with abstract triples helps commonsense modelling.
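
The generate, verify, and instantiate steps described above can be illustrated with a minimal Python sketch. It is not the authors' implementation and makes several loud assumptions: `TOY_TAXONOMY` is a hypothetical one-entry stand-in for Probase, candidate generation is plain word substitution rather than contextualized span matching, and the `verify` callable is a hypothetical placeholder for the heuristic rules and trained neural verifiers.

```python
from dataclasses import dataclass
from typing import Callable

# HYPOTHETICAL toy taxonomy standing in for Probase (instance -> candidate concepts).
# Real Probase is far larger and scores each isA pair; these entries are illustrative only.
TOY_TAXONOMY: dict[str, list[str]] = {
    "coffee": ["beverage", "stimulant"],
}


@dataclass(frozen=True)
class Triple:
    head: str      # ATOMIC-style head event, e.g. "PersonX drinks coffee"
    relation: str  # ATOMIC relation, e.g. "xEffect"
    tail: str      # inferred tail, e.g. "PersonX feels awake"


def conceptualize(triple: Triple, taxonomy: dict[str, list[str]]) -> list[Triple]:
    """Generate candidate abstract triples by swapping one head word for one of its concepts."""
    words = triple.head.split()
    candidates = []
    for i, word in enumerate(words):
        for concept in taxonomy.get(word, []):
            abstract_head = " ".join(words[:i] + [concept] + words[i + 1:])
            candidates.append(Triple(abstract_head, triple.relation, triple.tail))
    return candidates


def build_abstract_kg(
    triples: list[Triple],
    taxonomy: dict[str, list[str]],
    verify: Callable[[Triple], bool],
) -> list[Triple]:
    """Keep only the candidates the verifier accepts (stand-in for rules plus neural models)."""
    return [c for t in triples for c in conceptualize(t, taxonomy) if verify(c)]


def instantiate(abstract: Triple, concept: str, instance: str) -> Triple:
    """Infer about a new instance by substituting it for the concept word in the head."""
    words = [instance if w == concept else w for w in abstract.head.split()]
    return Triple(" ".join(words), abstract.relation, abstract.tail)


def toy_verify(candidate: Triple) -> bool:
    # HYPOTHETICAL verifier: accepts "stimulant" but rejects "beverage", because not every
    # beverage makes PersonX feel awake. The paper learns this decision from annotated data.
    return "stimulant" in candidate.head.split()


if __name__ == "__main__":
    seed = [Triple("PersonX drinks coffee", "xEffect", "PersonX feels awake")]
    abstract_kg = build_abstract_kg(seed, TOY_TAXONOMY, toy_verify)
    print(abstract_kg)
    print(instantiate(abstract_kg[0], "stimulant", "espresso"))
```

Here the seed triple yields two candidates, `PersonX drinks beverage` and `PersonX drinks stimulant`; the toy verifier keeps only the second, and instantiating it with the unseen entity `espresso` produces a triple about `PersonX drinks espresso` that never appeared in the seed data. Keeping verification as a pluggable callable mirrors the abstract's separation between generating and verifying abstract knowledge.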

翻译:概念化,或将实体和情况视为抽象概念的事例,在思想中视为抽象概念,并在此基础上作出推论,是人类情报中一个至关重要的组成部分,以进行常识推理。虽然最近的人工情报在获取和模拟常识方面取得了进展,这是大型神经语言模型和常识知识图(CKGs)所推算的,但概念化还有待彻底引入,使目前的做法无法有效地涵盖对现实世界无数不同实体和情况的认知。为了解决这个问题,我们彻底研究概念化在常识推理中可能发挥的作用,并制定一个框架,通过获取抽象概念的抽象知识复制人类概念感化。在分类学Probase的帮助下,我们开发了有关ATOMIC概念化背景化的工具,这是大规模人类附加注释的CKGs。我们注意到一个数据集在事件和三个层面都正确性,根据语言特征制定一系列的超自然规则,并训练一套神经模型,从而产生和核查抽象知识。根据这些组成部分,在获得抽象概念化概念学知识的过程中,我们开发了一种管道,在ATO中获取抽象知识,一个大规模的人类概念化概念化概念化工具,然后开始,然后在CMMISB号进行。