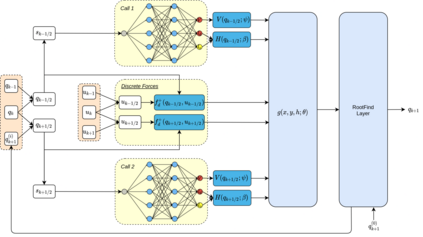

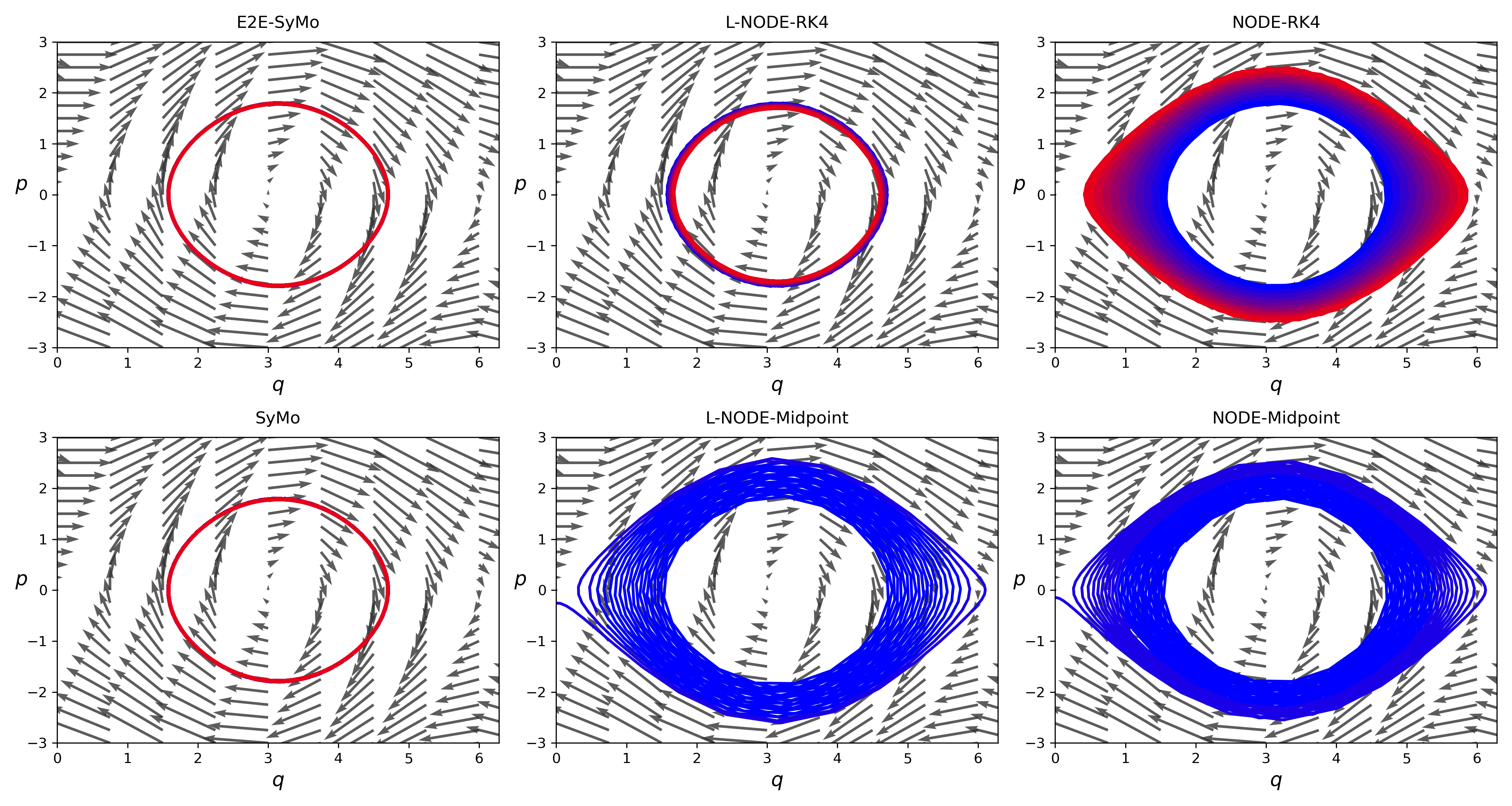

As deep learning gains attention from the research community for the prediction and control of real physical systems, learning meaningful representations is becoming more important than ever. It is of utmost importance that deep learning representations are coherent with physics. When learning from discrete data, this can be guaranteed by including some form of prior in the learning process; however, not all discretization priors preserve important structures from the physics. In this paper we introduce Symplectic Momentum Neural Networks (SyMo), models derived from a discrete formulation of mechanics for non-separable mechanical systems. This formulation constrains SyMos to preserve important geometric structures, such as momentum and the symplectic form, and to learn from limited data. Furthermore, it allows the dynamics to be learned using only poses as training data. We extend SyMos to include variational integrators within the learning framework by developing an implicit root-finding layer, which leads to End-to-End Symplectic Momentum Neural Networks (E2E-SyMo). Through experimental results on the pendulum and cartpole, we show that this combination not only allows these models to learn from limited data but also gives them the capability of preserving the symplectic form and yields better long-term behaviour.
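
To make the role of the variational integrator and the root-finding step concrete, the following is a minimal sketch and not the authors' implementation. It assumes a pendulum with a known analytic Lagrangian (in SyMo the Lagrangian terms would be learned by a network), a midpoint-rule discrete Lagrangian, and hypothetical parameter values `M`, `L`, `G`. The function names `del_residual`, `step`, `rollout`, and `discrete_energy` are illustrative only. The discrete Euler-Lagrange equations involve only three consecutive poses, which is why velocities are not needed as training data, and the next pose is defined implicitly, which is why a root-finder is required.

```python
import math

# Hypothetical pendulum parameters (assumed for illustration, not from the paper).
M, L, G = 1.0, 1.0, 9.81


def del_residual(q_prev, q_cur, q_next, h):
    """Discrete Euler-Lagrange residual D2 L_d(q_prev, q_cur) + D1 L_d(q_cur, q_next).

    Uses the midpoint discrete Lagrangian
    L_d(a, b) = h * L((a + b) / 2, (b - a) / h)
    with L(q, v) = 0.5 * M * L^2 * v^2 + M * G * L * cos(q).
    """
    inertia = M * L * L
    force_a = -M * G * L * math.sin(0.5 * (q_prev + q_cur))
    force_b = -M * G * L * math.sin(0.5 * (q_cur + q_next))
    d2 = 0.5 * h * force_a + inertia * (q_cur - q_prev) / h
    d1 = 0.5 * h * force_b - inertia * (q_next - q_cur) / h
    return d2 + d1


def step(q_prev, q_cur, h, tol=1e-12, max_iter=50):
    """Solve the implicit equation for q_next with Newton's method."""
    q_next = 2.0 * q_cur - q_prev  # constant-velocity initial guess
    for _ in range(max_iter):
        r = del_residual(q_prev, q_cur, q_next, h)
        if abs(r) < tol:
            break
        # Analytic derivative of the residual with respect to q_next.
        dr = (-0.25 * h * M * G * L * math.cos(0.5 * (q_cur + q_next))
              - M * L * L / h)
        q_next -= r / dr
    return q_next


def rollout(q0, q1, h, n_steps):
    """Generate a pose trajectory from two initial poses."""
    traj = [q0, q1]
    for _ in range(n_steps):
        traj.append(step(traj[-2], traj[-1], h))
    return traj


def discrete_energy(q_a, q_b, h):
    """Energy evaluated at the midpoint of two consecutive poses."""
    v = (q_b - q_a) / h
    return 0.5 * M * L * L * v * v - M * G * L * math.cos(0.5 * (q_a + q_b))


if __name__ == "__main__":
    h = 0.01
    traj = rollout(1.0, 1.0, h, 5000)
    energies = [discrete_energy(a, b, h) for a, b in zip(traj, traj[1:])]
    drift = max(abs(e - energies[0]) for e in energies)
    print(f"max energy deviation over {len(traj)} poses: {drift:.2e}")
```

In an end-to-end setting, a layer of this kind would be differentiated through the root-finder rather than through an explicit update, and the training loss could be the residual itself or the error on the predicted next pose; both choices are assumptions here rather than details taken from the abstract.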
