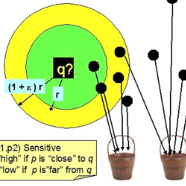

Recently, research on explainable recommender systems (RS) has drawn much attention from both academia and industry, resulting in a variety of explainable models. As a consequence, the approaches used to evaluate them vary from model to model, which makes it quite difficult to compare the explainability of different models. To achieve a standard way of evaluating recommendation explanations, we provide three benchmark datasets for EXplanaTion RAnking (denoted as EXTRA), on which explainability can be measured by ranking-oriented metrics. Constructing such datasets, however, presents great challenges. First, user-item-explanation interactions are rare in existing RS, so finding a substitute for them is a challenge. Our solution is to identify near-duplicate or even identical sentences in user reviews. This idea then leads to the second challenge, i.e., how to efficiently group the sentences in a dataset, since estimating the similarity between every pair of sentences has quadratic runtime complexity. To mitigate this issue, we provide a more efficient method based on Locality Sensitive Hashing (LSH) that can detect near-duplicates in sub-linear time for a given query. Moreover, we plan to make our code publicly available, to allow other researchers to create their own datasets.
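To make the LSH idea concrete, here is a minimal sketch of near-duplicate sentence grouping. It assumes MinHash with banding, which is one standard LSH family for Jaccard similarity over character 3-gram shingles. The abstract does not say which LSH variant, shingling scheme, or parameters EXTRA uses. The names `shingles`, `MinHashLSH`, and `group_sentences`, the 0.9 Jaccard threshold, and the union-find grouping step are all hypothetical choices made for illustration.

```python
import random
import re
import zlib
from collections import defaultdict

# Hypothetical sketch: MinHash + banding is one standard LSH family for Jaccard
# similarity; the LSH variant and parameters used for EXTRA are not specified here.
PRIME = (1 << 61) - 1  # Mersenne prime used for universal hashing


def shingles(sentence: str, k: int = 3) -> set[str]:
    """Character k-grams of a sentence after lower-casing and stripping punctuation."""
    text = re.sub(r"[^\w\s]", " ", sentence.lower())
    text = re.sub(r"\s+", " ", text).strip()
    if len(text) <= k:
        return {text}
    return {text[i:i + k] for i in range(len(text) - k + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b)


class MinHashLSH:
    """Index of MinHash signatures split into bands; each band is hashed to a bucket."""

    def __init__(self, num_perm: int = 128, bands: int = 16, seed: int = 1):
        assert num_perm % bands == 0, "num_perm must be divisible by bands"
        self.bands, self.rows = bands, num_perm // bands
        rng = random.Random(seed)
        # One (a, b) pair per simulated permutation: h(x) = (a*x + b) mod PRIME
        self.params = [(rng.randrange(1, PRIME), rng.randrange(PRIME))
                       for _ in range(num_perm)]
        self.buckets: dict[tuple, list[int]] = defaultdict(list)

    def _signature(self, sh: set[str]) -> tuple[int, ...]:
        ids = [zlib.crc32(s.encode("utf-8")) for s in sh]  # deterministic shingle ids
        return tuple(min((a * x + b) % PRIME for x in ids) for a, b in self.params)

    def _band_keys(self, sig: tuple[int, ...]):
        for i in range(self.bands):
            yield (i, sig[i * self.rows:(i + 1) * self.rows])

    def insert(self, sid: int, sh: set[str]) -> None:
        for key in self._band_keys(self._signature(sh)):
            self.buckets[key].append(sid)

    def query(self, sh: set[str]) -> set[int]:
        """Candidate ids: only the query's own buckets are scanned, not the whole corpus."""
        return {sid for key in self._band_keys(self._signature(sh))
                for sid in self.buckets.get(key, ())}


def group_sentences(sentences: list[str], threshold: float = 0.9,
                    **lsh_kwargs) -> list[list[str]]:
    """Cluster near-duplicate sentences: LSH proposes pairs, exact Jaccard confirms them."""
    sh = [shingles(s) for s in sentences]
    lsh = MinHashLSH(**lsh_kwargs)
    parent = list(range(len(sentences)))  # union-find forest

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, s in enumerate(sh):
        for j in lsh.query(s):  # only earlier sentences are indexed at this point
            if jaccard(s, sh[j]) >= threshold:
                parent[find(i)] = find(j)
        lsh.insert(i, s)

    groups: dict[int, list[str]] = defaultdict(list)
    for i, sentence in enumerate(sentences):
        groups[find(i)].append(sentence)
    return list(groups.values())


reviews = ["The battery life is great.", "the battery life is GREAT!", "The screen is too dim."]
print(group_sentences(reviews))
# → [['The battery life is great.', 'the battery life is GREAT!'], ['The screen is too dim.']]
```

With `bands` = b and `rows` = r, two sentences whose shingle sets have Jaccard similarity s become candidates with probability 1 − (1 − s^r)^b. This probability rises steeply around s ≈ (1/b)^(1/r), which is about 0.71 for the defaults b = 16, r = 8. A query hashes into only b buckets instead of being compared against every indexed sentence. The exact Jaccard check then discards false-positive candidates, and union-find merges chains of near-duplicates into one group.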
