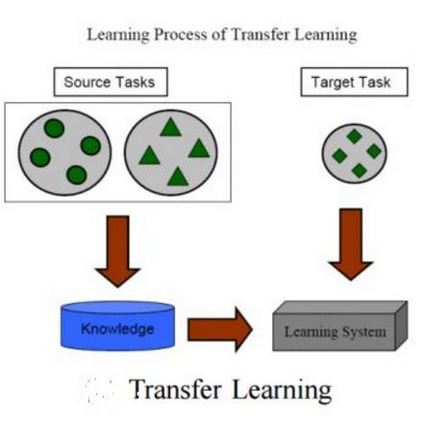

A fundamental problem in manifold learning is to approximate a functional relationship from data drawn randomly from a probability distribution supported on a low-dimensional sub-manifold of a high-dimensional ambient Euclidean space. The manifold is essentially defined by the data set itself and is typically designed so that the data is dense on the manifold in some sense. The notion of a data space is an abstraction of a manifold that encapsulates the essential properties that allow for function approximation. The problem of transfer learning (meta-learning) is to use the learning of a function on one data set to learn a similar function on a new data set. In terms of function approximation, this means lifting a function on one data space (the base data space) to another (the target data space). This viewpoint enables us to connect some inverse problems in applied mathematics (such as the inverse Radon transform) with transfer learning. In this paper, we examine the question of such lifting when the data is assumed to be known only on a part of the base data space. We are interested in determining subsets of the target data space on which the lifting can be defined, and in how the local smoothness of the function and that of its lifting are related.
