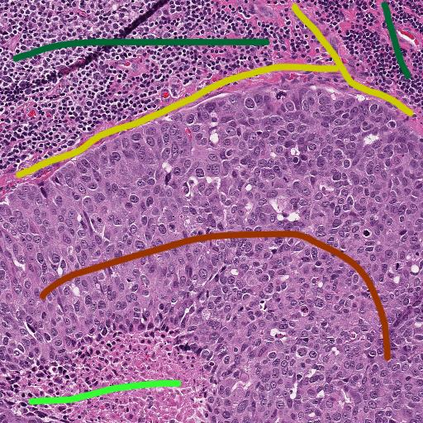

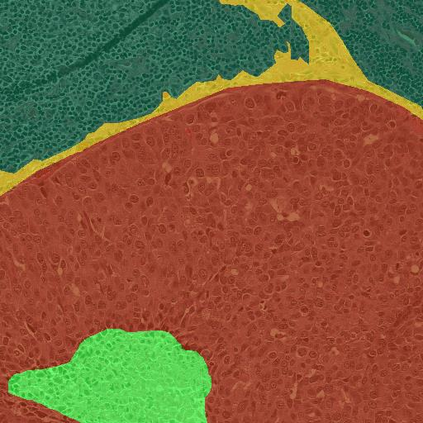

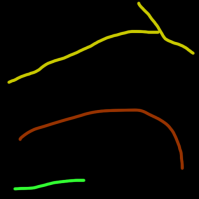

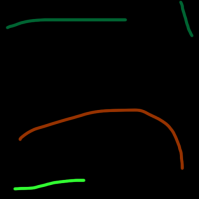

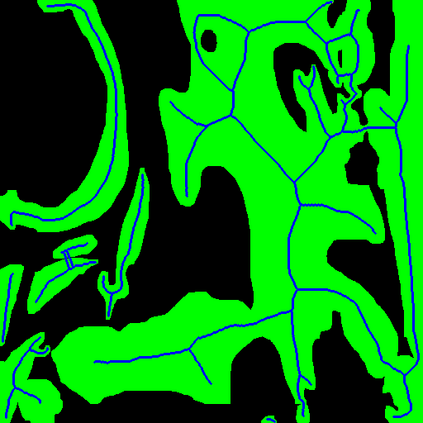

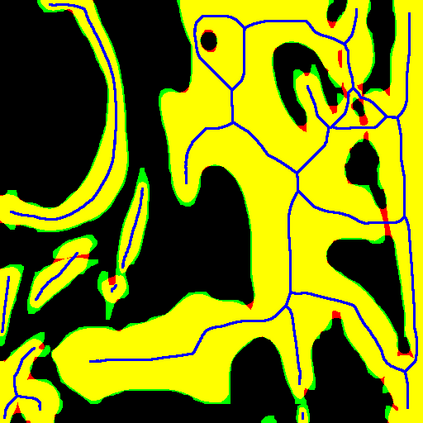

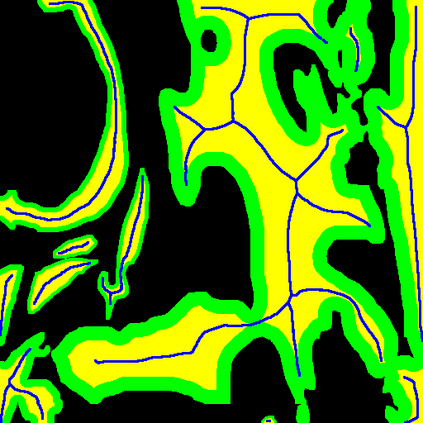

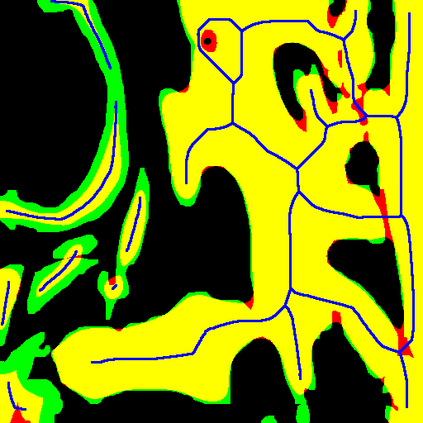

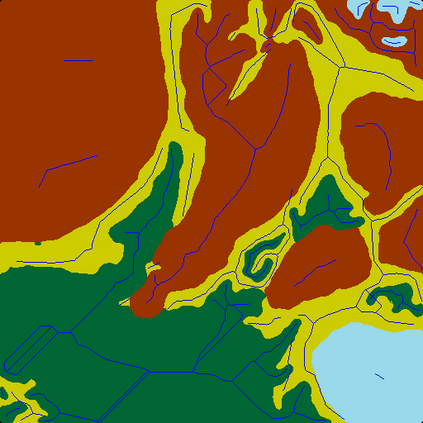

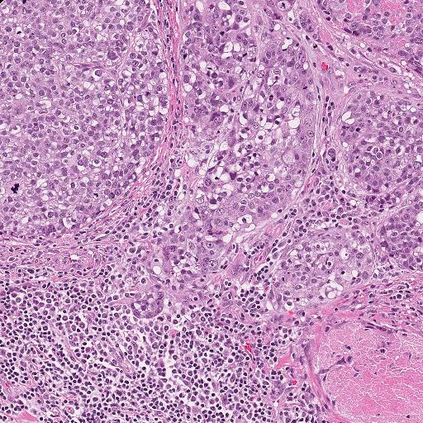

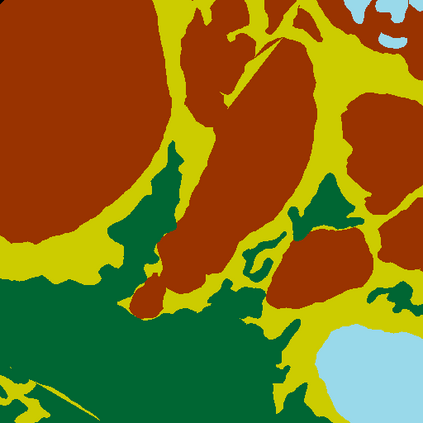

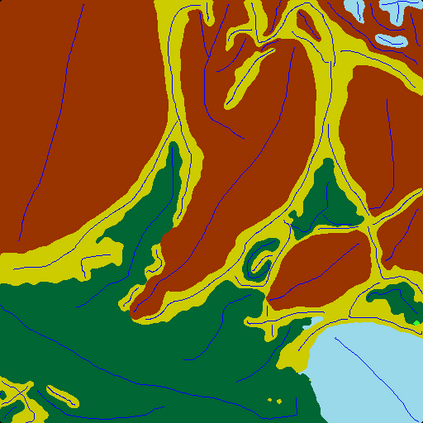

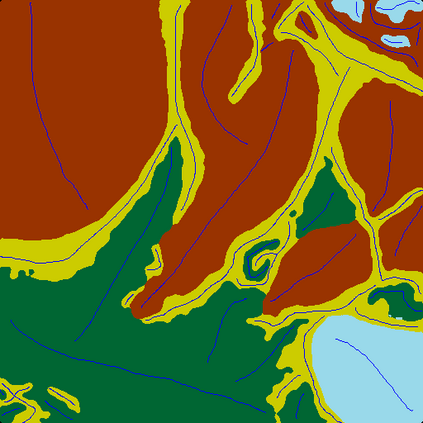

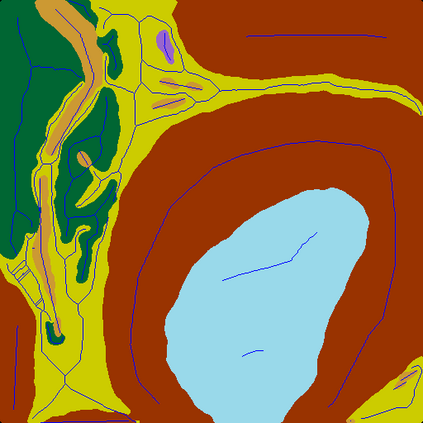

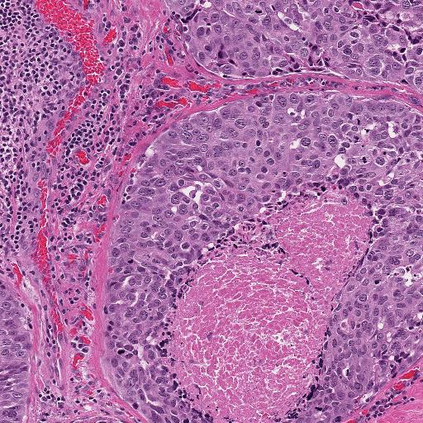

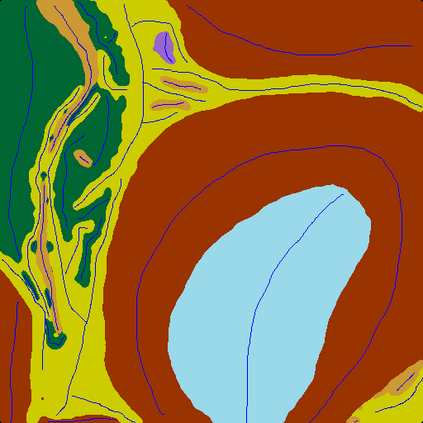

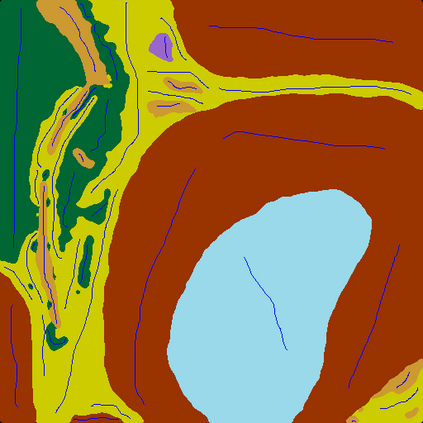

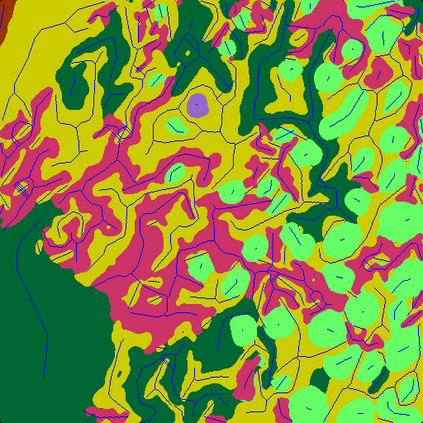

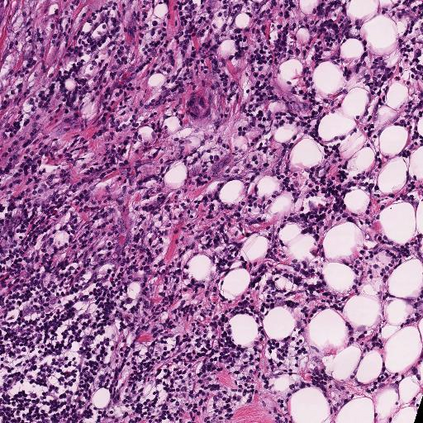

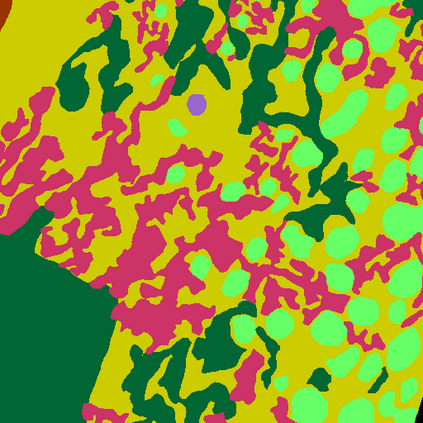

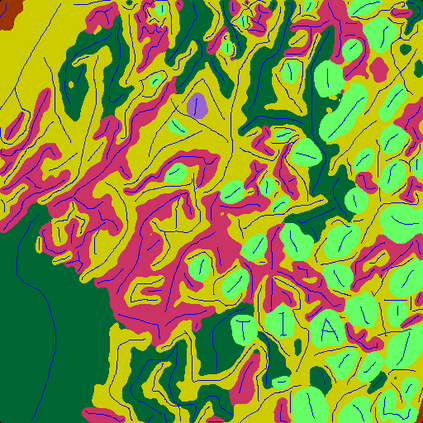

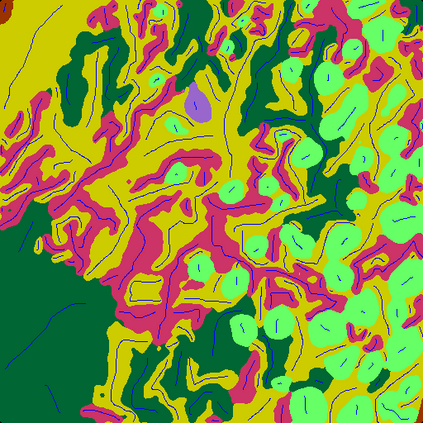

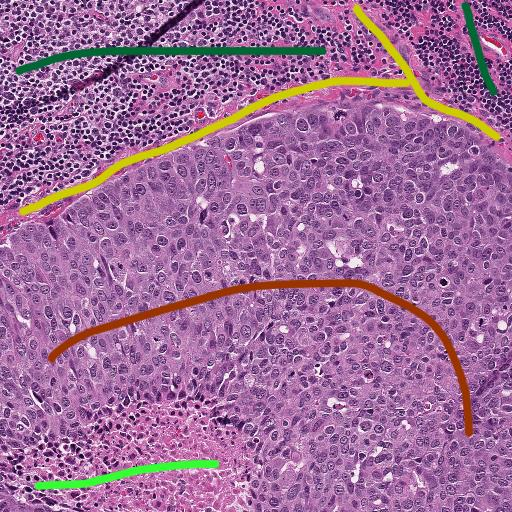

From the simple measurement of tissue attributes in pathology workflows to the design of explainable diagnostic or prognostic AI tools, accurate semantic segmentation of tissue regions in histology images is a prerequisite. However, manually delineating tissue regions is a laborious, time-consuming and costly task that requires expert knowledge. At the same time, state-of-the-art deep learning models for automatic semantic segmentation require large amounts of annotated training data, and only a limited number of histology images with tissue-region annotations are publicly available. To address this problem in computational pathology projects and to collect large-scale region annotations efficiently, we propose an efficient interactive segmentation network that needs minimal user input to accurately annotate different tissue types in a histology image. The user only has to draw a simple squiggle inside each region of interest, and the model uses this squiggle as its guiding signal. To cope with the complex appearance and amorphous geometry of tissue regions, we introduce several automatic, minimalistic guiding-signal generation techniques that make the model robust to variation in user input. Experiments on a dataset of breast cancer images show that our method not only speeds up the interactive annotation process but also outperforms existing automatic and interactive region segmentation models.
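The abstract does not say how the automatic guiding signals are generated. As a minimal sketch, assuming binary region masks stored as nested lists, one way to simulate a user squiggle during training is to run a short random walk confined to an eroded copy of the ground-truth mask. Erosion keeps the stroke away from the region boundary, roughly mimicking a user who draws well inside the region. The function names `erode` and `simulate_squiggle`, their parameters, and the random-walk strategy are hypothetical illustrations, not the authors' technique.

```python
import random
from typing import List

Mask = List[List[int]]  # binary mask: 1 = inside region, 0 = outside


def erode(mask: Mask, radius: int = 1) -> Mask:
    """Binary erosion with a square (2r+1)x(2r+1) structuring element.

    Pixels outside the image are treated as background.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if all(
                0 <= y + dy < h and 0 <= x + dx < w and mask[y + dy][x + dx]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ):
                out[y][x] = 1
    return out


def simulate_squiggle(mask: Mask, steps: int = 50, erosion: int = 1, seed: int = 0) -> Mask:
    """Hypothetical guiding-signal generator: a random-walk stroke inside the eroded region.

    Returns a binary guidance map with the same shape as `mask`.
    """
    rng = random.Random(seed)
    h, w = len(mask), len(mask[0])
    inner = erode(mask, erosion)
    candidates = [(y, x) for y, row in enumerate(inner) for x, v in enumerate(row) if v]
    if not candidates:
        # Region too small or thin to survive erosion: walk inside the raw mask instead.
        inner = mask
        candidates = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    guide = [[0] * w for _ in range(h)]
    if not candidates:
        return guide  # empty mask: no stroke can be drawn
    y, x = rng.choice(candidates)
    guide[y][x] = 1
    for _ in range(steps):
        # Candidate moves: the 8-connected neighbours that stay inside the allowed area.
        moves = [
            (y + dy, x + dx)
            for dy in (-1, 0, 1)
            for dx in (-1, 0, 1)
            if (dy or dx) and 0 <= y + dy < h and 0 <= x + dx < w and inner[y + dy][x + dx]
        ]
        if not moves:
            break
        y, x = rng.choice(moves)
        guide[y][x] = 1
    return guide


if __name__ == "__main__":
    # A 10x10 image containing one square tissue region (rows/cols 2..7).
    region = [[1 if 2 <= x <= 7 and 2 <= y <= 7 else 0 for x in range(10)] for y in range(10)]
    stroke = simulate_squiggle(region, steps=20, erosion=1, seed=42)
    for row in stroke:
        print("".join("#" if v else "." for v in row))
```

In a training loop, such a guidance map would typically be concatenated to the image as an extra input channel. Varying `steps`, `erosion` and `seed` across samples exposes the model to different stroke lengths and placements, which is one plausible way to encourage robustness to variation in user input.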
