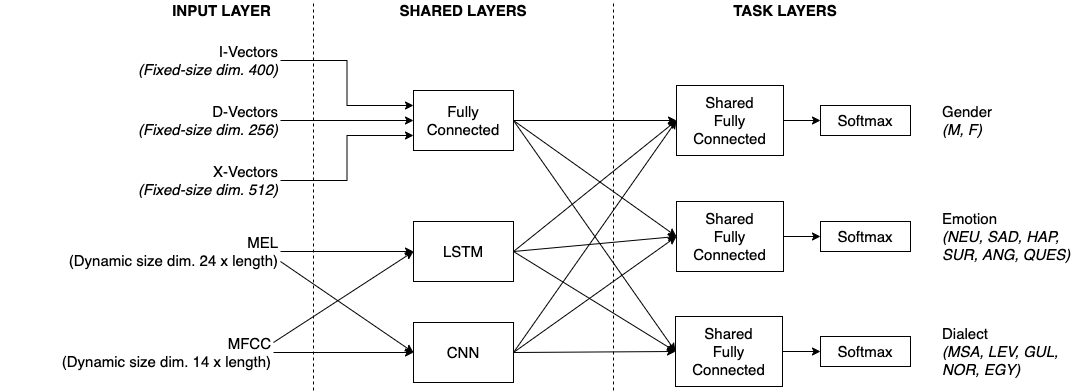

This paper proposes a novel approach to the automatic estimation of three speaker traits from Arabic speech: gender, emotion, and dialect. Multi-task learning (MTL), which has shown promising results on various text classification tasks, is applied here to Arabic speech classification tasks. The dataset was assembled from six publicly available datasets. First, the datasets were edited and carefully divided into train, development, and test sets (which are publicly available), and a benchmark was set for each task and dataset. Then, three different networks were explored: Long Short-Term Memory (LSTM), Convolutional Neural Network (CNN), and Fully-Connected Neural Network (FCNN), on five different types of features: two raw features (MFCC and MEL) and three pre-trained vectors (i-vectors, d-vectors, and x-vectors). The LSTM and CNN networks were trained on the raw features (MFCC and MEL), whereas the FCNN was trained on the pre-trained vectors; the hyper-parameters of all networks were varied to obtain the best results for each dataset and task. MTL was evaluated against the single-task learning (STL) approach for the three tasks and six datasets, and MTL combined with pre-trained vectors almost always outperformed STL. All the data and pre-trained models used in this paper are publicly available.
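To make the MTL setup concrete, here is a minimal NumPy sketch of a fully-connected network with one shared layer and three task heads (gender, emotion, dialect) applied to a fixed-size pre-trained vector. It is only an illustration of the general idea, not the paper's architecture: the embedding size, hidden width, class counts, random inputs, and the unweighted sum of per-task losses are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; none of these values come from the paper.
EMB_DIM = 512   # assumed size of a pre-trained speaker vector (e.g. an x-vector)
HIDDEN = 128    # assumed width of the shared layer
N_CLASSES = {"gender": 2, "emotion": 4, "dialect": 5}  # assumed label counts


def init_params():
    """Create one shared layer plus one linear head per task."""
    params = {
        "shared_W": rng.normal(0.0, 0.05, (EMB_DIM, HIDDEN)),
        "shared_b": np.zeros(HIDDEN),
    }
    for task, k in N_CLASSES.items():
        params[f"{task}_W"] = rng.normal(0.0, 0.05, (HIDDEN, k))
        params[f"{task}_b"] = np.zeros(k)
    return params


def softmax(z):
    """Row-wise softmax with max subtraction for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(params, x):
    """Shared ReLU layer followed by a softmax head for each task."""
    h = np.maximum(0.0, x @ params["shared_W"] + params["shared_b"])
    return {
        task: softmax(h @ params[f"{task}_W"] + params[f"{task}_b"])
        for task in N_CLASSES
    }


def mtl_loss(probs, labels):
    """Unweighted sum of per-task mean cross-entropy losses (an assumption)."""
    total = 0.0
    for task, p in probs.items():
        y = labels[task]
        total += -np.mean(np.log(p[np.arange(len(y)), y] + 1e-12))
    return total


# Toy batch of 8 random "embeddings" with random labels for each task.
params = init_params()
x = rng.normal(size=(8, EMB_DIM))
labels = {task: rng.integers(0, k, size=8) for task, k in N_CLASSES.items()}
probs = forward(params, x)
loss = mtl_loss(probs, labels)
```

An STL baseline in this sketch would keep a single head and its own copy of the shared layer per task; the MTL variant differs only in that all three heads read the same hidden representation and their losses are added before any gradient step.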
