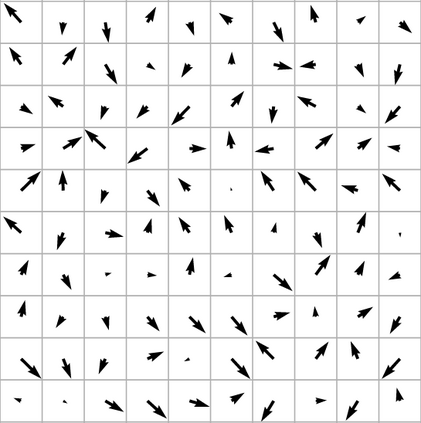

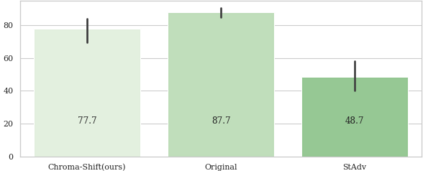

Deep neural networks have been shown to be vulnerable to various kinds of adversarial perturbations. In addition to the widely studied additive-noise perturbations, adversarial examples can also be created by applying a per-pixel spatial drift to input images. While spatial-transformation-based adversarial examples look more natural to human observers because they contain no additive noise, they still exhibit visible distortions caused by the spatial transformations. Human vision is more sensitive to distortions in luminance than to those in the chrominance channels, which is one of the main ideas behind lossy visual multimedia compression standards. We therefore propose a spatial-transformation-based perturbation method that creates adversarial examples by modifying only the color components of an input image. While achieving competitive fooling rates on the CIFAR-10 and NIPS2017 Adversarial Learning Challenge datasets, examples created with the proposed method score better on various perceptual quality metrics. Human visual perception studies validate that the examples look more natural and are often indistinguishable from their original counterparts.
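To make the core idea concrete, the following is a minimal sketch of applying a per-pixel spatial drift to the chrominance planes only, leaving luminance untouched. It is not the authors' implementation: the abstract does not name a color space, interpolation scheme, or border handling, so the BT.601 full-range YCbCr conversion, bilinear sampling, and border clamping used here are assumptions, and the names `warp_chroma`, `bilinear`, and `luma` are hypothetical. The flow field is taken as given; the adversarial optimization that would produce it is not shown.

```python
# Hypothetical sketch: warp only the chroma planes of an RGB image.
# Assumed (not stated in the source): BT.601 full-range YCbCr, bilinear
# sampling, border clamping, and a flow field supplied by the caller.

def luma(r, g, b):
    """BT.601 luminance of an RGB triple."""
    return 0.299 * r + 0.587 * g + 0.114 * b

def rgb_to_ycbcr(r, g, b):
    y = luma(r, g, b)
    return y, (b - y) / 1.772 + 128.0, (r - y) / 1.402 + 128.0

def ycbcr_to_rgb(y, cb, cr):
    r = y + 1.402 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    g = (y - 0.299 * r - 0.114 * b) / 0.587  # solve the luma equation for g
    return r, g, b

def bilinear(plane, x, y):
    """Sample a 2D list at fractional (x, y), clamping to the border."""
    h, w = len(plane), len(plane[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = plane[y0][x0] * (1 - fx) + plane[y0][x1] * fx
    bottom = plane[y1][x0] * (1 - fx) + plane[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def warp_chroma(image, flow):
    """image: H x W rows of (r, g, b) floats in [0, 255].
    flow: H x W rows of (dx, dy) sampling offsets in pixels.
    Returns a new image whose Cb/Cr are resampled and whose Y is unchanged."""
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    cb = [[p[1] for p in row] for row in ycc]
    cr = [[p[2] for p in row] for row in ycc]
    out = []
    for i, row in enumerate(ycc):
        out_row = []
        for j, (y, _, _) in enumerate(row):
            dx, dy = flow[i][j]
            new_cb = bilinear(cb, j + dx, i + dy)
            new_cr = bilinear(cr, j + dx, i + dy)
            # Values are not clipped, so they may leave [0, 255].
            out_row.append(ycbcr_to_rgb(y, new_cb, new_cr))
        out.append(out_row)
    return out
```

In this sketch a zero flow field returns the input unchanged, and any other flow changes only Cb and Cr. Because the output is not clipped, some pixels can fall outside the displayable range; clipping them would alter luminance slightly, which a practical implementation would need to handle.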
