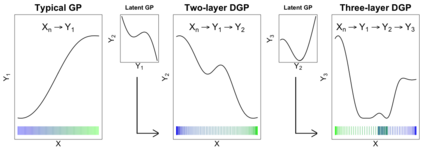

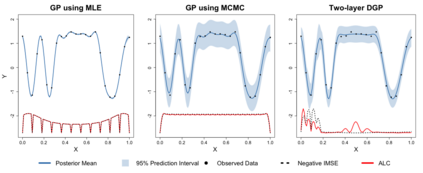

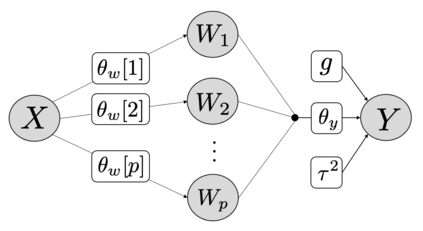

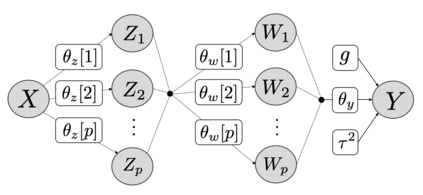

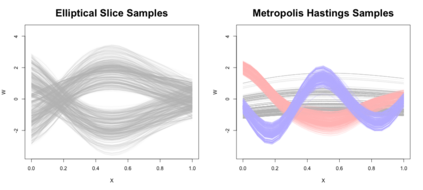

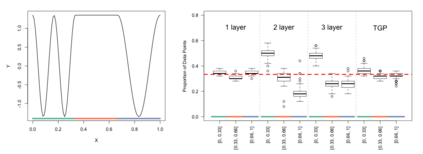

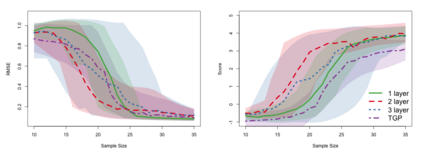

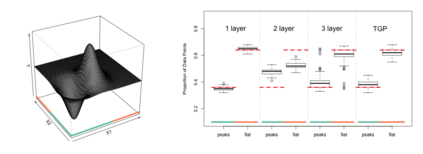

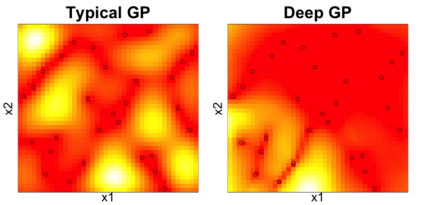

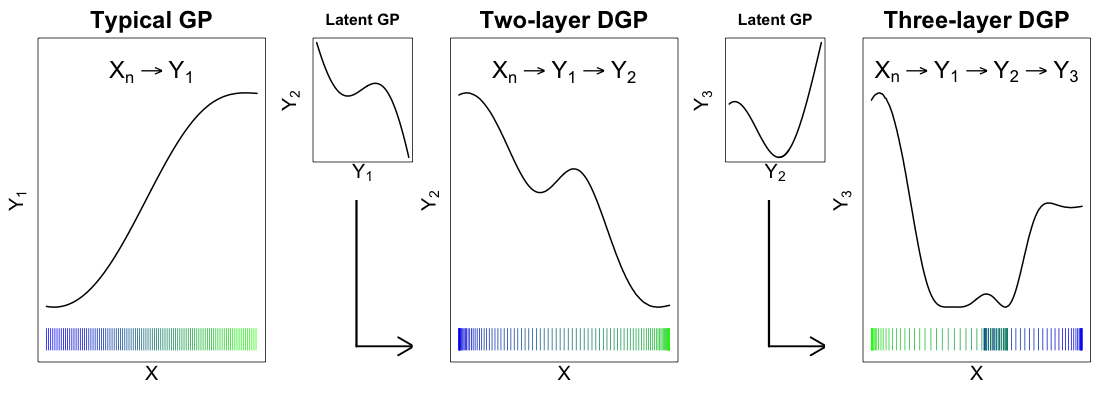

Deep Gaussian processes (DGPs) are increasingly popular as predictive models in machine learning (ML) for their non-stationary flexibility and ability to cope with abrupt regime changes in training data. Here we explore DGPs as surrogates for computer simulation experiments whose response surfaces exhibit similar characteristics. In particular, we transport a DGP's automatic warping of the input space and full uncertainty quantification (UQ), via a novel elliptical slice sampling (ESS) Bayesian posterior inferential scheme, through to active learning (AL) strategies that distribute runs non-uniformly in the input space, something an ordinary (stationary) GP cannot do. Building up the design sequentially in this way allows smaller training sets, which both limits expensive evaluation of the simulator code and mitigates the cubic cost of DGP inference. When training data sizes are kept small through careful acquisition, and with a parsimonious layout of latent layers, the framework can be both effective and computationally tractable. Our methods are illustrated on simulated data and two real computer experiments of varying input dimensionality. We provide an open source implementation in the "deepgp" package on CRAN.
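For readers unfamiliar with elliptical slice sampling, the following is a minimal, generic sketch of a single ESS update for a target with a zero-mean Gaussian prior. It is not the inferential scheme of the paper and not code from the "deepgp" package. The names `elliptical_slice_step` and `run_chain`, the identity prior covariance, and the toy Gaussian likelihood are all assumptions chosen for illustration. In a GP setting the prior draw would instead come from a multivariate normal with the kernel covariance matrix.

```python
import math
import random


def elliptical_slice_step(f, prior_draw, log_lik, rng):
    """One generic ESS update; assumes a zero-mean Gaussian prior on f."""
    nu = prior_draw(rng)  # auxiliary draw from the same prior as f
    # Slice height: log-likelihood of the current state plus log of a
    # uniform draw on (0, 1); resample in the rare case the draw is 0.
    u = rng.random()
    while u == 0.0:
        u = rng.random()
    log_y = log_lik(f) + math.log(u)
    # Initial angle and bracket on the ellipse through f and nu.
    theta = rng.uniform(0.0, 2.0 * math.pi)
    lo, hi = theta - 2.0 * math.pi, theta
    while True:
        proposal = [
            fi * math.cos(theta) + ni * math.sin(theta)
            for fi, ni in zip(f, nu)
        ]
        if log_lik(proposal) > log_y:
            return proposal  # accepted: proposal lies on the slice
        # Rejected: shrink the bracket toward theta = 0 (the current state).
        if theta < 0.0:
            lo = theta
        else:
            hi = theta
        theta = rng.uniform(lo, hi)


def run_chain(n_iter, y, noise_var, seed=0):
    """Toy model (an assumption): f ~ N(0, I), y_i | f_i ~ N(f_i, noise_var)."""
    rng = random.Random(seed)

    def prior_draw(r):
        return [r.gauss(0.0, 1.0) for _ in y]

    def log_lik(f):
        return -0.5 * sum((yi - fi) ** 2 for yi, fi in zip(y, f)) / noise_var

    f = [0.0] * len(y)
    samples = []
    for _ in range(n_iter):
        f = elliptical_slice_step(f, prior_draw, log_lik, rng)
        samples.append(f)
    return samples


if __name__ == "__main__":
    draws = run_chain(4000, [1.0, -2.0], 0.25, seed=1)
    means = [sum(d[j] for d in draws) / len(draws) for j in range(2)]
    print("posterior mean estimates:", means)
```

For this toy model the posterior mean of each coordinate is available in closed form as `y_i / (1 + noise_var)`, which gives a simple check that the chain targets the right distribution. The update has no step size or other tuning parameter, and every call returns a new state because the bracket shrinks toward the current one.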
