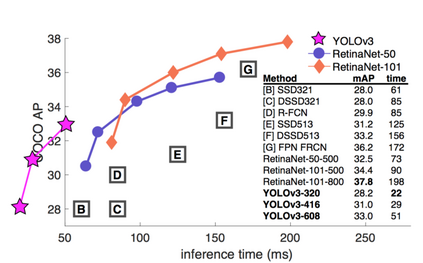

Recognizing actions from a video feed is a challenging task to automate, especially so on older hardware. There are two aims for this project: one is to recognize an action from the front-facing camera on an Android phone, the other is to support as many phones and Android versions as possible. This limits us to using models that are small enough to run on mobile phones with and without GPUs, and only using the camera feed to recognize the action. In this paper we compare performance of the YOLO architecture across devices (with and without dedicated GPUs) using models trained on a custom dataset. We also discuss limitations in recognizing faces and actions from video on limited hardware.

翻译:通过视频传输确认行动是自动化的艰巨任务,特别是老硬件。该项目有两个目的:一是识别安道尔手机上前视相机的动作,另一是支持尽可能多的手机和安达尔多尔版本。这限制了我们使用那些小到可以用移动电话运行的无GPU和无GPU的移动电话的模型,而仅使用相机反馈来识别动作。在本文中,我们使用经过定制数据集培训的模型比较(有或没有专用GPUs)各设备YOLO结构的性能。我们还讨论了在识别面部和有限硬件视频行动方面的局限性。