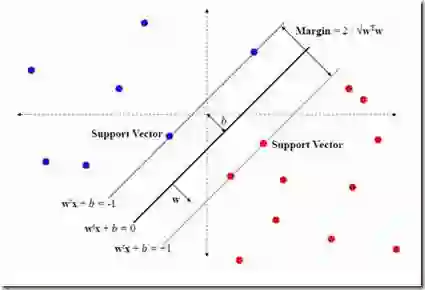

Kernel logistic regression (KLR) is a widely used supervised learning method for binary and multi-class classification that provides estimates of the conditional probabilities of class membership for the data points. Unlike other kernel methods such as Support Vector Machines (SVMs), KLR models are generally not sparse. Previous attempts to address the lack of sparsity in KLR include a heuristic method known as the Import Vector Machine (IVM) and ad hoc regularizations such as the $\ell_{1/2}$-based one. Achieving a good trade-off between prediction accuracy and sparsity remains a challenging issue, with potentially significant impact from the application point of view. In this work, we revisit binary KLR and propose an extension of the training formulation of Keerthi et al. that induces sparsity in the trained model while maintaining good testing accuracy. To solve the dual of this formulation efficiently, we devise a decomposition algorithm of Sequential Minimal Optimization (SMO) type that exploits second-order information, and we establish its global convergence. Numerical experiments on 12 datasets from the literature show that the proposed binary KLR approach achieves a competitive trade-off between accuracy and sparsity with respect to IVM, $\ell_{1/2}$-based regularization for KLR, and SVM, while retaining the advantage of providing informative estimates of the class membership probabilities.
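The lack of sparsity in standard KLR can be seen directly in its dual. As a minimal sketch, we *assume* the standard (non-sparse) binary KLR dual in the style of Keerthi et al., with labels $y_i \in \{-1,+1\}$, kernel matrix $K$ and regularization parameter $C$:

$$\min_{\alpha}\ \tfrac12 \sum_{i,j} \alpha_i \alpha_j y_i y_j K_{ij} + \sum_i \left[\alpha_i \log \alpha_i + (C-\alpha_i)\log(C-\alpha_i)\right] \quad \text{s.t.}\quad \sum_i y_i \alpha_i = 0,\ \ 0 \le \alpha_i \le C.$$

Because the entropy term has infinite slope at both bounds, every optimal $\alpha_i$ lies strictly inside $(0, C)$, so every training point contributes to the model, whereas in the SVM dual many $\alpha_i$ are exactly zero. The Python code below is a hypothetical SMO-type solver for this assumed dual: it selects the maximal violating pair using first-order information and solves each two-variable subproblem with a safeguarded Newton step, which is where second-order information enters. It does not implement the sparsity-inducing extension, working-set rule, or convergence analysis of the method described in this work; `rbf_kernel`, `train_klr_dual` and `predict_proba` are illustrative names, and the RBF kernel is an arbitrary choice.

```python
import math


def rbf_kernel(x, z, gamma=1.0):
    """Gaussian (RBF) kernel exp(-gamma * ||x - z||^2); an arbitrary kernel choice."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def train_klr_dual(X, y, C=1.0, gamma=1.0, tol=1e-6, max_iter=10_000):
    """Hypothetical SMO-type sketch for the (assumed) standard binary KLR dual

        min_a 0.5 * sum_ij a_i a_j y_i y_j K_ij
              + sum_i [a_i log a_i + (C - a_i) log(C - a_i)]
        s.t.  sum_i y_i a_i = 0,  0 < a_i < C,

    with labels y_i in {-1, +1} (both classes must be present). Returns (alpha, b).
    """
    n = len(X)
    K = [[rbf_kernel(X[p], X[q], gamma) for q in range(n)] for p in range(n)]

    # Strictly feasible start: equal total weight per class keeps sum_i y_i a_i = 0.
    n_pos = sum(1 for yi in y if yi == 1)
    n_neg = n - n_pos
    m = min(n_pos, n_neg)
    alpha = [0.5 * C * m / (n_pos if yi == 1 else n_neg) for yi in y]

    # F[k] = sum_q alpha_q y_q K_kq (= w . phi(x_k)), updated incrementally.
    F = [sum(alpha[q] * y[q] * K[k][q] for q in range(n)) for k in range(n)]

    def v(k, a, f):
        # y_k times the k-th dual gradient component; at the optimum all v_k equal -b.
        return f + y[k] * (math.log(a) - math.log(C - a))

    for _ in range(max_iter):
        vals = [v(k, alpha[k], F[k]) for k in range(n)]
        i = min(range(n), key=vals.__getitem__)
        j = max(range(n), key=vals.__getitem__)
        if vals[j] - vals[i] < tol:  # maximal violating pair is within tolerance
            break

        # Search direction: d_i = y_i, d_j = -y_j, which keeps sum_k y_k a_k fixed.
        kii, kjj, kij = K[i][i], K[j][j], K[i][j]

        def dphi(t):
            ai, aj = alpha[i] + y[i] * t, alpha[j] - y[j] * t
            if not (0.0 < ai < C and 0.0 < aj < C):
                return math.inf  # left the box: treat as an overshoot
            return v(i, ai, F[i] + t * (kii - kij)) - v(j, aj, F[j] + t * (kij - kjj))

        def d2phi(t):
            ai, aj = alpha[i] + y[i] * t, alpha[j] - y[j] * t
            return kii + kjj - 2.0 * kij + C / (ai * (C - ai)) + C / (aj * (C - aj))

        # Largest step keeping both variables strictly inside (0, C).
        hi = min(C - alpha[i] if y[i] == 1 else alpha[i],
                 alpha[j] if y[j] == 1 else C - alpha[j])
        lo, t = 0.0, 0.0
        for _ in range(60):  # safeguarded Newton (bisection fallback) on phi'(t) = 0
            g = dphi(t)
            if abs(g) < 1e-12:
                break
            if g < 0:
                lo = t
            else:
                hi = t
            step = t - g / d2phi(t) if math.isfinite(g) else hi
            t = step if lo < step < hi else 0.5 * (lo + hi)

        for k in range(n):
            F[k] += t * (K[k][i] - K[k][j])
        alpha[i], alpha[j] = alpha[i] + y[i] * t, alpha[j] - y[j] * t

    vals = [v(k, alpha[k], F[k]) for k in range(n)]
    b = -0.5 * (max(vals) + min(vals))
    return alpha, b


def predict_proba(X_train, y, alpha, b, x, gamma=1.0):
    """P(y = +1 | x) = 1 / (1 + exp(-f(x))), with f(x) = sum_k alpha_k y_k K(x_k, x) + b."""
    f = b + sum(a * yk * rbf_kernel(xk, x, gamma) for a, yk, xk in zip(alpha, y, X_train))
    return 1.0 / (1.0 + math.exp(-f))


if __name__ == "__main__":
    X = [[-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0]]
    y = [-1, -1, -1, 1, 1, 1]
    alpha, b = train_klr_dual(X, y, C=10.0, gamma=0.5)
    print("alpha:", [round(a, 4) for a in alpha])  # every entry stays strictly positive
    print("P(y=+1 | x=1.8):", predict_proba(X, y, alpha, b, [1.8], gamma=0.5))
```

Every returned `alpha` entry is strictly positive, so the kernel expansion of the decision function uses all training points; this is the behaviour that sparsity-inducing formulations such as the one proposed here aim to change, while `predict_proba` shows how the conditional class probabilities are read off the trained model.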

翻译:核逻辑回归(KLR)是一种广泛用于二元及多类分类的监督学习方法,它为数据点提供了类别成员条件概率的估计。与支持向量机(SVM)等其他核方法不同,KLR通常不具备稀疏性。以往处理KLR稀疏性的尝试包括一种启发式方法(称为Import Vector Machine, IVM)以及诸如基于$\ell_{1/2}$范数的临时正则化方法。在预测精度与稀疏性之间实现良好权衡仍是一个具有挑战性的问题,从应用角度来看具有潜在的重要影响。在本工作中,我们重新审视二元KLR,并扩展了Keerthi等人提出的训练公式,该扩展能够在保持良好测试精度的同时,诱导训练模型产生稀疏性。为高效求解该公式的对偶问题,我们设计了一种利用二阶信息的序列最小优化(SMO)型分解算法,并证明了其全局收敛性。在文献中的12个数据集上进行的数值实验表明,所提出的二元KLR方法在精度与稀疏性的权衡方面,相较于IVM、基于$\ell_{1/2}$正则化的KLR以及SVM具有竞争力,同时保留了提供类别成员概率信息估计的优势。