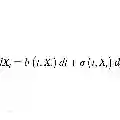

We present a novel kernel-based method for learning multivariate stochastic differential equations (SDEs). The method follows a two-step procedure: we first estimate the drift function and then, given the drift, the (matrix-valued) diffusion function. Occupation kernels are integral functionals on a reproducing kernel Hilbert space (RKHS) that aggregate information along a trajectory. Our approach uses vector-valued occupation kernels to estimate the drift of the stochastic process. For diffusion estimation, we extend this framework with operator-valued occupation kernels, which let us estimate an auxiliary matrix-valued function as a positive semi-definite operator, from which the diffusion estimate follows directly. By optimizing an objective based on reconstruction error, we avoid common challenges in SDE learning such as intractable likelihoods. We propose a simple learning procedure that retains strong predictive accuracy and uses Fenchel duality to improve computational efficiency. We validate the method on simulated benchmarks and on a real-world dataset of amyloid imaging in healthy subjects and subjects with Alzheimer's disease.
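
The abstract does not fix a quadrature rule, kernel, or solver, so the following is only a minimal NumPy sketch of the two-step idea under our own assumptions: a single trajectory on a uniform time grid; one-step segments whose occupation kernels Γ_i(y) = ∫ k(y, x(t)) dt are approximated by the left-endpoint rule dt·k(y, x_i); a separable Gaussian kernel k(x, y)I; and a closed-form ridge solve in place of the Fenchel-dual procedure. For the second step it substitutes a simpler kernel-weighted moment estimator for the operator-valued occupation kernel: the auxiliary function a(x) = σ(x)σ(x)^T is a convex combination of residual outer products, hence positive semi-definite by construction, and σ is taken as its symmetric square root. The names `fit_drift`, `fit_diffusion`, `gaussian_kernel` and all hyperparameters are hypothetical.

```python
import numpy as np


def gaussian_kernel(A, B, length_scale=0.7):
    """Scalar Gaussian kernel matrix with entries exp(-|a - b|^2 / (2 l^2))."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * length_scale**2))


def fit_drift(X, dt, lam=0.05, length_scale=0.7):
    """Occupation-kernel ridge regression for a drift f: R^d -> R^d (sketch).

    X has shape (N + 1, d): snapshots x(t_0), ..., x(t_N) on a uniform grid.
    Segment i is [t_i, t_{i+1}]; its occupation kernel
    Gamma_i(y) = int_{t_i}^{t_{i+1}} k(y, x(t)) dt is approximated by the
    left-endpoint rule dt * k(y, x_i) (an assumption of this sketch).
    """
    starts, increments = X[:-1], np.diff(X, axis=0)  # both (N, d)
    # <Gamma_i, Gamma_j>_RKHS = dt^2 k(x_i, x_j) under this quadrature.
    G = dt**2 * gaussian_kernel(starts, starts, length_scale)
    # Representer theorem: each output coordinate of f is sum_i alpha_i Gamma_i;
    # minimise sum_i |dx_i - <f, Gamma_i>|^2 + lam |f|^2 in closed form.
    alpha = np.linalg.solve(G + lam * np.eye(len(G)), increments)  # (N, d)

    def drift(Y):
        Gamma = dt * gaussian_kernel(np.atleast_2d(Y), starts, length_scale)  # (M, N)
        return Gamma @ alpha  # (M, d)

    return drift


def fit_diffusion(X, dt, drift, length_scale=0.7):
    """Kernel-weighted estimate of a(x) = sigma(x) sigma(x)^T, then sigma = a^(1/2)."""
    starts = X[:-1]
    resid = np.diff(X, axis=0) - dt * drift(starts)  # (N, d) drift-corrected increments
    outer = resid[:, :, None] * resid[:, None, :] / dt  # (N, d, d), each PSD

    def diffusion(y):
        w = gaussian_kernel(np.atleast_2d(y), starts, length_scale)[0]  # (N,), all >= 0
        a = np.tensordot(w / w.sum(), outer, axes=1)  # convex combination, so PSD
        vals, vecs = np.linalg.eigh(a)
        sigma = (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T  # symmetric root
        return a, sigma

    return diffusion


# Toy data: 2-D Ornstein-Uhlenbeck process dX = -theta X dt + noise_scale dW,
# simulated with Euler-Maruyama.
rng = np.random.default_rng(0)
theta, noise_scale, dt, n = 2.0, 1.0, 0.05, 2000
X = np.zeros((n + 1, 2))
for i in range(n):
    X[i + 1] = X[i] - theta * X[i] * dt + noise_scale * np.sqrt(dt) * rng.standard_normal(2)

drift = fit_drift(X, dt)
diffusion = fit_diffusion(X, dt, drift)
print(drift(np.array([0.5, 0.0])))  # the true drift here is -theta * x = [-1, 0]
a0, sigma0 = diffusion(np.zeros(2))
print(a0)  # the true a(x) here is the 2 x 2 identity
```

The left-endpoint rule is a deliberate choice in this sketch: a trapezoidal rule would also use x_{i+1}, which depends on the same Brownian increment as the regression target dx_i, and for a stationary process that correlation can bias the drift estimate toward zero. With one-step segments the drift step reduces to kernel ridge regression on the increments; longer segments would aggregate more of the trajectory per occupation kernel, but then the same correlation issue must be handled.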
