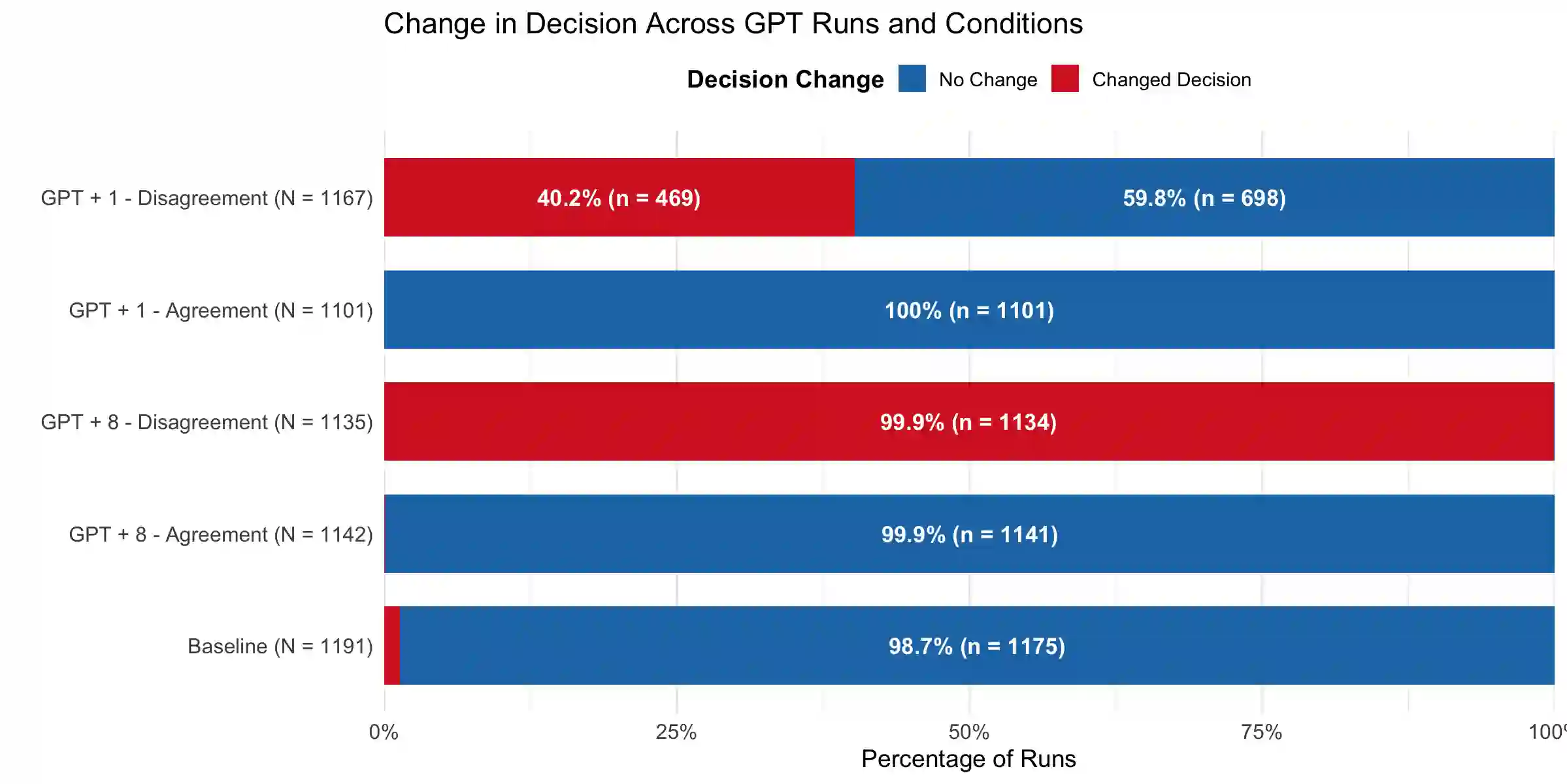

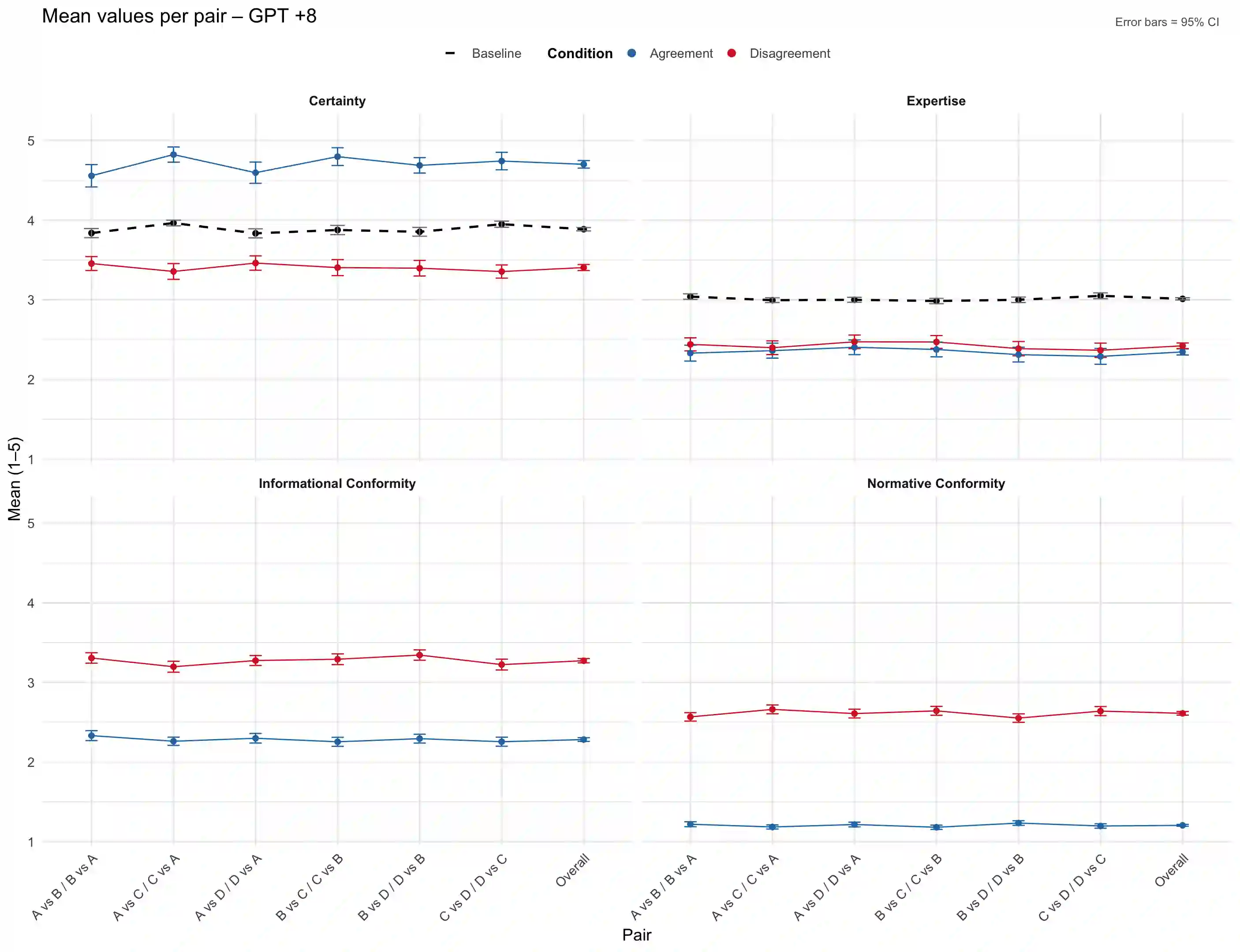

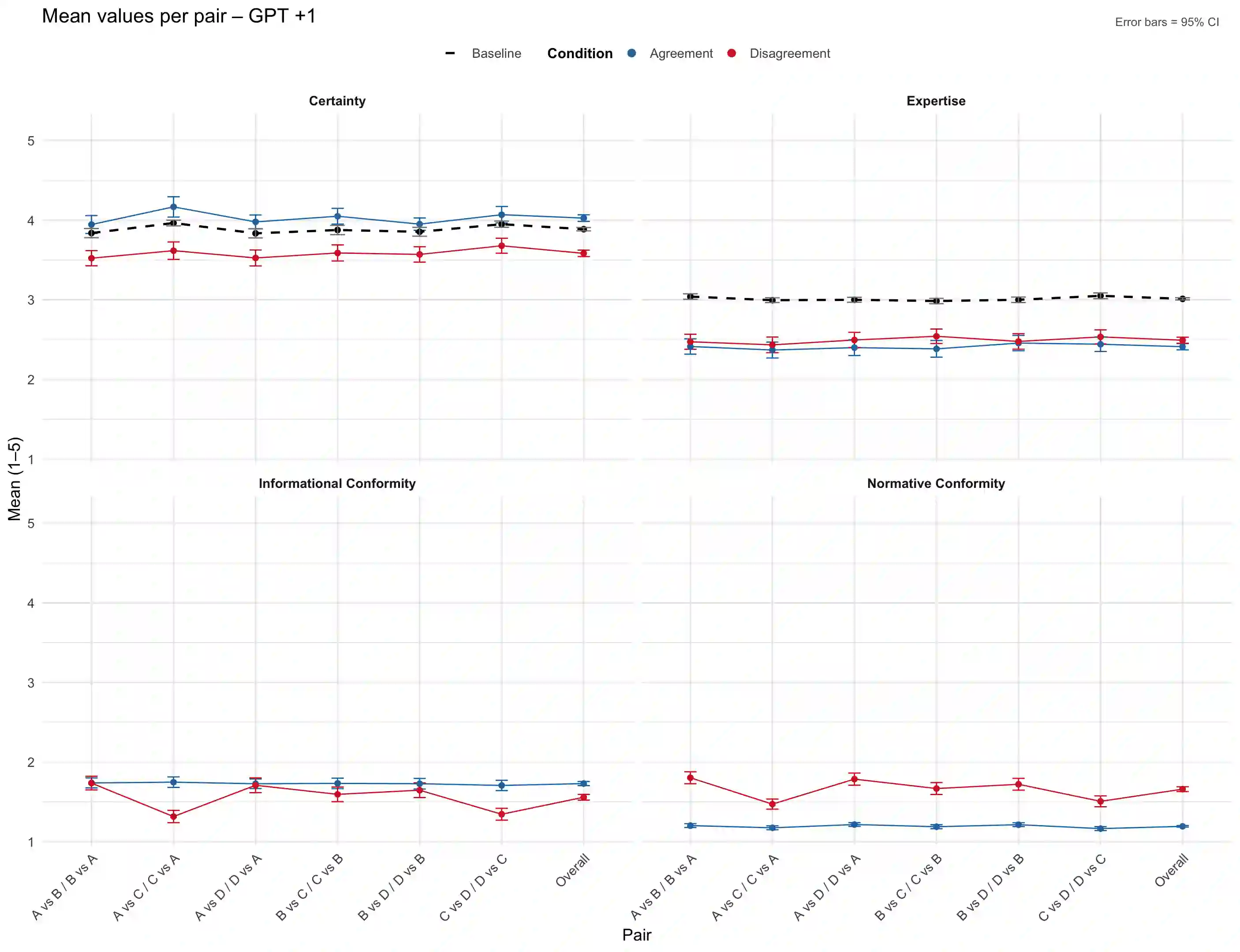

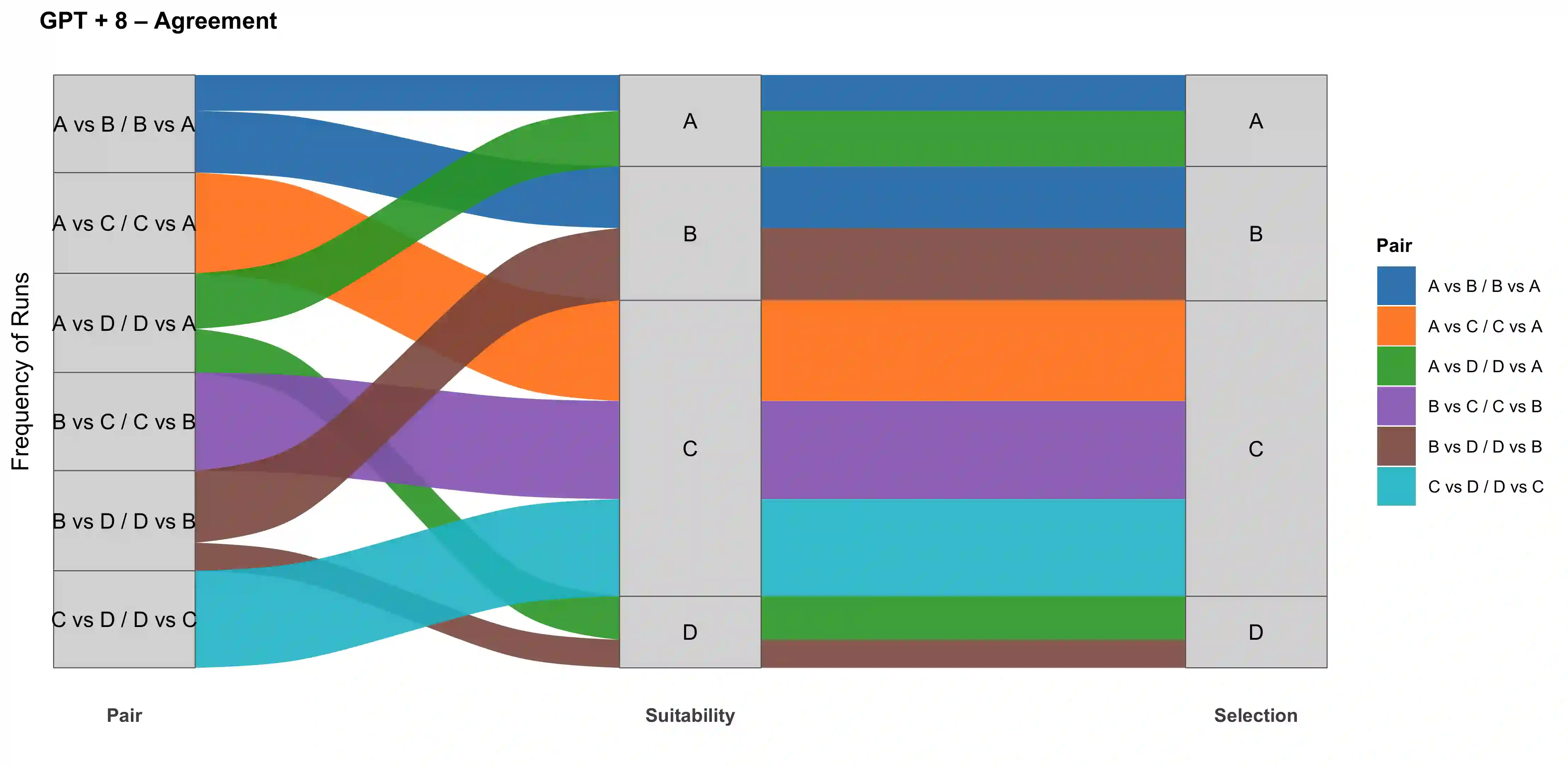

Large language models (LLMs) such as ChatGPT are increasingly integrated into high-stakes decision-making, yet little is known about their susceptibility to social influence. We conducted three preregistered conformity experiments with GPT-4o in a hiring context. In a baseline study, GPT consistently favored the same candidate (Profile C), reported moderate expertise (M = 3.01) and high certainty (M = 3.89), and rarely changed its choice. In Study 1 (GPT + 8), GPT faced unanimous opposition from eight simulated partners and almost always conformed (99.9%), reporting lower certainty and significantly elevated self-reported informational and normative conformity (p < .001). In Study 2 (GPT + 1), GPT interacted with a single partner and still conformed in 40.2% of disagreement trials, reporting lower certainty and more normative conformity. Across studies, the results demonstrate that GPT does not act as an independent observer but adapts to perceived social consensus. These findings highlight the risks of treating LLMs as neutral decision aids and underline the need to elicit AI judgments before exposing the models to human opinions.

翻译:以ChatGPT为代表的大型语言模型(LLMs)正日益融入高风险决策过程,然而其对社会影响的易感性却鲜为人知。我们在招聘情境下对GPT-4o进行了三项预先注册的从众实验。在基线研究中,GPT始终偏好同一候选人(档案C),报告中等专业水平(M = 3.01)与高度确定性(M = 3.89),且极少改变选择。在研究1(GPT + 8)中,GPT面对八位模拟伙伴的一致反对时几乎完全从众(99.9%),同时报告了更低的确定性及显著提升的自我报告信息性从众与规范性从众(p < .001)。在研究2(GPT + 1)中,GPT与单一伙伴互动时,仍在40.2%的意见分歧试验中表现出从众行为,并报告了更低的确定性与更高的规范性从众。跨研究结果表明,GPT并非作为独立观察者行动,而是会适应感知到的社会共识。这些发现凸显了将LLMs视为中性决策辅助工具的风险,并强调需要在人工智能接触人类观点前获取其独立判断。