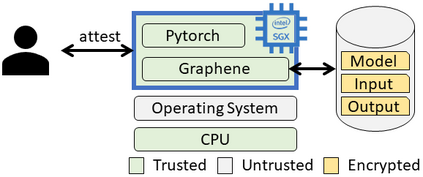

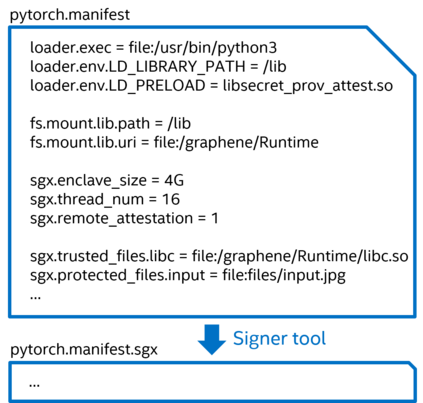

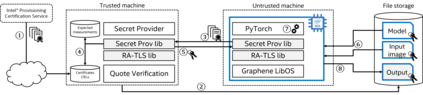

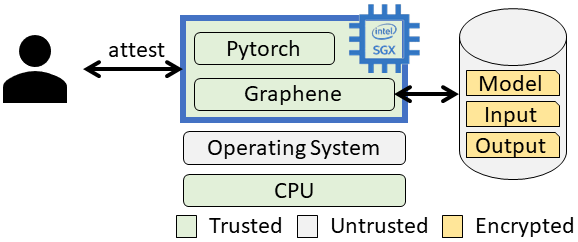

We present a practical framework, based on a trusted execution environment (TEE), for deploying privacy-preserving machine learning (PPML) applications in untrusted clouds. Specifically, we shield unmodified PyTorch ML applications by running them in Intel SGX enclaves with encrypted model parameters and encrypted input data, protecting the confidentiality and integrity of these secrets both at rest and at runtime. We use the open-source Graphene library OS, with its transparent file encryption and SGX-based remote attestation, to minimize porting effort and to provide file protection and attestation seamlessly. Our approach is completely transparent to the machine learning application: neither the developer nor the end user needs to modify the ML application in any way.
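The interplay between remote attestation and encrypted files can be illustrated with a small toy simulation. This is only a sketch under loud assumptions, not Graphene's or SGX's actual interface: `HARDWARE_KEY`, `measure`, `make_quote`, and `release_key` are hypothetical names, and an HMAC over a shared key stands in for the hardware-rooted signature on a real SGX quote. The idea it shows is that the owner of the secrets releases the file-encryption key only to an enclave whose measurement matches the expected application.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Toy stand-in for the hardware root of trust (assumption for illustration).
# Real SGX quotes are signed with hardware-rooted attestation keys,
# not with a shared HMAC key.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(enclave_code: bytes) -> bytes:
    """Hash the enclave contents, playing the role of an enclave measurement."""
    return hashlib.sha256(enclave_code).digest()


def make_quote(enclave_code: bytes, nonce: bytes) -> Tuple[bytes, bytes]:
    """Simulate the platform producing a (measurement, signature) pair."""
    measurement = measure(enclave_code)
    signature = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    return measurement, signature


def release_key(
    quote: Tuple[bytes, bytes],
    nonce: bytes,
    expected_measurement: bytes,
    file_key: bytes,
) -> Optional[bytes]:
    """Return the file-encryption key only if the quote verifies."""
    measurement, signature = quote
    expected_sig = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    # Reject quotes that were not produced by the (simulated) trusted platform.
    if not hmac.compare_digest(signature, expected_sig):
        return None
    # Reject enclaves running anything other than the expected application.
    if not hmac.compare_digest(measurement, expected_measurement):
        return None
    return file_key


if __name__ == "__main__":
    app = b"unmodified PyTorch application"
    nonce = secrets.token_bytes(16)   # fresh per request, prevents replay
    file_key = secrets.token_bytes(16)

    good = release_key(make_quote(app, nonce), nonce, measure(app), file_key)
    bad = release_key(make_quote(b"tampered app", nonce), nonce, measure(app), file_key)
    print("key released to genuine enclave:", good == file_key)
    print("key released to tampered enclave:", bad is not None)
```

In this sketch the application code itself never handles attestation or keys, which mirrors the transparency claim above: in the described framework that work is done by the library OS, so the ML application stays unmodified.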
