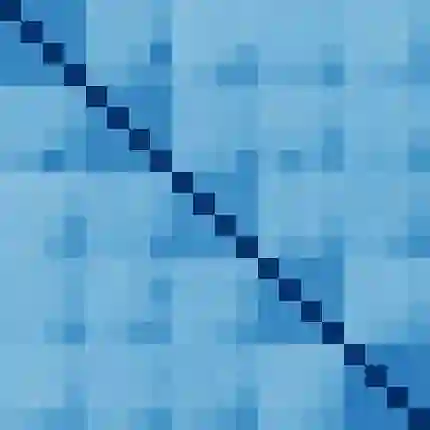

Federated learning (FL) is a prominent distributed machine learning framework, valued for its benefits to data privacy and communication efficiency. Because full client participation is often infeasible under constrained resources, partial-participation FL algorithms have been studied that proactively select or sample a subset of clients, aiming for learning performance close to the full-participation case. This paper studies a passive partial client participation scenario that is much less well understood, in which partial participation results from external events, namely client dropout, rather than from a decision of the FL algorithm. We cast FL with client dropout as a special case of a larger class of FL problems in which clients can submit substitute (possibly inaccurate) local model updates. Based on our convergence analysis, we develop a new algorithm, FL-FDMS, that discovers friends of clients (i.e., clients whose data distributions are similar) on the fly and uses friends' local updates as substitutes for those of the dropout clients, thereby reducing the substitution error and improving convergence performance. FL-FDMS also incorporates a complexity reduction mechanism, making it both theoretically sound and practically useful. Experiments on MNIST and CIFAR-10 confirm the superior performance of FL-FDMS in handling client dropout in FL.
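
The friend-substitution idea can be illustrated with a minimal sketch. It is not the FL-FDMS algorithm itself: the abstract does not specify how similarity between clients is measured, so the sketch assumes cosine similarity between local updates, averaged over the rounds in which both clients participated, as a stand-in for similarity of data distributions. The names `FriendTracker`, `best_friend`, and `aggregate_with_substitution` are hypothetical, and the complexity reduction mechanism is omitted.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


class FriendTracker:
    """Keeps a running average similarity for every pair of clients seen together.

    Assumption: similarity of local updates is used as a proxy for
    similarity of the clients' data distributions.
    """

    def __init__(self):
        self.sim_sum = {}  # (i, j) with i < j -> sum of observed similarities
        self.count = {}    # (i, j) with i < j -> number of observations

    def observe(self, updates):
        """Record pairwise similarities for one round; updates maps client id -> vector."""
        ids = sorted(updates)
        for a in range(len(ids)):
            for b in range(a + 1, len(ids)):
                key = (ids[a], ids[b])
                s = cosine(updates[ids[a]], updates[ids[b]])
                self.sim_sum[key] = self.sim_sum.get(key, 0.0) + s
                self.count[key] = self.count.get(key, 0) + 1

    def score(self, i, j):
        """Average similarity of clients i and j, or None if never observed together."""
        key = (min(i, j), max(i, j))
        n = self.count.get(key, 0)
        return self.sim_sum[key] / n if n else None

    def best_friend(self, dropped, active):
        """Active client with the highest average similarity to the dropped client."""
        best, best_score = None, -math.inf
        for j in active:
            s = self.score(dropped, j)
            if s is not None and s > best_score:
                best, best_score = j, s
        return best


def aggregate_with_substitution(updates, all_clients, tracker):
    """Average local updates, filling each dropout with its best friend's update.

    A dropout with no known friend is left out of the average.
    Returns None if no update is available at all.
    """
    tracker.observe(updates)
    active = sorted(updates)
    filled = dict(updates)
    for i in all_clients:
        if i not in updates:
            friend = tracker.best_friend(i, active)
            if friend is not None:
                filled[i] = updates[friend]
    if not filled:
        return None
    dim = len(next(iter(filled.values())))
    return [sum(u[k] for u in filled.values()) / len(filled) for k in range(dim)]


if __name__ == "__main__":
    tracker = FriendTracker()
    clients = [0, 1, 2]
    # Round 1: every client participates, so all pairwise similarities are observed.
    aggregate_with_substitution({0: [1.0, 0.0], 1: [1.0, 0.1], 2: [-1.0, 0.0]}, clients, tracker)
    # Round 2: client 1 drops out; the update of its most similar peer is substituted.
    print(aggregate_with_substitution({0: [2.0, 0.0], 2: [-2.0, 0.0]}, clients, tracker))
```

In this sketch a dropout is simply replaced by the update of its single most similar active peer. Whether to use one friend or a weighted combination of several, and how to bound the cost of tracking all client pairs, are design choices the abstract leaves open.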
