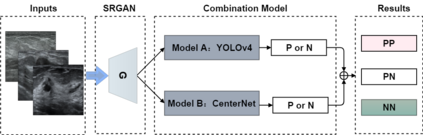

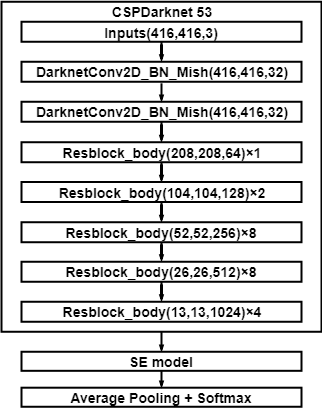

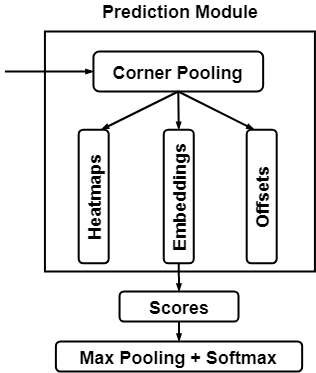

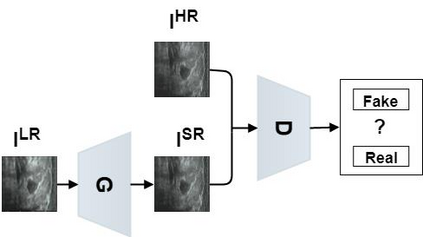

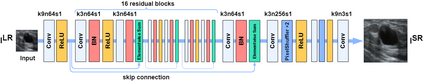

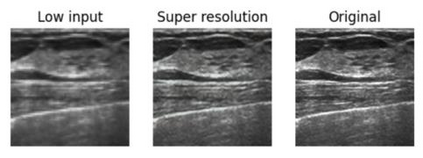

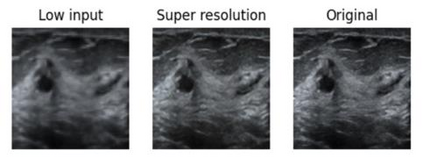

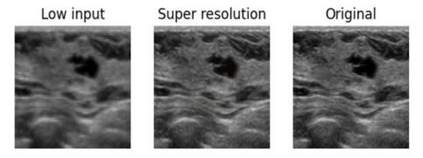

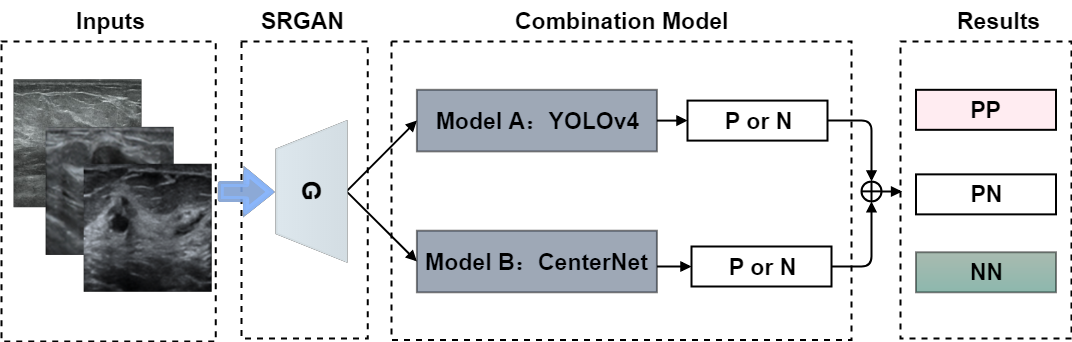

Objective: Breast cancer screening is of great significance in contemporary women's preventive health care. Existing machines with embedded AI systems do not reach the accuracy that clinicians hope for, and making such intelligent systems more reliable is a common problem. Methods: 1) Ultrasound image super-resolution: the SRGAN super-resolution network reduces the blurriness of ultrasound images caused by the device itself and improves the accuracy and generalization of the detection model. 2) In response to the needs of medical images, we have improved the YOLOv4 and CenterNet models. 3) Multi-AI model: based on the respective advantages of different AI models, we employ two AI models to cross-validate clinical results, accepting results on which both models agree and rejecting the others. Results: 1) With the help of the super-resolution model, the YOLOv4 model and the CenterNet model increased their mAP scores by 9.6% and 13.8%, respectively. 2) Two methods for transforming a target detection model into a classification model are proposed, and their output is unified in a specified format so that the multi-AI model can call them easily. 3) In the classification evaluation experiment, the multi-AI model, which combines the YOLOv4 model (sensitivity 57.73%, specificity 90.08%) and the CenterNet model (sensitivity 62.64%, specificity 92.54%), refuses to make judgments on 23.55% of the input data. Correspondingly, performance improves substantially, to 95.91% sensitivity and 96.02% specificity. Conclusion: Our work makes the AI model more reliable in medical image diagnosis. Significance: 1) The proposed method makes the target detection model more suitable for diagnosing breast ultrasound images. 2) It provides a new idea for artificial intelligence in medical diagnosis, making it more convenient to introduce target detection models from other fields to serve medical lesion screening.
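The accept-on-agreement rule of the multi-AI model can be illustrated with a minimal sketch. The abstract does not specify the unified output format, so this example assumes each model has already been reduced to a binary label per image (1 = suspicious, 0 = not suspicious). The function names `consensus_label` and `evaluate_consensus` are hypothetical, and the data in the usage note is a toy example, not the paper's.

```python
from typing import Dict, Optional, Sequence


def consensus_label(label_a: int, label_b: int) -> Optional[int]:
    """Return the shared label when both models agree, else None (rejected)."""
    return label_a if label_a == label_b else None


def _ratio(numerator: int, denominator: int) -> float:
    """Safe division; returns NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def evaluate_consensus(
    preds_a: Sequence[int], preds_b: Sequence[int], truths: Sequence[int]
) -> Dict[str, float]:
    """Score only the cases on which the two models agree.

    Assumed encoding (not from the paper): 1 = positive, 0 = negative.
    """
    if not (len(preds_a) == len(preds_b) == len(truths)):
        raise ValueError("all inputs must have the same length")

    tp = fn = tn = fp = rejected = 0
    for a, b, truth in zip(preds_a, preds_b, truths):
        label = consensus_label(a, b)
        if label is None:
            rejected += 1  # the models disagree, so no judgment is made
        elif truth == 1:
            tp += label == 1
            fn += label == 0
        else:
            tn += label == 0
            fp += label == 1

    return {
        "rejection_rate": _ratio(rejected, len(truths)),
        "sensitivity": _ratio(tp, tp + fn),  # TP / (TP + FN)
        "specificity": _ratio(tn, tn + fp),  # TN / (TN + FP)
    }
```

For example, `evaluate_consensus([1, 1, 0, 0, 1, 0], [1, 0, 0, 0, 1, 1], [1, 1, 0, 0, 0, 0])` rejects the two cases where the models disagree and computes sensitivity and specificity on the remaining four. The trade-off mirrors the one reported above: a share of the inputs goes unjudged in exchange for higher sensitivity and specificity on the accepted ones.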
