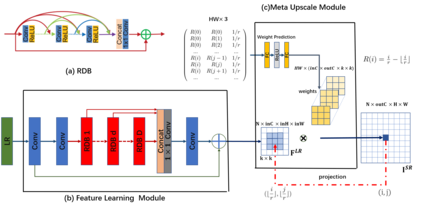

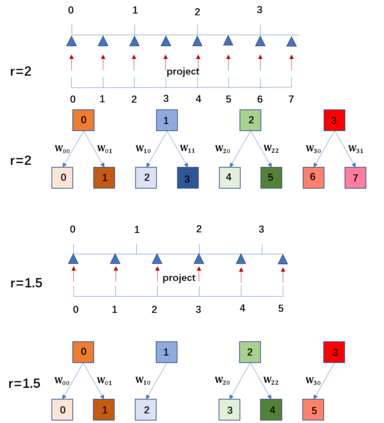

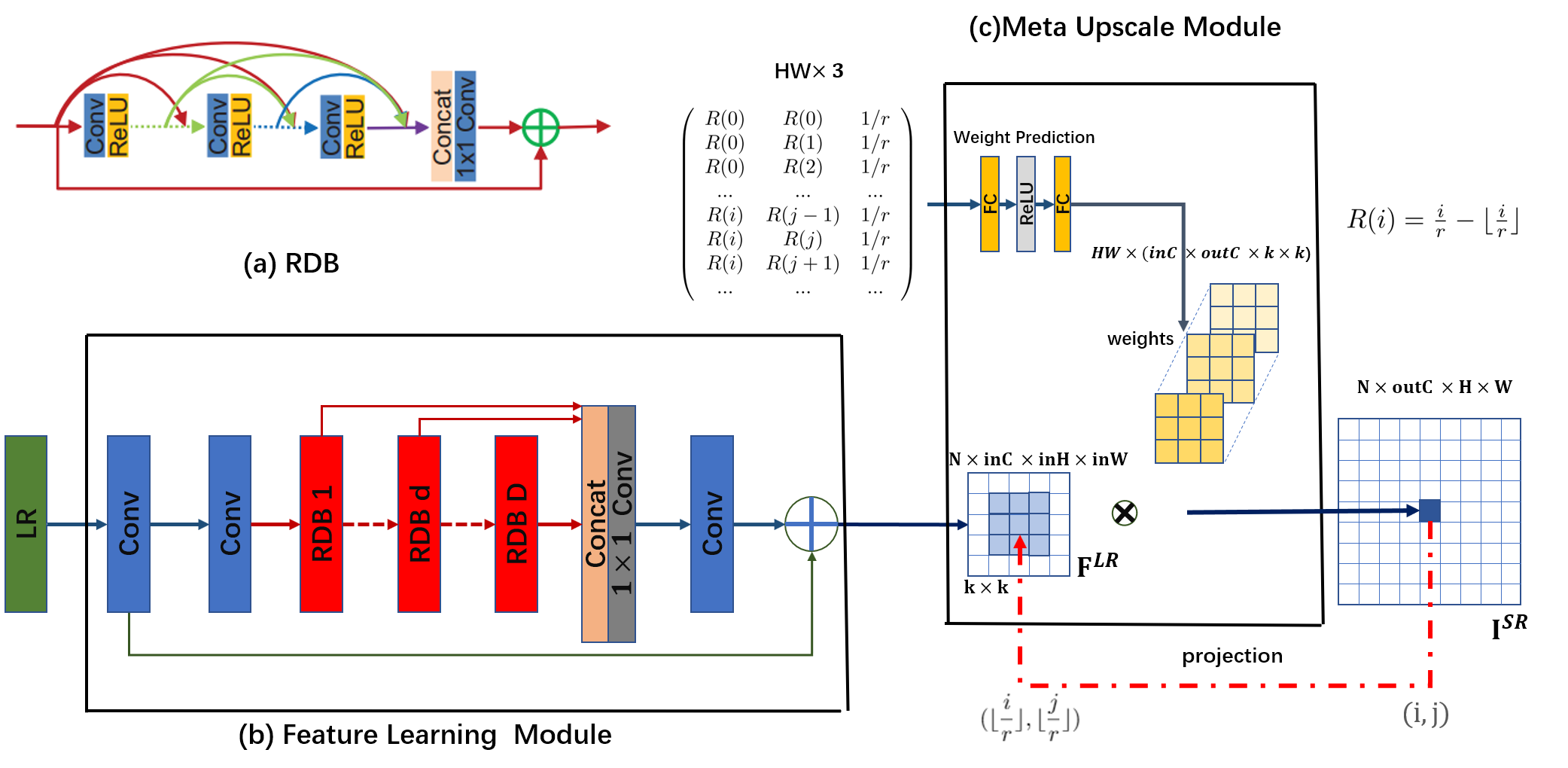

Recent research on super-resolution has achieved great success thanks to the development of deep convolutional neural networks (DCNNs). However, super-resolution with an arbitrary scale factor has long been neglected. Most previous work treats super-resolution at different scale factors as independent tasks: a separate model is trained for each scale factor, which is computationally inefficient, and only a few integer scale factors are considered. In this work, we propose a novel method called Meta-SR, the first to solve super-resolution of arbitrary scale factor (including non-integer scale factors) with a single model. In Meta-SR, the Meta-Upscale Module replaces the traditional upscale module. For an arbitrary scale factor, the Meta-Upscale Module dynamically predicts the weights of the upscale filters by taking the scale factor as input and uses these weights to generate an HR image of arbitrary size. For any low-resolution image, Meta-SR can zoom in continuously with an arbitrary scale factor using only a single model. We evaluated the proposed method through extensive experiments on widely used benchmark datasets for single image super-resolution. The experimental results show the superiority of our Meta-Upscale Module.
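The idea of predicting upscale-filter weights from the scale factor can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not specify the architecture, so the function name `meta_upscale`, the two-layer weight predictor, the per-pixel input (fractional offsets plus `1/scale`), the 1x1 filter size, and the single-channel output are all hypothetical choices, and the predictor weights are random rather than trained.

```python
import numpy as np

def meta_upscale(features, scale, w1, b1, w2, b2):
    """Upscale an LR feature map (H, W, C) to a single-channel image
    of size (floor(H*scale), floor(W*scale)) with predicted 1x1 filters."""
    h, w, c = features.shape
    out_h, out_w = int(np.floor(h * scale)), int(np.floor(w * scale))

    # Project every output (HR) coordinate back onto the LR grid.
    ys = np.arange(out_h) / scale
    xs = np.arange(out_w) / scale
    y0 = np.minimum(np.floor(ys).astype(int), h - 1)
    x0 = np.minimum(np.floor(xs).astype(int), w - 1)

    # Assumed predictor input per output pixel: fractional offsets and 1/scale.
    off_y, off_x = np.meshgrid(ys - y0, xs - x0, indexing="ij")
    inp = np.stack([off_y, off_x, np.full_like(off_y, 1.0 / scale)], axis=-1)

    # Small MLP that predicts one filter (C weights) per output pixel.
    hidden = np.maximum(inp @ w1 + b1, 0.0)      # (out_h, out_w, hidden)
    filters = hidden @ w2 + b2                   # (out_h, out_w, C)

    # Gather the matching LR feature vector and apply the predicted filter.
    gathered = features[y0[:, None], x0[None, :]]  # (out_h, out_w, C)
    return (gathered * filters).sum(axis=-1)

# Random, untrained parameters; only the shapes are meaningful here.
rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 8, 16))
w1, b1 = rng.standard_normal((3, 32)) * 0.1, np.zeros(32)
w2, b2 = rng.standard_normal((32, 16)) * 0.1, np.zeros(16)

for scale in (1.5, 2.0, 3.7):
    sr = meta_upscale(feats, scale, w1, b1, w2, b2)
    print(scale, sr.shape)
```

The same parameters serve every scale factor, integer or not, because the filters are regenerated from the scale on each call instead of being stored per scale.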
