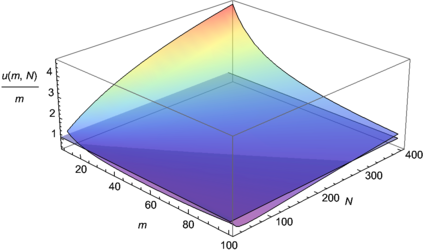

We address the problem of how to achieve optimal inference in distributed quantile regression without stringent scaling conditions. This is challenging due to the non-smooth nature of the quantile regression loss function, which invalidates the use of existing methodology. The difficulties are resolved through a double-smoothing approach that is applied to the local (at each data source) and global objective functions. Despite the reliance on a delicate combination of local and global smoothing parameters, the quantile regression model is fully parametric, thereby facilitating interpretation. In the low-dimensional regime, we discuss and compare several alternative confidence set constructions, based on inversion of Wald and score-type tests and resampling techniques, detailing an improvement that is effective for more extreme quantile coefficients. In high dimensions, a sparse framework is adopted, where the proposed doubly-smoothed objective function is complemented with an $\ell_1$-penalty. A thorough simulation study further elucidates our findings. Finally, we provide estimation theory and numerical studies for sparse quantile regression in the high-dimensional setting.
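To illustrate why smoothing helps with the non-smooth quantile loss, the following is a minimal sketch rather than the authors' construction. The abstract does not state which smoother or bandwidths are used, so the sketch assumes convolution of the check loss $\rho_\tau(u) = u(\tau - \mathbb{1}\{u < 0\})$ with a Gaussian kernel of bandwidth $h$, which has the closed form $h\,\phi(u/h) + u\{\tau - \Phi(-u/h)\}$ and derivative $\tau - \Phi(-u/h)$. The function names and the single bandwidth `h` are hypothetical. In a double-smoothing scheme, one such bandwidth would presumably be chosen for the local objectives and another for the global one.

```python
import math


def check_loss(u, tau):
    """Standard (non-smooth) quantile check loss rho_tau(u)."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def _std_normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def _std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def smoothed_check_loss(u, tau, h):
    """Check loss convolved with a Gaussian kernel of bandwidth h > 0.

    Assumption: Gaussian kernel; the abstract does not specify the kernel.
    Closed form: h * phi(u/h) + u * (tau - Phi(-u/h)).
    """
    z = u / h
    return h * _std_normal_pdf(z) + u * (tau - _std_normal_cdf(-z))


def smoothed_check_grad(u, tau, h):
    """Derivative of the smoothed loss in u: tau - Phi(-u/h).

    Unlike the check loss, this is continuous at u = 0.
    """
    return tau - _std_normal_cdf(-u / h)


if __name__ == "__main__":
    tau, h = 0.9, 0.5
    for u in (-1.0, -0.1, 0.0, 0.1, 1.0):
        print(u, check_loss(u, tau), smoothed_check_loss(u, tau, h),
              smoothed_check_grad(u, tau, h))
```

Under this assumption the smoothed loss is convex and twice differentiable, lies above the check loss, and converges to it as `h` shrinks, which is what makes gradient-based and Newton-type updates applicable.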
