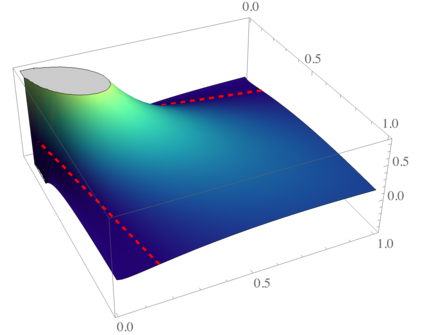

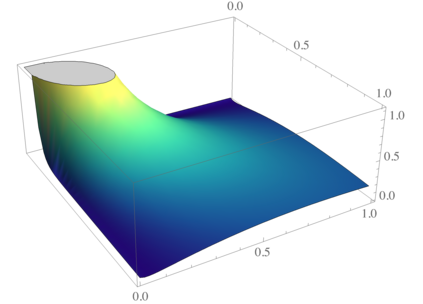

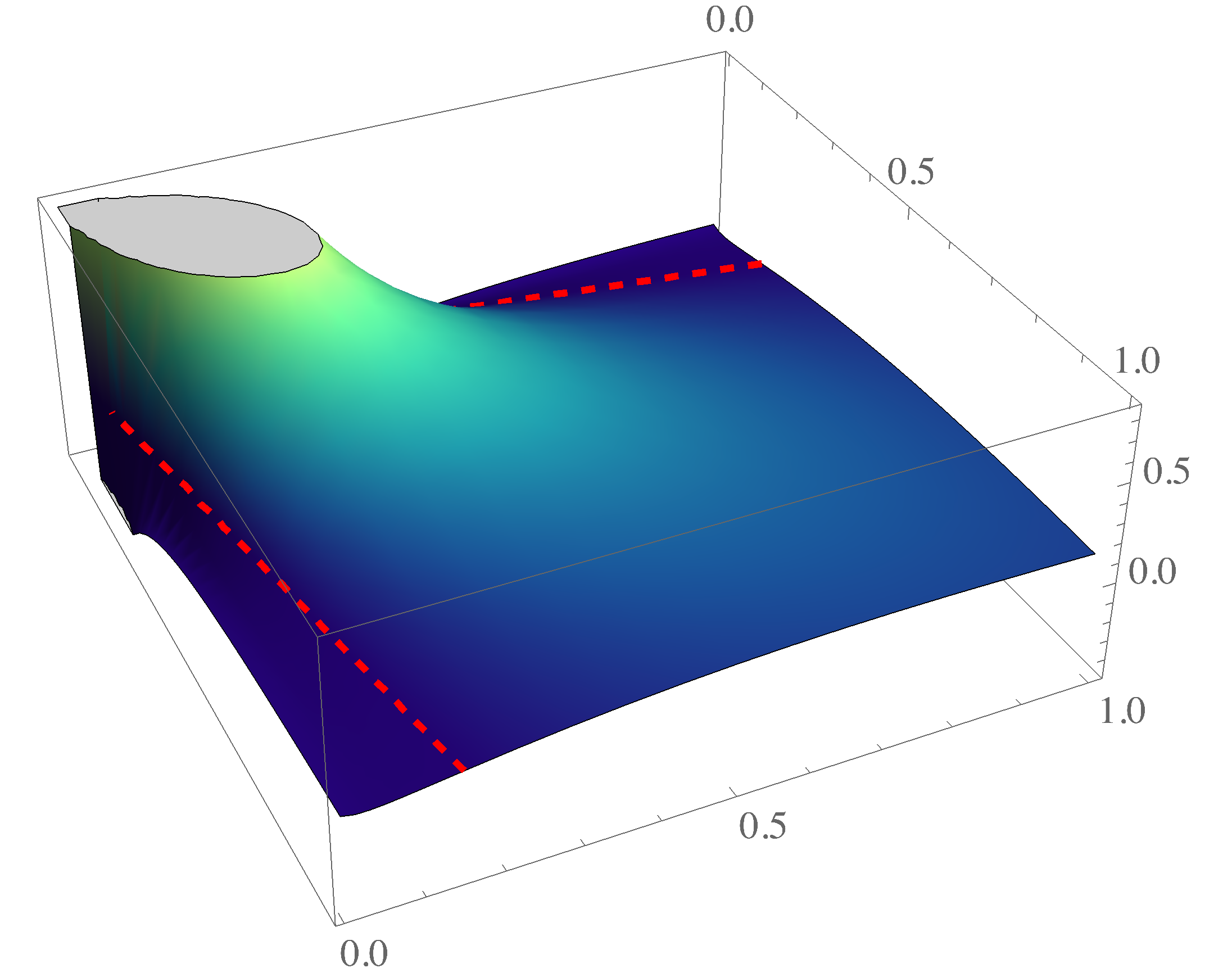

We study the framework of online learning with feedback graphs introduced by Mannor and Shamir (2011), in which the feedback received by the online learner is specified by a graph $G$ over the available actions. We develop an algorithm that simultaneously achieves regret bounds of the form $\smash{\mathcal{O}(\sqrt{\theta(G) T})}$ with adversarial losses, $\mathcal{O}(\theta(G)\operatorname{polylog}{T})$ with stochastic losses, and $\mathcal{O}(\theta(G)\operatorname{polylog}{T} + \smash{\sqrt{\theta(G) C}})$ with stochastic losses subject to $C$ adversarial corruptions. Here, $\theta(G)$ is the clique covering number of the graph $G$. Our algorithm is an instantiation of Follow-the-Regularized-Leader with a novel regularization that can be seen as a product of a Tsallis entropy component (inspired by Zimmert and Seldin (2019)) and a Shannon entropy component (analyzed in the corrupted stochastic case by Amir et al. (2020)), thus subtly interpolating between the two forms of entropy. One of our key technical contributions is establishing the convexity of this regularizer and controlling its inverse Hessian, despite its complex product structure.
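All three bounds scale with $\theta(G)$, the minimum number of cliques needed to partition the actions. A minimal sketch for computing it on small graphs follows. It assumes the feedback graph is undirected, with actions labeled `0..K-1` and edges given as pairs. Self-loops are ignored because they do not affect a clique cover. The helper name `clique_cover_number` is hypothetical and is not taken from the paper. Computing $\theta(G)$ is NP-hard in general, since it equals the chromatic number of the complement graph, so the sketch uses exponential-time backtracking.

```python
from itertools import combinations


def clique_cover_number(n, edges):
    """Smallest number of cliques partitioning vertices 0..n-1 (brute force).

    Each vertex either joins an existing clique it is adjacent to in full,
    or opens a new clique. Exponential time: intended for small graphs only.
    """
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)

    best = [n]    # trivial cover: every vertex is its own clique
    cliques = []  # cliques of the partial cover being built

    def assign(v):
        # Prune: this branch already uses as many cliques as the best cover.
        if len(cliques) >= best[0]:
            return
        if v == n:
            best[0] = len(cliques)
            return
        # Try adding v to each existing clique whose members are all neighbors of v.
        for c in cliques:
            if all(u in adj[v] for u in c):
                c.append(v)
                assign(v + 1)
                c.pop()
        # Or start a new clique containing only v.
        cliques.append([v])
        assign(v + 1)
        cliques.pop()

    assign(0)
    return best[0]


if __name__ == "__main__":
    K = 4
    full_info = list(combinations(range(K), 2))  # complete graph: every action reveals every loss
    print(clique_cover_number(K, full_info))     # 1
    print(clique_cover_number(K, []))            # 4: bandit feedback, no edges
```

The two usage cases are the extremes. A complete graph (full information) gives $\theta(G) = 1$, and an edgeless graph (bandit feedback) gives $\theta(G) = K$. Intermediate graphs, such as a path on four actions, fall in between with $\theta(G) = 2$.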

翻译:我们用曼诺尔和沙米尔(2011年)推出的反馈图表框架来研究在线学习,曼诺尔和沙米尔(2011年)引入了反馈图框架,其中在线学习者收到的反馈用一张G$G$对可用行动进行指定。我们开发了一种算法,同时实现表格的遗憾界限:$\smash_mathcal{O}(Sqrt=theta(G)T)}(美元),有对抗性亏损;$mathcal{O}(theta(G)\opatorname{polylog_T}),在线学习者收到的反馈用一张G$GG$指定。我们开发了一个算法,它同时实现了表格的遗憾界限:$\smash_sqrt_thalca{(G}}(sqrqrat)} $C$(G), 有对抗性亏损的亏损; $thta}(G) $\(G) 是包含G$G$G$$(G) 的数。 我们的“Regalal-alizal-leard-leder-leard {Oard ” 和“nal” Incal rucal rucal rucal rucal” rucal) 。这可以将一个固定的“20 和“cal” 和“cal” 和“cal ” 的“cal” 和“cal”两个“cal 的“cal”的“cal ”的“cal ” ” 。通过”的“cal 和“cal”的”的“cal”的“cal” 和“cal”的“cal”的“20 ”的” ” ” 的“cal 和“cal ” ”的“cal 和“cal ”的”的“cal 和“cal 和“cal ”的“cal ”的“cal 和“cal ”的”的”的”的”的“Sal 和“Sal 和“cal 和“S”的“S”两个“S”的“S”的“S”