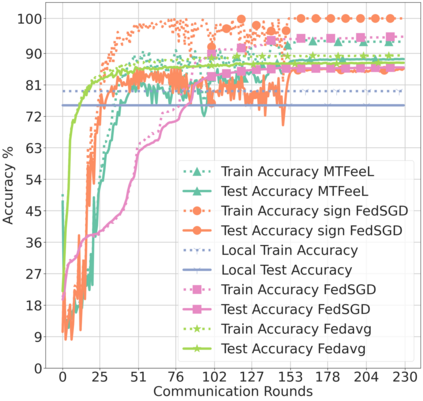

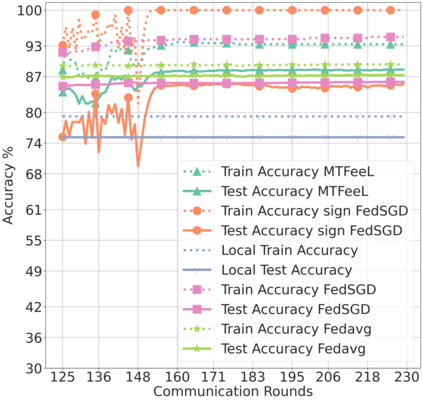

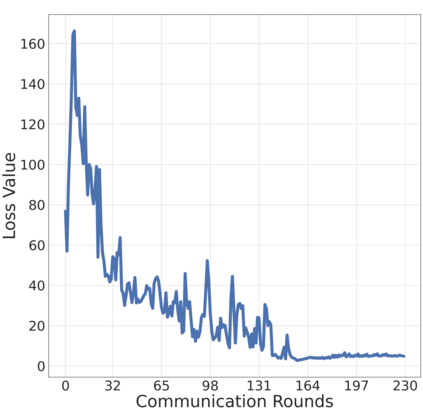

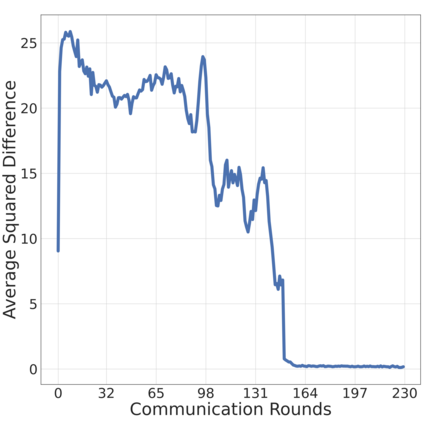

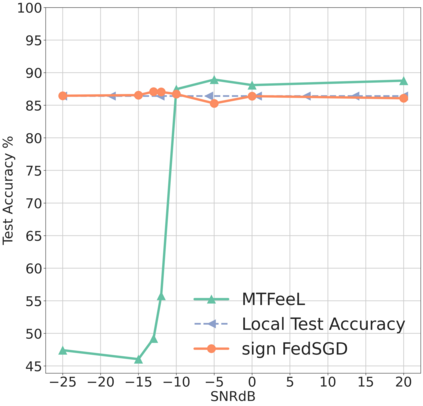

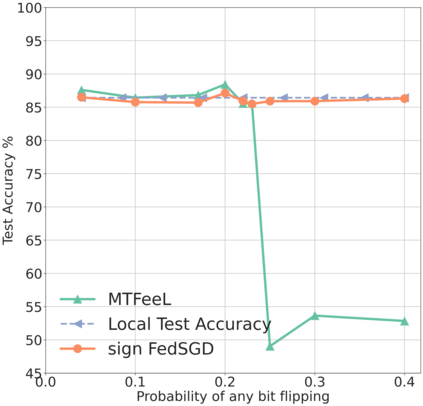

Federated Learning (FL) has evolved into a promising technique for distributed machine learning across edge devices. Most work in FL learns a single neural network (NN) that optimises a global objective, which can be suboptimal for individual edge devices. Although some works find an NN personalised for device-specific tasks, they lack generalisation and/or convergence guarantees. This paper presents a novel communication-efficient FL algorithm, with guarantees, for personalised learning in a wireless setting. The algorithm relies on finding a "better" empirical estimate of the loss at each device, using a weighted average of the losses across different devices. It is derived from a Probably Approximately Correct (PAC) bound that bounds the true loss by the proposed empirical loss together with (i) the Rademacher complexity, (ii) the discrepancy, and (iii) a penalty term. The algorithm uses signed gradient feedback to find a personalised NN at each device. It is also proven to converge over a Rayleigh flat-fading uplink channel at a rate of the order max{1/SNR, 1/sqrt(T)}. Experimental results show that, under practical SNR regimes, the proposed algorithm outperforms locally trained models as well as the conventionally used FedAvg and FedSGD algorithms.
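The two mechanisms named in the abstract, a weighted-average empirical loss and one-bit (signed) gradient feedback over a Rayleigh flat-fading uplink, can be illustrated with a minimal NumPy sketch. This is not the paper's algorithm. The weight vector `alpha`, the unit-power channel normalisation, the pre-scaling of each device's sign by its weight, and the majority-vote (sign of the superposed signal) aggregation are all assumptions borrowed from generic signSGD-over-fading-channel models. The function names `weighted_empirical_loss` and `signed_feedback_rayleigh` are hypothetical.

```python
import numpy as np


def weighted_empirical_loss(per_device_losses, alpha):
    """Personalised empirical loss for one device: a weighted average of the
    empirical losses that a single model attains on every device's data.

    per_device_losses: shape (K,), entry j is the empirical loss on device j.
    alpha: shape (K,), non-negative weights summing to 1 (hypothetical choice).
    """
    losses = np.asarray(per_device_losses, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    if alpha.shape != losses.shape:
        raise ValueError("alpha and losses must have the same shape")
    if np.any(alpha < 0) or not np.isclose(alpha.sum(), 1.0):
        raise ValueError("alpha must be a probability vector")
    return float(alpha @ losses)


def signed_feedback_rayleigh(grads, alpha, snr, rng):
    """One uplink round of one-bit gradient feedback (assumed model).

    Device j transmits alpha_j * sign(g_j). Flat fading scales the whole
    transmission by one Rayleigh amplitude |h_j| per device per round. The
    signals superpose in the air, the receiver adds Gaussian noise with
    variance 1/snr (unit-power normalisation, assumed), and only the sign
    of the received vector is kept (majority vote).
    """
    grads = np.asarray(grads, dtype=float)   # shape (K, d)
    alpha = np.asarray(alpha, dtype=float)   # shape (K,)
    K, d = grads.shape
    # |h_j| with h_j ~ CN(0, 1), so that E|h_j|^2 = 1.
    h = np.abs(rng.normal(size=K) + 1j * rng.normal(size=K)) / np.sqrt(2)
    noise = rng.normal(scale=1.0 / np.sqrt(snr), size=d)
    received = (alpha * h) @ np.sign(grads) + noise   # shape (d,)
    return np.sign(received)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    K, d = 4, 3
    alpha_k = np.full(K, 1.0 / K)        # hypothetical weights for device k
    w_k = np.zeros(d)                    # device k's personalised parameters
    grads = rng.normal(size=(K, d))      # stand-in local gradients
    w_k -= 0.01 * signed_feedback_rayleigh(grads, alpha_k, snr=10.0, rng=rng)
    print(weighted_empirical_loss([0.3, 0.5, 0.2, 0.4], alpha_k), w_k)
```

In this toy model, lowering `snr` raises the chance that receiver noise flips the sign of a coordinate. That gives an intuition, not a derivation, for why an SNR-dependent term appears alongside the 1/sqrt(T) term in the stated rate.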

翻译:联邦学习联合会(FL)已经发展成为处理分布式机器跨边缘设备学习的一种有希望的技术。一个单一神经网络(NN),它是一个全球性目标的优化,通常在FL的大多数工作中都学习,对于边缘设备来说,它可能并不最理想。虽然在为边缘设备特定任务寻找一个个人化的NNT工作上存在,但它们缺乏一般化和(或)趋同的保证。在本文中,提出了一个新的通信高效FL算法,用于在有保障的无线环境中进行个性化学习。算法依赖于利用不同设备损失的加权平均值,找到每个设备损失的“最精确”的经验性估计。这个算法可能来自一个与拟议经验性损失的真正损失相联系的大约大约“Rich”(PAC),并且受(i) Rademacher复杂的工作, (ii) 差异, (iii) 和惩罚性术语的约束。使用经签名的梯度反馈,在每个设备中找到个性化的NNCW, 也证明在Rayleilef flaf flad fal fal assal rags (在上) as prealgalalation rausgal ragalation raft raft raft ragaldaldaldaldaldald raps raft 中, as as as laviolviolviolviolviolviold.