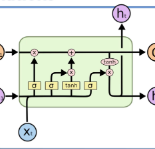

Translating between languages where certain features are marked morphologically in one but are absent or marked contextually in the other is an important test case for machine translation. Ambiguities arise when translating into English, which marks (in)definiteness morphologically, from Yorùbá, which uses bare nouns and marks these features contextually. In this work, we perform a fine-grained analysis of how a statistical machine translation (SMT) system compares with two neural machine translation (NMT) systems (BiLSTM and Transformer) when translating bare nouns (BNs) from Yorùbá into English. We investigate to what extent the systems identify BNs and translate them correctly, and how their output compares with human translation patterns. We also analyze the types of errors each model makes and provide a linguistic description of these errors. From this analysis we glean insights for evaluating model performance in low-resource settings. In translating bare nouns, our results show that the Transformer model outperforms the SMT and BiLSTM models for 4 categories, the BiLSTM outperforms the SMT model for 3 categories, and the SMT outperforms the NMT models for 1 category.
