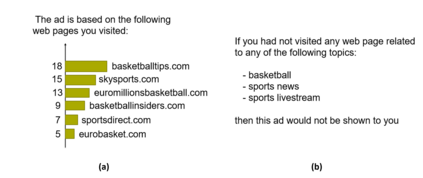

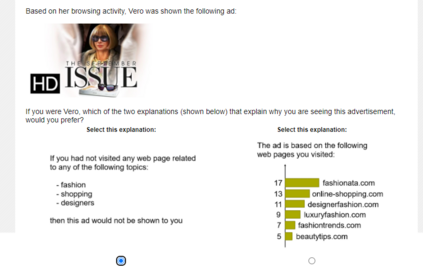

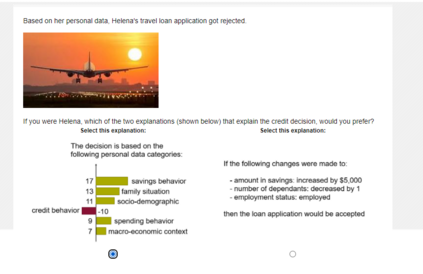

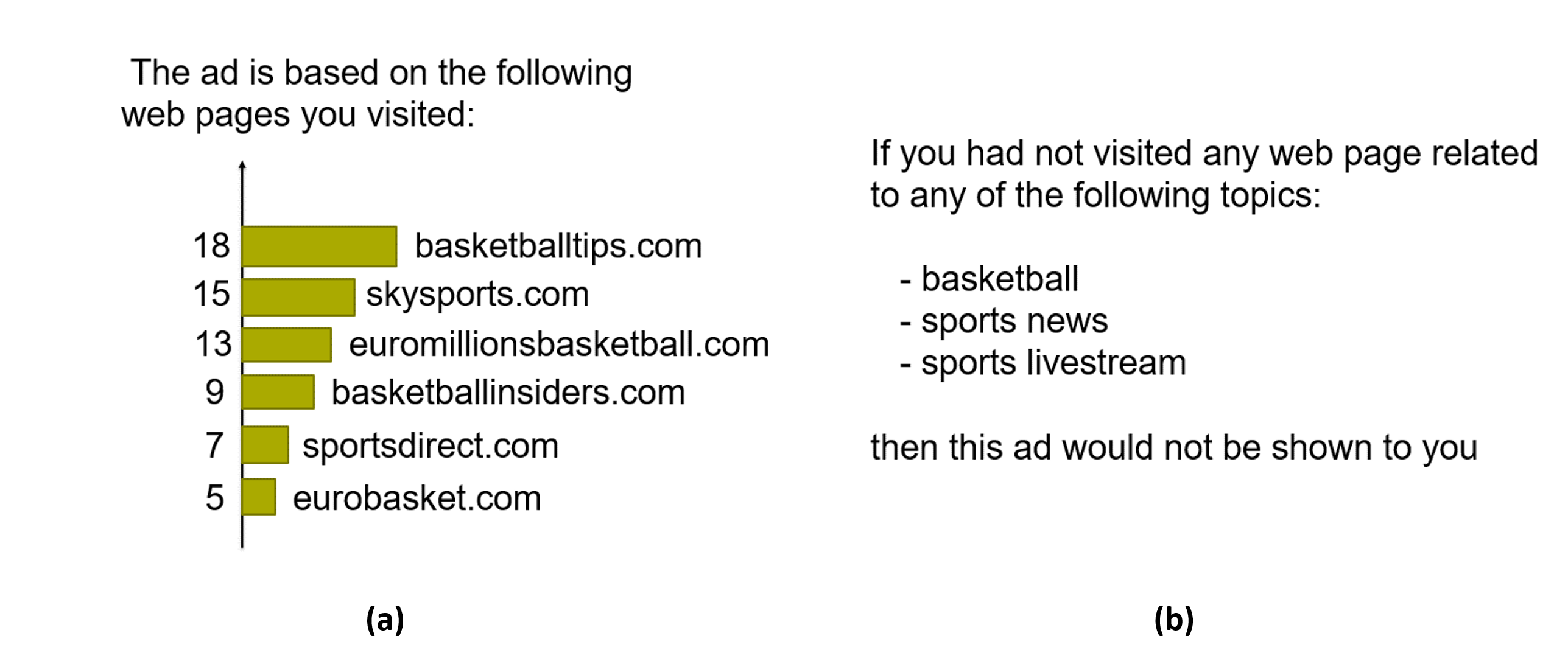

Explaining firm decisions made by algorithms in customer-facing applications is increasingly required by regulators and expected by customers. While the emerging field of Explainable Artificial Intelligence (XAI) has mainly focused on developing algorithms that generate such explanations, there has not yet been sufficient consideration of customers' preferences for various types and formats of explanations. We discuss theoretically and study empirically people's preferences for explanations of algorithmic decisions. We focus on three main attributes that describe automatically generated explanations from existing XAI algorithms (format, complexity, and specificity), and capture differences across contexts (online targeted advertising vs. loan applications) as well as heterogeneity in users' cognitive styles. Despite their popularity among academics, we find that counterfactual explanations are not popular among users unless they follow a negative outcome (e.g., a loan application was denied). We also find that users are willing to tolerate some complexity in explanations. Finally, our results suggest that preferences for specific (vs. more abstract) explanations are related to the level at which the decision is construed by the user, and to the deliberateness of the user's cognitive style.
