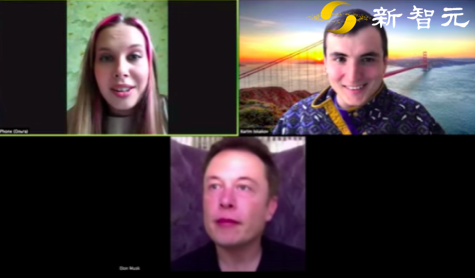

Invite Elon Musk to your Zoom meeting: real-time face swapping in one click, and no more worrying about security holes!

Reported by Xinzhiyuan (新智元)

Source: GitHub

Editor: Bai Feng (白峰)

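[Xinzhiyuan summary] In response to Zoom's recent privacy-leak problems, an overseas developer has open-sourced his avatar project on GitHub, making it possible to appear as an avatar in the Zoom video-calling app. The core component is the model from a NeurIPS (NIPS) 2019 paper, which animates a still image with the motion captured in a live video stream, so you can switch avatars in real time.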

Let Elon Musk join your Zoom meeting

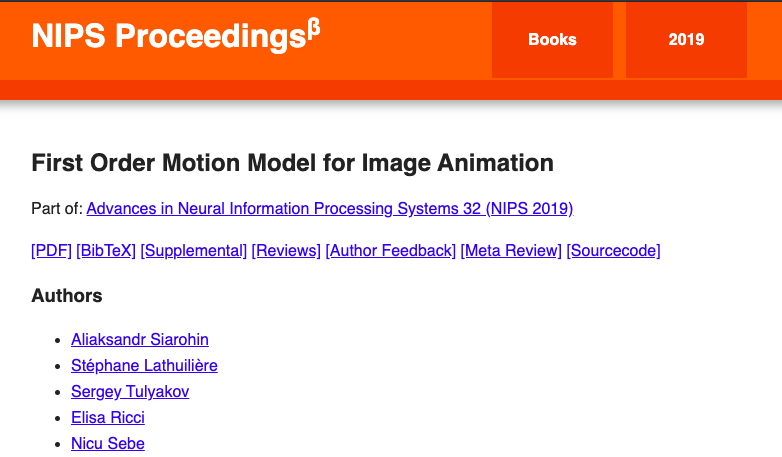

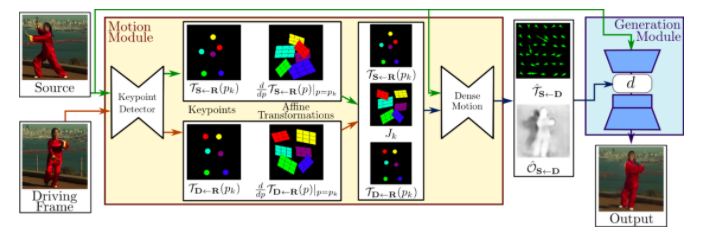

The project's core component: the First Order Motion Model

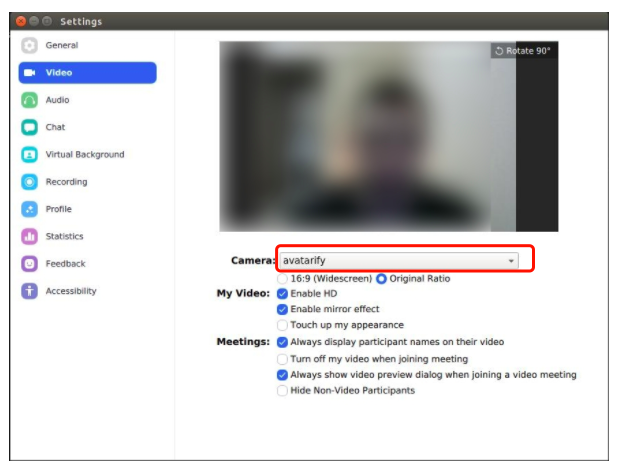
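The "first order" in the model's name refers to a first-order Taylor expansion. Around each learned keypoint, the motion that maps driving-frame coordinates back into the source image is approximated by an affine map T(z) ≈ T(p_k) + J_k (z − p_k), where p_k is the keypoint in the driving frame, T(p_k) is where it sits in the source, and J_k is a 2×2 Jacobian that the keypoint detector predicts alongside each keypoint. The numpy sketch below evaluates that local approximation for a single keypoint. The function name `local_affine_warp` and all numbers are illustrative assumptions; the real model blends many such local maps using learned masks and then renders the result with an occlusion-aware generator.

```python
import numpy as np


def local_affine_warp(z, p_k, t_pk, jac_k):
    """First-order (affine) approximation of a warp T around keypoint p_k.

    z:     (N, 2) query coordinates near p_k
    p_k:   (2,) keypoint position in the driving frame
    t_pk:  (2,) T(p_k), the keypoint's position in the source image
    jac_k: (2, 2) Jacobian of T at p_k
    Returns T(p_k) + J_k (z - p_k) for every row of z.
    """
    # Row-vector form of J_k @ (z - p_k), applied to all N points at once
    return t_pk + (z - p_k) @ jac_k.T


# Illustrative values: the keypoint maps to a point 0.1 to its right, and
# its neighbourhood is rotated by 90 degrees counter-clockwise.
p_k = np.array([0.0, 0.0])
t_pk = np.array([0.1, 0.0])
rot90 = np.array([[0.0, -1.0],
                  [1.0, 0.0]])
z = np.array([[0.0, 0.0],
              [0.05, 0.0]])

print(local_affine_warp(z, p_k, t_pk, rot90))
```

Here the keypoint itself lands at (0.1, 0) and the point 0.05 to its right lands at (0.1, 0.05): the whole neighbourhood is translated and rotated together, which a single displacement vector per keypoint could not express.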

How to use an avatar in a Zoom meeting

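```python
import yaml
import torch

# These modules come from the first-order-model code
# (https://github.com/AliaksandrSiarohin/first-order-model) that avatarify builds on.
from modules.generator import OcclusionAwareGenerator
from modules.keypoint_detector import KPDetector
from sync_batchnorm import DataParallelWithCallback


def load_checkpoints(config_path, checkpoint_path, device='cuda'):
    """Build the generator and keypoint detector and load pretrained weights."""
    with open(config_path) as f:
        # yaml.load() without an explicit Loader is deprecated (an error in PyYAML >= 6)
        config = yaml.safe_load(f)

    generator = OcclusionAwareGenerator(**config['model_params']['generator_params'],
                                        **config['model_params']['common_params'])
    generator.to(device)

    kp_detector = KPDetector(**config['model_params']['kp_detector_params'],
                             **config['model_params']['common_params'])
    kp_detector.to(device)

    # map_location lets a GPU-trained checkpoint load onto the chosen device
    checkpoint = torch.load(checkpoint_path, map_location=device)
    generator.load_state_dict(checkpoint['generator'])
    kp_detector.load_state_dict(checkpoint['kp_detector'])

    generator = DataParallelWithCallback(generator)
    kp_detector = DataParallelWithCallback(kp_detector)

    # Inference mode: disables dropout and freezes batch-norm statistics
    generator.eval()
    kp_detector.eval()

    return generator, kp_detector
```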

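```python
import numpy as np
from scipy.spatial import ConvexHull


def normalize_alignment_kp(kp):
    """Remove translation and scale from a set of facial landmarks.

    kp: float array of shape (num_points, 2) or (num_points, 3);
        x and y are rescaled, a z column (if present) is only centered.
    """
    # Center the landmarks on their mean (removes translation)
    kp = kp - kp.mean(axis=0, keepdims=True)
    # For 2-D points, ConvexHull.volume is the enclosed area
    area = ConvexHull(kp[:, :2]).volume
    area = np.sqrt(area)
    # Divide by sqrt(area) so that faces of different sizes become comparable
    kp[:, :2] = kp[:, :2] / area
    return kp
```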
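`normalize_alignment_kp` centers the landmarks on their mean and divides x and y by the square root of their convex-hull area, so the result no longer depends on where the face sits in the frame or how large it appears. The sketch below checks that property on a random stand-in for 68 facial landmarks and a copy that is three times larger and shifted. To keep it dependent on numpy only, the hypothetical helper `hull_area` replaces SciPy's `ConvexHull(...).volume` (the enclosed area for 2-D points) with Andrew's monotone-chain hull plus the shoelace formula.

```python
import numpy as np


def hull_area(points):
    """Area of the 2-D convex hull of `points` (an (N, 2) array)."""
    pts = sorted(map(tuple, points))

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left (counter-clockwise) turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    # Andrew's monotone chain: build the lower and upper halves of the hull
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = np.array(lower[:-1] + upper[:-1])

    # Shoelace formula over the hull vertices
    x, y = hull[:, 0], hull[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def normalize_landmarks(kp):
    """Same steps as normalize_alignment_kp, using hull_area instead of SciPy."""
    kp = kp - kp.mean(axis=0, keepdims=True)
    kp[:, :2] = kp[:, :2] / np.sqrt(hull_area(kp[:, :2]))
    return kp


rng = np.random.default_rng(0)
face_a = rng.random((68, 2))                      # stand-in for 68 (x, y) landmarks
face_b = 3.0 * face_a + np.array([100.0, 40.0])   # same shape, 3x larger and shifted

diff = np.abs(normalize_landmarks(face_a) - normalize_landmarks(face_b)).max()
print(diff)
```

The printed difference is on the order of floating-point rounding error: after normalization the two landmark sets coincide, so they can be compared directly.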

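```python
import cv2
import numpy as np


def crop(img, p=0.7):
    """Cut a centered square whose side is p times the shorter image side."""
    h, w = img.shape[:2]
    x = int(min(w, h) * p)
    l = (w - x) // 2
    r = w - l
    u = (h - x) // 2
    d = h - u
    return img[u:d, l:r], (l, r, u, d)


def pad_img(img, orig):
    """Pad a 256x256 output back to the aspect ratio of `orig`, then resize to its size."""
    h, w = orig.shape[:2]
    # Horizontal padding only; clamp at 0 so portrait frames (w < h) do not crash np.pad
    pad = max(int(256 * (w / h) - 256), 0)
    # Zero-pad left and right (height and channel axes unchanged)
    out = np.pad(img, [[0, 0], [pad // 2, pad // 2], [0, 0]], 'constant')
    # cv2.resize takes the target size as (width, height)
    out = cv2.resize(out, (w, h))
    return out
```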
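A worked example of the box arithmetic in `crop` and `pad_img`, assuming a 640×480 webcam frame and the default `p=0.7`. The helpers `crop_box` and `pad_width` are hypothetical: they only repeat the integer math of the two functions so it can be checked without OpenCV or a real frame.

```python
def crop_box(w, h, p=0.7):
    """Same box arithmetic as crop(): returns (l, r, u, d)."""
    x = int(min(w, h) * p)
    l = (w - x) // 2
    u = (h - x) // 2
    return l, w - l, u, h - u


def pad_width(w, h, size=256):
    """Total horizontal padding pad_img() computes for a size x size output."""
    return int(size * (w / h) - size)


l, r, u, d = crop_box(640, 480)
print((l, r, u, d), r - l, d - u)   # → (152, 488, 72, 408) 336 336

pad = pad_width(640, 480)
print(pad, 256 + 2 * (pad // 2))    # → 85 340
```

So the model sees the central 336×336 region of the frame (presumably resized to 256×256 before inference, since `pad_img` assumes a 256-pixel output), and the 256×256 result is padded to 340×256 before being stretched back to 640×480. Because the padding is truncated to whole pixels, the restored aspect ratio (340/256 ≈ 1.328) is slightly narrower than 4:3.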

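```python
import numpy as np
import torch

from animate import normalize_kp  # from the first-order-model code

# State shared across calls; setting kp_driving_initial back to None recalibrates.
# generator and kp_detector are the module-level models returned by load_checkpoints().
start_frame = None
start_frame_kp = None
kp_driving_initial = None


def predict(driving_frame, source_image, relative, adapt_movement_scale, fa, device='cuda'):
    """Animate source_image (the avatar) with the motion in driving_frame (the webcam).

    Both images are HxWx3 float arrays in [0, 1] (256x256 for the pretrained model).
    fa is the face landmark detector passed through to get_frame_kp().
    Returns a uint8 image with its channel order reversed (RGB -> BGR for OpenCV).
    """
    global start_frame
    global start_frame_kp
    global kp_driving_initial

    with torch.no_grad():
        # HWC numpy images -> NCHW float tensors
        source = torch.tensor(source_image[np.newaxis].astype(np.float32)).permute(0, 3, 1, 2).to(device)
        driving = torch.tensor(driving_frame[np.newaxis].astype(np.float32)).permute(0, 3, 1, 2).to(device)
        kp_source = kp_detector(source)

        # The first frame seen becomes the reference pose for relative motion
        if kp_driving_initial is None:
            kp_driving_initial = kp_detector(driving)
            start_frame = driving_frame.copy()
            # get_frame_kp() is defined elsewhere in the avatarify source
            start_frame_kp = get_frame_kp(fa, driving_frame)

        kp_driving = kp_detector(driving)
        kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,
                               kp_driving_initial=kp_driving_initial, use_relative_movement=relative,
                               use_relative_jacobian=relative, adapt_movement_scale=adapt_movement_scale)
        out = generator(source, kp_source=kp_source, kp_driving=kp_norm)

        # NCHW tensor -> HWC numpy image
        out = np.transpose(out['prediction'].data.cpu().numpy(), [0, 2, 3, 1])[0]
        out = out[..., ::-1]  # reverse channel order (RGB -> BGR)
        out = (np.clip(out, 0, 1) * 255).astype(np.uint8)

    return out
```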
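With `relative=True`, `predict` transfers motion relative to the first webcam frame: the keypoints of that frame are stored once in `kp_driving_initial`, each later frame contributes only its displacement from them, and that displacement is added to the avatar's own keypoints, so the avatar keeps its face shape and copies only the movement. The numpy sketch below re-implements that idea in simplified form; it is not the first-order-model `normalize_kp` function itself. Assumptions: the hypothetical `relative_kp` takes the two convex-hull areas as arguments instead of computing them, and it always applies the relative Jacobian update (in `predict`, both flags are set from `relative`).

```python
import numpy as np


def relative_kp(src, drv, drv_init, src_area=None, drv_init_area=None):
    """Transfer keypoint motion relative to the first driving frame.

    src, drv, drv_init: dicts with 'value' (K, 2) and 'jacobian' (K, 2, 2)
    src_area, drv_init_area: optional convex-hull areas of the source and
        initial driving keypoints; if both are given, the displacement is
        rescaled by sqrt(src_area) / sqrt(drv_init_area) (adapt_movement_scale).
    """
    scale = 1.0
    if src_area is not None and drv_init_area is not None:
        scale = np.sqrt(src_area) / np.sqrt(drv_init_area)

    # Keypoints: avatar position + (scaled) displacement since the first frame
    value = src['value'] + scale * (drv['value'] - drv_init['value'])

    # Jacobians: apply the change since the first frame to the avatar's Jacobians
    jac_change = drv['jacobian'] @ np.linalg.inv(drv_init['jacobian'])
    jacobian = jac_change @ src['jacobian']
    return {'value': value, 'jacobian': jacobian}


# Two keypoints with identity Jacobians; the face moves 0.05 to the right
eye = np.tile(np.eye(2), (2, 1, 1))
src = {'value': np.array([[0.0, 0.0], [0.5, 0.5]]), 'jacobian': eye}
init = {'value': np.array([[0.1, 0.1], [0.3, 0.3]]), 'jacobian': eye}
drv = {'value': init['value'] + np.array([0.05, 0.0]), 'jacobian': eye}

out = relative_kp(src, drv, init)
print(out['value'])   # avatar keypoints shifted right by 0.05
```

Passing `src_area=4.0, drv_init_area=1.0` doubles the transferred displacement to 0.1, which is how a larger avatar face keeps proportional motion.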

First Order Motion Model for Image Animation (NeurIPS 2019): http://papers.nips.cc/paper/8935-first-order-motion-model-for-image-animation

Project page: https://aliaksandrsiarohin.github.io/first-order-model-website/

avatarify on GitHub: https://github.com/alievk/avatarify
