博客 | 常见32项NLP任务及其评价指标和对应达到SOTA的paper

本文原载于微信公众号:AI部落联盟(AI_Tribe),AI研习社经授权转载。欢迎关注 AI部落联盟 微信公众号、知乎专栏 AI部落、及 AI研习社博客专栏。

社长提醒:本文的相关链接请点击文末【阅读原文】进行查看

对于初学NLP的人,了解NLP的各项技术非常重要;对于想进阶的人,了解各项技术的评测指标、数据集很重要;对于想做学术和研究的人,了解各项技术在对应的评测数据集上达到SOTA效果的Paper非常重要,因为了解评测数据集、评测指标和目前最好的结果是NLP研究工作的基础。因此,本文整理了常见的32项NLP任务以及对应的评测数据、评测指标、目前的SOTA结果以及对应的Paper。

1. 先来看下按粒度对NLP任务进行划分:词粒度、短语粒度、句子粒度、篇章粒度以及对应的一些主要任务。以便于初学者能明确这些NLP基础任务之间的关系。

2. 再来看看周明老师按基础任务、核心任务对NLP的划分(http://zhigu.news.cn/2017-06/08/c_129628590.htm)。

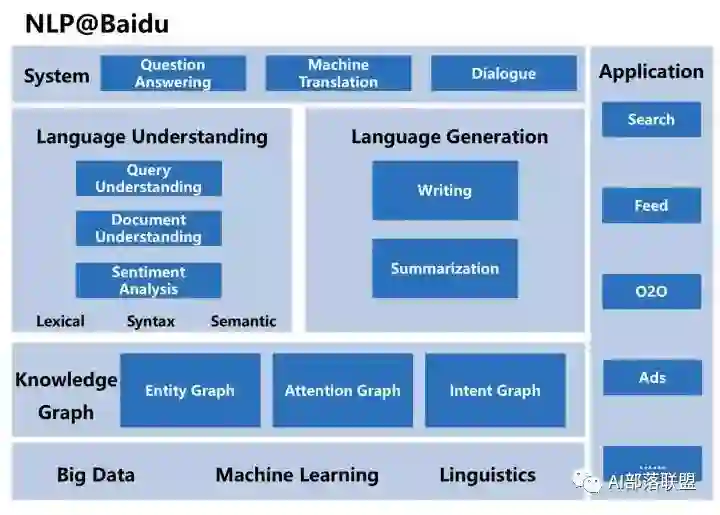

3. 再来看看王海峰老师在AAAI2017的关于百度NLP的keynote(http://www.aaai.org/Conferences/AAAI/2017/aaai17inpractice.php),主要是让大家明白NLP的基础、技术、应用之间的关系。

4. 常见的32项NLP任务以及对应的评测数据、评测指标、目前的SOTA结果以及对应的Paper。

任务 |

描述 |

corpus/dataset |

评价指标 |

SOTA 结果 |

Papers |

Chunking |

组块分析 |

Penn Treebank |

F1 |

95.77 |

A Joint Many-Task Model: Growing a Neural Network for Multiple NLP Tasks |

Common sense reasoning |

常识推理 |

Event2Mind |

cross-entropy |

4.22 |

Event2Mind: Commonsense Inference on Events, Intents, and Reactions |

Parsing |

句法分析 |

Penn Treebank |

F1 |

95.13 |

Constituency Parsing with a Self-Attentive Encoder |

Coreference resolution |

指代消解 |

CoNLL 2012 |

average F1 |

73 |

Higher-order Coreference Resolution with Coarse-to-fine Inference |

Dependency parsing |

依存句法分析 |

Penn Treebank |

POS UAS LAS |

97.3 95.44 93.76 |

Deep Biaffine Attention for Neural Dependency Parsing |

Task-Oriented Dialogue/Intent Detection |

任务型对话/意图识别 |

ATIS/Snips |

accuracy |

94.1 97.0 |

Slot-Gated Modeling for Joint Slot Filling and Intent Prediction |

Task-Oriented Dialogue/Slot Filling |

任务型对话/槽填充 |

ATIS/Snips |

F1 |

95.2 88.8 |

Slot-Gated Modeling for Joint Slot Filling and Intent Prediction |

Task-Oriented Dialogue/Dialogue State Tracking |

任务型对话/状态追踪 |

DSTC2 |

Area Food Price Joint |

90 84 92 72 |

Dialogue Learning with Human Teaching and Feedback in End-to-End Trainable Task-Oriented Dialogue Systems |

Domain adaptation |

领域适配 |

Multi-Domain Sentiment Dataset |

average accuracy |

79.15 |

Strong Baselines for Neural Semi-supervised Learning under Domain Shift |

Entity Linking |

实体链接 |

AIDA CoNLL-YAGO |

Micro-F1-strong Macro-F1-strong |

86.6 89.4 |

End-to-End Neural Entity Linking |

Information Extraction |

信息抽取 |

ReVerb45K |

Precision Recall F1 |

62.7 84.4 81.9 |

CESI: Canonicalizing Open Knowledge Bases using Embeddings and Side Information |

Grammatical Error Correction |

语法错误纠正 |

JFLEG |

GLEU |

61.5 |

Near Human-Level Performance in Grammatical Error Correction with Hybrid Machine Translation |

Language modeling |

语言模型 |

Penn Treebank |

Validation perplexity Test perplexity |

48.33 47.69 |

Breaking the Softmax Bottleneck: A High-Rank RNN Language Model |

Lexical Normalization |

词汇规范化 |

LexNorm2015 |

F1 Precision Recall |

86.39 93.53 80.26 |

MoNoise: Modeling Noise Using a Modular Normalization System |

Machine translation |

机器翻译 |

WMT 2014 EN-DE |

BLEU |

35.0 |

Understanding Back-Translation at Scale |

Multimodal Emotion Recognition |

多模态情感识别 |

IEMOCAP |

Accuracy |

76.5 |

Multimodal Sentiment Analysis using Hierarchical Fusion with Context Modeling |

Multimodal Metaphor Recognition |

多模态隐喻识别 |

verb-noun pairs adjective-noun pairs |

F1 |

0.75 0.79 |

Black Holes and White Rabbits: Metaphor Identification with Visual Features |

Multimodal Sentiment Analysis |

多模态情感分析 |

MOSI |

Accuracy |

80.3 |

Context-Dependent Sentiment Analysis in User-Generated Videos |

Named entity recognition |

命名实体识别 |

CoNLL 2003 |

F1 |

93.09 |

Contextual String Embeddings for Sequence Labeling |

Natural language inference |

自然语言推理 |

SciTail |

Accuracy |

88.3 |

Improving Language Understanding by Generative Pre-Training |

Part-of-speech tagging |

词性标注 |

Penn Treebank |

Accuracy |

97.96 |

Morphosyntactic Tagging with a Meta-BiLSTM Model over Context Sensitive Token Encodings |

Question answering |

问答 |

CliCR |

F1 |

33.9 |

CliCR: A Dataset of Clinical Case Reports for Machine Reading Comprehension |

Word segmentation |

分词 |

VLSP 2013 |

F1 |

97.90 |

A Fast and Accurate Vietnamese Word Segmenter |

Word Sense Disambiguation |

词义消歧 |

SemEval 2015 |

F1 |

67.1 |

Word Sense Disambiguation: A Unified Evaluation Framework and Empirical Comparison |

Text classification |

文本分类 |

AG News |

Error rate |

5.01 |

Universal Language Model Fine-tuning for Text Classification |

Summarization |

摘要 |

Gigaword |

ROUGE-1 ROUGE-2 ROUGE-L |

37.04 19.03 34.46 |

Retrieve, Rerank and Rewrite: Soft Template Based Neural Summarization |

Sentiment analysis |

情感分析 |

IMDb |

Accuracy |

95.4 |

Universal Language Model Fine-tuning for Text Classification |

Semantic role labeling |

语义角色标注 |

OntoNotes |

F1 |

85.5 |

Jointly Predicting Predicates and Arguments in Neural Semantic Role Labeling |

Semantic parsing |

语义解析 |

LDC2014T12 |

F1 Newswire F1 Full |

0.71 0.66 |

AMR Parsing with an Incremental Joint Model |

Semantic textual similarity |

语义文本相似度 |

SentEval |

MRPC SICK-R SICK-E STS |

78.6/84.4 0.888 87.8 78.9/78.6 |

Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning |

Relationship Extraction |

关系抽取 |

New York Times Corpus |

P@10% P@30% |

73.6 59.5 |

RESIDE: Improving Distantly-Supervised Neural Relation Extraction using Side Information |

Relation Prediction |

关系预测 |

WN18RR |

H@10 H@1 MRR |

59.02 45.37 49.83 |

Predicting Semantic Relations using Global Graph Properties |

点击 阅读原文 ,查看本文更多内容↙