[Thought Experiment] What If the BERT Family of Papers Were a Commit History?

Source: NewNeeNLP

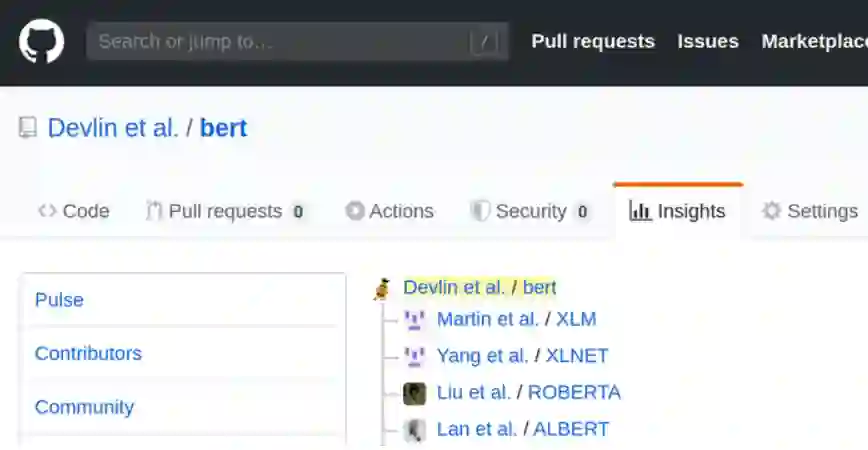

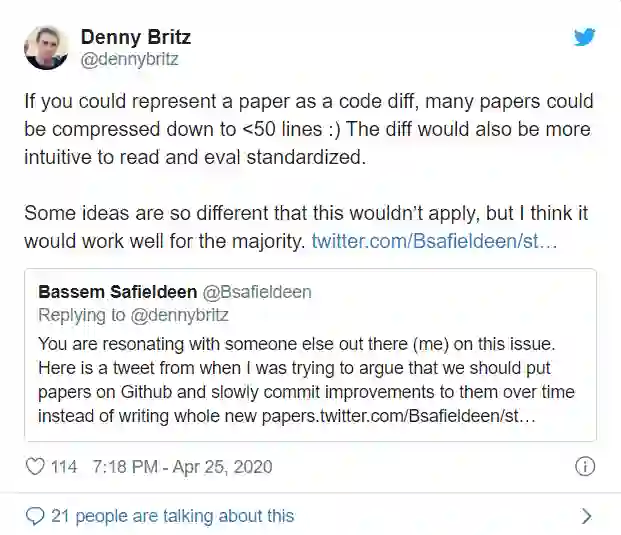

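I recently came across a fun thread on Twitter. Imagine that research papers were published on GitHub, and that each follow-up paper were submitted as a commit recording only what it changes relative to the original. Information overload has long been a major problem in AI and machine learning, with a large number of new papers appearing every month, so presenting the literature as a commit history may be a refreshing way to see what each paper actually changed.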

commit arXiv:1810.04805

Author: Devlin et al.

Date: Thu Oct 11 00:50:01 2018 +0000

Initial Commit: BERT

-Transformer Decoder

+Masked Language Modeling

+Next Sentence Prediction

+WordPiece 30K
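As a rough illustration of the `+Masked Language Modeling` line above, here is a minimal sketch of BERT-style token corruption: about 15% of positions are chosen for prediction, and a chosen token is replaced by `[MASK]` 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. The function name `mask_tokens`, the toy vocabulary, and the per-token coin flip (rather than selecting an exact 15% of positions) are assumptions made for this example, not the authors' implementation.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted, labels); labels[i] is None where nothing is predicted."""
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Each position is selected independently (a simplification).
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels

if __name__ == "__main__":
    toy_vocab = ["the", "cat", "sat", "on", "mat"]  # hypothetical vocabulary
    sentence = ["the", "cat", "sat", "on", "the", "mat"]
    print(mask_tokens(sentence, toy_vocab, random.Random(0)))
```

Positions whose label is `None` contribute nothing to the loss; only the selected positions are predicted.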

commit arXiv:1901.07291

Author: Lample and Conneau

Date: Tue Jan 22 2019

Cross-lingual Language Model Pretraining

+Translation Language Modeling (TLM)

+Causal Language Modeling (CLM)

commit arXiv:1906.08237

Author: Yang et al.

Date: Wed Jun 19 17:35:48 2019 +0000

XLNet: Generalized Autoregressive Pretraining for Language Understanding

-Masked Language Modeling

-BERT Transformer

+Permutation Language Modeling

+Transformer-XL

+Two-stream self-attention

commit arXiv:1907.10529

Author: Joshi et al.

Date: Wed Jul 24 15:43:40 2019 +0000

SpanBERT: Improving Pre-training by Representing and Predicting Spans

-Random Token Masking

-Next Sentence Prediction

-Bi-sequence Training

+Contiguous Span Masking

+Span-Boundary Objective (SBO)

+Single-Sequence Training

commit arXiv:1907.11692

Author: Liu et al.

Date: Fri Jul 26 17:48:29 2019 +0000

RoBERTa: A Robustly Optimized BERT Pretraining Approach

-Next Sentence Prediction

-Static Masking of Tokens

+Dynamic Masking of Tokens

+Byte Pair Encoding (BPE) 50K

+Large batch size

+CC-NEWS dataset
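The swap from `-Static Masking of Tokens` to `+Dynamic Masking of Tokens` can be sketched as follows. With static masking the mask pattern is fixed once during preprocessing and reused on every pass over the data; with dynamic masking a fresh pattern is sampled each time a sequence is fed to the model. The helper names, the 15% rate, and the single-mask simplification of static masking are assumptions for illustration only.

```python
import random

def sample_mask(length, rng, mask_prob=0.15):
    """Sample one boolean mask pattern; True marks a position to predict."""
    return [rng.random() < mask_prob for _ in range(length)]

def static_masks(length, epochs, seed=0):
    """Static masking: one pattern is sampled up front and reused every epoch."""
    mask = sample_mask(length, random.Random(seed))
    return [mask for _ in range(epochs)]

def dynamic_masks(length, epochs, seed=0):
    """Dynamic masking: a new pattern is sampled for every epoch."""
    rng = random.Random(seed)
    return [sample_mask(length, rng) for _ in range(epochs)]

if __name__ == "__main__":
    for name, masks in (("static", static_masks(12, 3)),
                        ("dynamic", dynamic_masks(12, 3))):
        print(name)
        for epoch_mask in masks:
            print("".join("M" if m else "." for m in epoch_mask))
```

The practical effect is that, over many epochs, the model sees the same sentence with different tokens hidden instead of memorizing one fixed corruption.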

commit arXiv:1908.10084

Author: Reimers and Gurevych

Date: Tue Aug 27 08:50:17 2019 +0000

Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks

+Siamese Network Structure

+Finetuning on SNLI and MNLI

commit arXiv:1909.11942

Author: Lan et al.

Date: Thu Sep 26 07:06:13 2019 +0000

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

-Next Sentence Prediction

+Sentence Order Prediction

+Cross-layer Parameter Sharing

+Factorized Embeddings
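To see why `+Factorized Embeddings` saves parameters, compare a single V × H embedding matrix with the factorized pair V × E and E × H. The sketch below just does the arithmetic; the sizes V = 30,000, H = 768, and E = 128 are illustrative assumptions (the 30K figure echoes the WordPiece vocabulary in the first commit), and `embedding_params` is a hypothetical helper.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters, optionally with a factorized embedding.

    Unfactorized: one vocab_size x hidden_size matrix.
    Factorized:   vocab_size x embedding_size, then a projection
                  embedding_size x hidden_size.
    """
    if embedding_size is None:
        return vocab_size * hidden_size
    return vocab_size * embedding_size + embedding_size * hidden_size

if __name__ == "__main__":
    full = embedding_params(30_000, 768)           # 23,040,000
    factored = embedding_params(30_000, 768, 128)  # 3,938,304
    print(full, factored)
```

Under these assumed sizes the embedding table shrinks by roughly a factor of six, and the saving grows as the hidden size H increases while E stays fixed.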

commit arXiv:1910.01108

Author: Sanh et al.

Date: Wed Oct 2 17:56:28 2019 +0000

DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter

-Next Sentence Prediction

-Token-Type Embeddings

-[CLS] pooling

+Knowledge Distillation

+Cosine Embedding Loss

+Dynamic Masking

commit arXiv:1911.03894

Author: Martin et al.

Date: Sun Nov 10 10:46:37 2019 +0000

CamemBERT: a Tasty French Language Model

-BERT

-English

+RoBERTa

+French OSCAR dataset (138GB)

+Whole-word Masking (WWM)

+SentencePiece Tokenizer

commit arXiv:1912.05372

Author: Le et al.

Date: Wed Dec 11 14:59:32 2019 +0000

FlauBERT: Unsupervised Language Model Pre-training for French

-BERT

-English

+RoBERTa

+fastBPE

+Stochastic Depth

+French dataset (71GB)

+FLUE (French Language Understanding Evaluation) benchmark