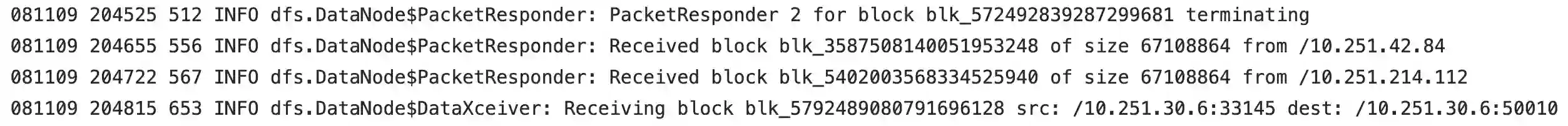

Trust, privacy, and interpretability have emerged as significant concerns for experts deploying deep learning models for security monitoring. Because of their black-box nature, these models cannot provide an intuitive understanding of their predictions, which is crucial in several decision-making applications, such as anomaly detection. Security operations centers run a number of security monitoring tools that analyze logs and generate threat alerts for security analysts to inspect. These alerts lack sufficient explanation of why they were raised or of the context in which they occurred. Existing explanation methods for security also suffer from low fidelity and low stability, and they ignore privacy concerns. Explanations are nonetheless highly desirable; we therefore systematize knowledge on explanation models so that they can ensure trust and privacy in security monitoring. Through our collaborative study of security operations centers, security monitoring tools, and explanation techniques, we discuss the strengths and concerns of existing methods vis-à-vis applications such as security log analysis. We present a pipeline for designing interpretable and privacy-preserving system monitoring tools. Additionally, we define and propose quantitative metrics to evaluate methods in explainable security. Finally, we discuss open challenges and list promising research directions for further exploration.
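For readers unfamiliar with the two properties that existing explanation methods are said to lack, the following is a minimal Python sketch of two common ways to quantify them. It is not the metric set proposed in this work. Fidelity is approximated here by a deletion test (how much a model's score drops when the top-k attributed features are zeroed out), and stability by the mean pairwise overlap of the top-k features across repeated explanation runs on the same input. The toy linear model, the function names, and the use of zero as the "removed" feature value are illustrative assumptions.

```python
from itertools import combinations
from typing import Callable, List, Sequence, Set


def top_k_features(attributions: Sequence[float], k: int) -> Set[int]:
    """Indices of the k features with the largest absolute attribution."""
    ranked = sorted(range(len(attributions)),
                    key=lambda i: abs(attributions[i]), reverse=True)
    return set(ranked[:k])


def deletion_fidelity(model: Callable[[List[float]], float],
                      x: Sequence[float],
                      attributions: Sequence[float],
                      k: int) -> float:
    """Drop in the model score after zeroing the k most relevant features.

    A faithful explanation should point at features whose removal
    causes a large drop (assumption: 0.0 acts as a neutral value).
    """
    masked = list(x)
    for i in top_k_features(attributions, k):
        masked[i] = 0.0
    return model(list(x)) - model(masked)


def stability(runs: Sequence[Sequence[float]], k: int) -> float:
    """Mean pairwise top-k overlap (|A & B| / k) across explanation runs.

    1.0 means every run selected the same top-k features.
    """
    sets = [top_k_features(r, k) for r in runs]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 1.0
    return sum(len(a & b) / k for a, b in pairs) / len(pairs)


# Hypothetical toy "detector": a linear score over four log features.
WEIGHTS = [2.0, 0.5, 1.5, 0.1]


def toy_model(x: List[float]) -> float:
    return sum(w * v for w, v in zip(WEIGHTS, x))


if __name__ == "__main__":
    x = [1.0, 1.0, 1.0, 1.0]
    # Gradient-times-input attributions for a linear model equal w * x.
    attr = [w * v for w, v in zip(WEIGHTS, x)]
    print("fidelity drop:", deletion_fidelity(toy_model, x, attr, k=2))

    # Three hypothetical runs of a non-deterministic explainer.
    runs = [[0.9, 0.1, -0.8, 0.05],
            [0.7, 0.2, -0.9, 0.0],
            [0.1, 0.95, -0.6, 0.3]]
    print("stability:", stability(runs, k=2))
```

In this toy setting the first two runs agree on features 0 and 2 while the third swaps in feature 1, so stability falls below 1.0; a perfectly stable explainer would score 1.0 regardless of which features it selects.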
