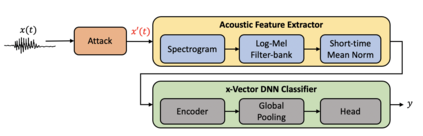

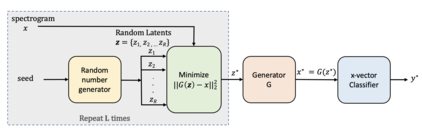

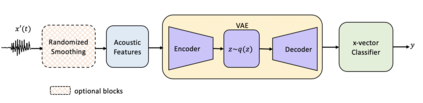

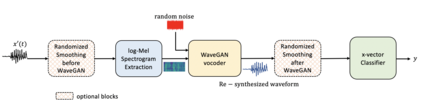

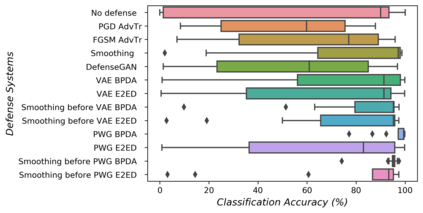

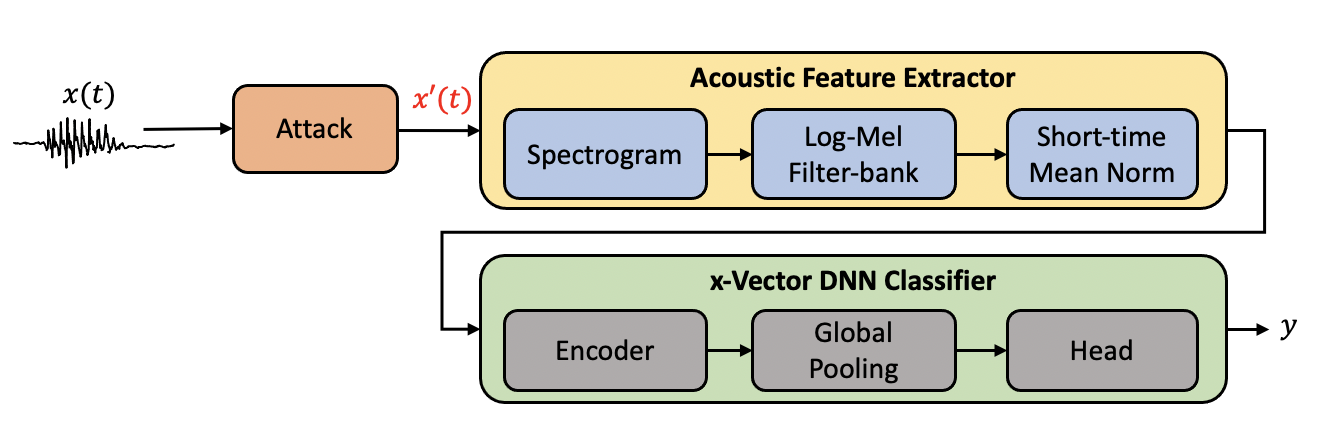

Adversarial examples to speaker recognition (SR) systems are generated by adding a carefully crafted noise to the speech signal to make the system fail while being imperceptible to humans. Such attacks pose severe security risks, making it vital to deep-dive and understand how much the state-of-the-art SR systems are vulnerable to these attacks. Moreover, it is of greater importance to propose defenses that can protect the systems against these attacks. Addressing these concerns, this paper at first investigates how state-of-the-art x-vector based SR systems are affected by white-box adversarial attacks, i.e., when the adversary has full knowledge of the system. x-Vector based SR systems are evaluated against white-box adversarial attacks common in the literature like fast gradient sign method (FGSM), basic iterative method (BIM)--a.k.a. iterative-FGSM--, projected gradient descent (PGD), and Carlini-Wagner (CW) attack. To mitigate against these attacks, the paper proposes four pre-processing defenses. It evaluates them against powerful adaptive white-box adversarial attacks, i.e., when the adversary has full knowledge of the system, including the defense. The four pre-processing defenses--viz. randomized smoothing, DefenseGAN, variational autoencoder (VAE), and Parallel WaveGAN vocoder (PWG) are compared against the baseline defense of adversarial training. Conclusions indicate that SR systems were extremely vulnerable under BIM, PGD, and CW attacks. Among the proposed pre-processing defenses, PWG combined with randomized smoothing offers the most protection against the attacks, with accuracy averaging 93% compared to 52% in the undefended system and an absolute improvement >90% for BIM attacks with $L_\infty>0.001$ and CW attack.

翻译:通过在语音信号上添加一个精心设计的噪音,使系统在人类无法察觉的情况下失灵,从而产生声频识别系统。这些攻击构成了严重的安全风险,使得对深海而言至关重要,并了解最先进的SR系统在多大程度上容易受到这些攻击。此外,提出能够保护这些系统免遭这些攻击的防御系统更为重要。针对这些关切,本文件首先调查了如何受到白箱对抗性攻击的影响,即当对手完全了解系统时,使系统失灵。XVC基于SR的白箱对抗性攻击,例如快速梯度标志法(FGSM)、基本迭代方法(BIM)-a.k.a.