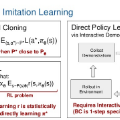

Generative model-based imitation learning methods have recently achieved strong results in learning high-complexity motor skills from human demonstrations. However, imitation learning of interactive policies that coordinate with humans in shared spaces without explicit communication remains challenging, due to the significantly higher behavioral complexity in multi-agent interactions compared to non-interactive tasks. In this work, we introduce a structured imitation learning framework for interactive policies by combining generative single-agent policy learning with a flexible yet expressive game-theoretic structure. Our method explicitly separates learning into two steps: first, we learn individual behavioral patterns from multi-agent demonstrations using standard imitation learning; then, we structurally learn inter-agent dependencies by solving an inverse game problem. Preliminary results in a synthetic 5-agent social navigation task show that our method significantly improves non-interactive policies and performs comparably to the ground truth interactive policy using only 50 demonstrations. These results highlight the potential of structured imitation learning in interactive settings.
