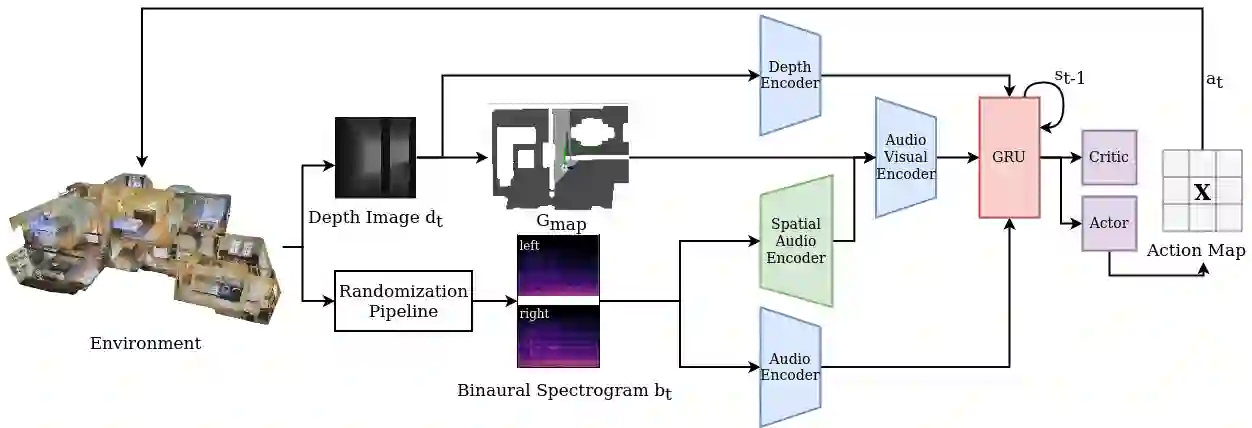

Audio-visual navigation combines sight and hearing to navigate to a sound-emitting source in an unmapped environment. While recent approaches have demonstrated the benefits of audio input for detecting and finding the goal, they focus on clean, static sound sources and struggle to generalize to unheard sounds. In this work, we propose a novel dynamic audio-visual navigation benchmark, which requires catching a moving sound source in an environment with noisy and distracting sounds and thereby poses a range of new challenges. We introduce a reinforcement learning approach that learns a robust navigation policy for these complex settings. To achieve this, we propose an architecture that fuses audio-visual information in the spatial feature space to learn correlations of the geometric information inherent in both local maps and audio signals. We demonstrate that our approach consistently outperforms the current state of the art by a large margin across all tasks (moving sounds, unheard sounds, and noisy environments) on two challenging 3D-scanned real-world environments, namely Matterport3D and Replica. The benchmark is available at http://dav-nav.cs.uni-freiburg.de.
