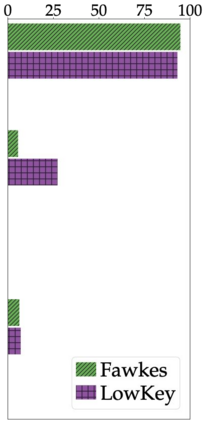

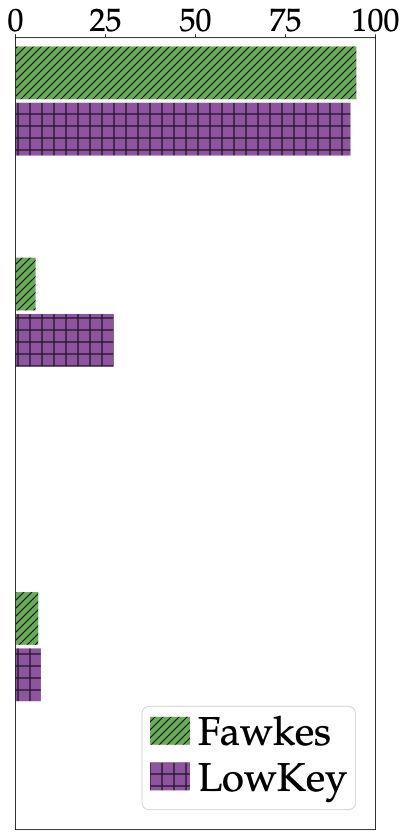

Data poisoning has been proposed as a compelling defense against facial recognition models trained on Web-scraped pictures. By perturbing the images they post online, users can fool models into misclassifying future (unperturbed) pictures. We demonstrate that this strategy provides a false sense of security, as it ignores an inherent asymmetry between the parties: users' pictures are perturbed once and for all before being published (at which point they are scraped) and must thereafter fool all future models -- including models trained adaptively against the users' past attacks, or models that use technologies discovered after the attack. We evaluate two systems for poisoning attacks against large-scale facial recognition, Fawkes (500,000+ downloads) and LowKey. We demonstrate how an "oblivious" model trainer can simply wait for future developments in computer vision to nullify the protection of pictures collected in the past. We further show that an adversary with black-box access to the attack can (i) train a robust model that resists the perturbations of collected pictures and (ii) detect poisoned pictures uploaded online. We caution that facial recognition poisoning will not admit an "arms race" between attackers and defenders. Once perturbed pictures are scraped, the attack cannot be changed, so any future successful defense irrevocably undermines users' privacy.
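To make point (ii) concrete, the following is a minimal toy sketch of how black-box access to an attack could be enough to build a detector. It is not the paper's method and does not model Fawkes or LowKey: "images" are random Gaussian vectors, the hypothetical `black_box_poison` function adds a fixed hidden pattern (real poisoning tools compute image-dependent perturbations), and the detector is a simple nearest-class-mean linear rule. All names and constants are assumptions chosen for illustration.

```python
import random

DIM = 64    # toy "image" dimensionality (assumption)
EPS = 0.75  # toy per-coordinate perturbation size (assumption)

rng = random.Random(0)
# Hidden pattern standing in for the attack's internals.
# The trainer never reads it; it only calls black_box_poison().
_HIDDEN_PATTERN = [rng.choice((-1.0, 1.0)) for _ in range(DIM)]


def sample_clean_image(rng):
    """Toy stand-in for an unperturbed picture."""
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def black_box_poison(image):
    """Hypothetical poisoning tool, used only as a black box."""
    return [x + EPS * s for x, s in zip(image, _HIDDEN_PATTERN)]


def mean_vector(images):
    n = len(images)
    return [sum(column) / n for column in zip(*images)]


def fit_detector(clean, poisoned):
    """Nearest-class-mean linear detector; returns (weights, bias)."""
    mu_clean = mean_vector(clean)
    mu_poisoned = mean_vector(poisoned)
    weights = [p - c for p, c in zip(mu_poisoned, mu_clean)]
    midpoint = [(p + c) / 2 for p, c in zip(mu_poisoned, mu_clean)]
    bias = -sum(w * m for w, m in zip(weights, midpoint))
    return weights, bias


def is_poisoned(detector, image):
    weights, bias = detector
    return sum(w * x for w, x in zip(weights, image)) + bias > 0


def detection_accuracy(detector, clean, poisoned):
    correct = sum(not is_poisoned(detector, x) for x in clean)
    correct += sum(is_poisoned(detector, x) for x in poisoned)
    return correct / (len(clean) + len(poisoned))


# Trainer's side: run the attack on pictures it already holds
# to obtain labeled clean/poisoned pairs, then fit the detector.
train_clean = [sample_clean_image(rng) for _ in range(500)]
train_poisoned = [black_box_poison(x) for x in train_clean]
detector = fit_detector(train_clean, train_poisoned)

# Held-out pictures the detector has never seen.
test_clean = [sample_clean_image(rng) for _ in range(200)]
test_poisoned = [black_box_poison(sample_clean_image(rng)) for _ in range(200)]
accuracy = detection_accuracy(detector, test_clean, test_poisoned)
print(f"held-out detection accuracy: {accuracy:.2f}")
```

The point of the sketch is the asymmetry described above: the trainer can query the attack as often as it likes after the fact, while pictures that were already scraped cannot be re-perturbed in response.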
