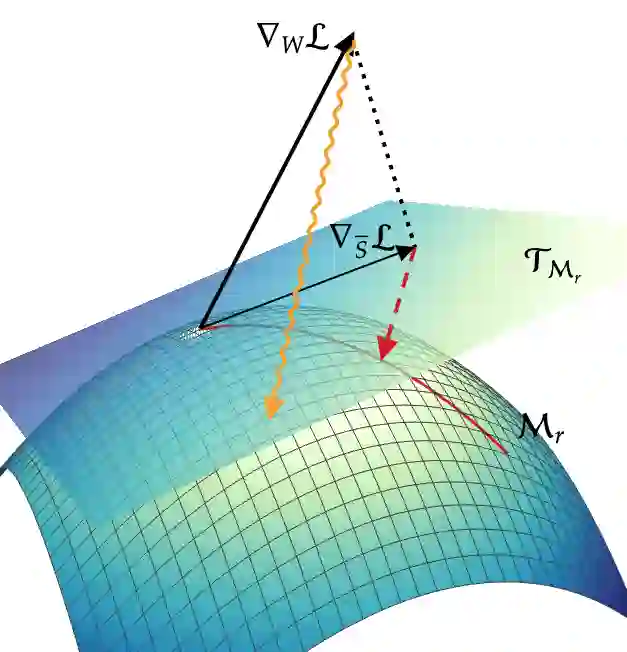

Low-rank pre-training and fine-tuning have recently emerged as promising techniques for reducing the computational and storage costs of large neural networks. Training low-rank parameterizations typically relies on conventional optimizers such as heavy ball momentum methods or Adam. In this work, we identify and analyze potential difficulties that these training methods encounter when used to train low-rank parameterizations of weights. In particular, we show that classical momentum methods can struggle to converge to a local optimum due to the geometry of the underlying optimization landscape. To address this, we introduce novel training strategies derived from dynamical low-rank approximation, which explicitly account for the underlying geometric structure. Our approach leverages and combines tools from dynamical low-rank approximation and momentum-based optimization to design optimizers that respect the intrinsic geometry of the parameter space. We validate our methods through numerical experiments, demonstrating faster convergence, and stronger validation metrics at given parameter budgets.

翻译:低秩预训练与微调技术近期已成为降低大型神经网络计算与存储开销的有效方法。训练低秩参数化模型通常依赖于传统优化器,如重球动量方法或Adam。本研究识别并分析了这些训练方法在用于权重低秩参数化时可能遇到的困难。特别地,我们证明经典动量方法可能因底层优化空间的几何特性而难以收敛至局部最优解。为解决此问题,我们提出了基于动态低秩近似的新型训练策略,其显式考虑了底层几何结构。该方法融合动态低秩近似与动量优化的工具,设计了尊重参数空间本征几何特性的优化器。通过数值实验验证,我们的方法在给定参数预算下实现了更快的收敛速度与更强的验证指标。