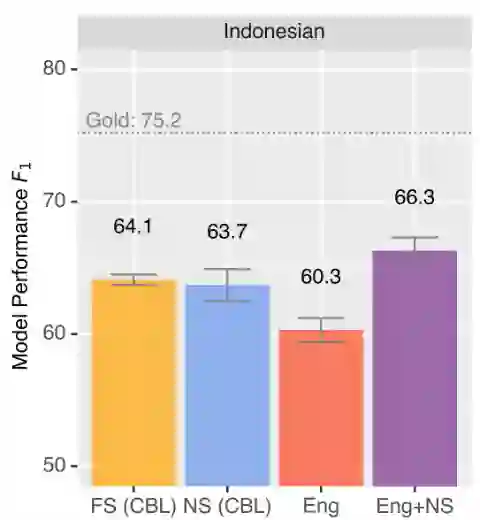

In low-resource natural language processing (NLP), the key problems are a lack of target language training data, and a lack of native speakers to create it. Cross-lingual methods have had notable success in addressing these concerns, but in certain common circumstances, such as insufficient pre-training corpora or languages far from the source language, their performance suffers. In this work we propose a complementary approach to building low-resource Named Entity Recognition (NER) models using ``non-speaker'' (NS) annotations, provided by annotators with no prior experience in the target language. We recruit 30 participants in a carefully controlled annotation experiment with Indonesian, Russian, and Hindi. We show that use of NS annotators produces results that are consistently on par or better than cross-lingual methods built on modern contextual representations, and have the potential to outperform with additional effort. We conclude with observations of common annotation patterns and recommended implementation practices, and motivate how NS annotations can be used in addition to prior methods for improved performance. For more details, http://cogcomp.org/page/publication_view/941

翻译:在低资源自然语言处理(NLP)中,关键问题是缺乏目标语言培训数据,以及缺乏当地语言使用者来创建这种数据。跨语言方法在解决这些问题上取得了显著的成功,但在某些共同的情况下,例如培训前社团或远离原始语言的语言不够充分,其业绩受到影响。在这项工作中,我们建议采用补充方法,用“non-speaker''(NS)”说明来建立低资源命名实体识别模型,由在目标语言方面没有经验的注解员提供。我们征聘了30名参与者,与印度尼西亚、俄罗斯和印地语进行了仔细控制的注解试验。我们表明,使用NS说明者所产生的结果始终处于同等水平或优于建立在现代背景表述上的跨语言方法,并且有可能超越额外的努力。我们最后对通用注解模式和建议实施做法进行了观察,并激励除了先前改进绩效的方法之外如何使用NS说明。关于改进绩效的方法的更多细节,见http://cogcomp.org/page/publication_view/941。