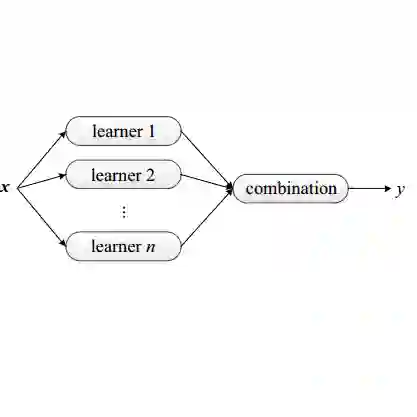

Ensemble learning is traditionally justified as a variance-reduction strategy, which explains its strong performance with unstable predictors such as decision trees. This explanation, however, does not account for ensembles constructed from intrinsically stable estimators, including smoothing splines, kernel ridge regression, Gaussian process regression, and other regularized reproducing kernel Hilbert space (RKHS) methods, whose variance is already tightly controlled by regularization and spectral shrinkage. This paper develops a general weighting theory for ensemble learning that moves beyond classical variance-reduction arguments. We formalize ensembles as linear operators acting on a hypothesis space and endow the space of weighting sequences with geometric and spectral constraints. Within this framework, we derive a refined bias-variance-approximation decomposition showing how non-uniform, structured weights can outperform uniform averaging by reshaping approximation geometry and redistributing spectral complexity, even when variance reduction is negligible. Our main results give conditions under which structured weighting provably dominates uniform ensembles, and show that optimal weights arise as solutions to constrained quadratic programs. Classical averaging, stacking, and recently proposed Fibonacci-based ensembles appear as special cases of this unified theory, which further accommodates geometric, sub-exponential, and heavy-tailed weighting laws. Overall, the work establishes a principled foundation for structure-driven ensemble learning, explaining why ensembles remain effective for smooth, low-variance base learners and setting the stage for the distribution-adaptive and dynamically evolving weighting schemes developed in subsequent work.
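
As a rough illustration of the weighting schemes named above, the following sketch compares uniform, geometric, Fibonacci-proportional, and quadratic-program weights on an ensemble of kernel ridge regressors. Everything in it is an assumption made for illustration rather than the paper's construction: the toy data, the bandwidth grid, the ordering of base learners, the choice to normalize Fibonacci numbers into weights, and the simplex constraint (non-negative weights summing to one) on the quadratic program, which is solved here by projected gradient descent.

```python
import numpy as np

def rbf_kernel(a, b, bandwidth):
    # Gaussian kernel matrix between two 1-D sample arrays.
    d2 = (a[:, None] - b[None, :]) ** 2
    return np.exp(-d2 / (2.0 * bandwidth ** 2))

def krr_predict(x_train, y_train, x_test, bandwidth, lam=1e-3):
    # Kernel ridge regression: a stable, regularized RKHS base learner.
    n = len(x_train)
    K = rbf_kernel(x_train, x_train, bandwidth)
    alpha = np.linalg.solve(K + lam * n * np.eye(n), y_train)
    return rbf_kernel(x_test, x_train, bandwidth) @ alpha

def normalized(v):
    v = np.asarray(v, dtype=float)
    return v / v.sum()

def fibonacci_weights(m):
    # Hypothetical scheme: weights proportional to the first m Fibonacci numbers.
    fib = [1.0, 1.0]
    while len(fib) < m:
        fib.append(fib[-1] + fib[-2])
    return normalized(fib[:m])

def geometric_weights(m, ratio=0.5):
    # Weights proportional to ratio**k for k = 0, ..., m-1.
    return normalized(ratio ** np.arange(m))

def project_simplex(v):
    # Euclidean projection onto {w : w >= 0, sum(w) = 1} (sort-based method).
    u = np.sort(v)[::-1]
    css = np.cumsum(u)
    k = np.arange(1, len(v) + 1)
    rho = k[u - (css - 1.0) / k > 0][-1]
    tau = (css[rho - 1] - 1.0) / rho
    return np.maximum(v - tau, 0.0)

def qp_weights(P, y, iters=5000):
    # Minimize mean((P @ w - y)**2) over the simplex by projected gradient descent.
    n, m = P.shape
    G = P.T @ P / n
    c = P.T @ y / n
    step = 1.0 / (2.0 * np.linalg.eigvalsh(G)[-1])  # 1 / Lipschitz constant
    w = np.full(m, 1.0 / m)                          # start from uniform averaging
    for _ in range(iters):
        w = project_simplex(w - step * 2.0 * (G @ w - c))
    return w

def mse(P, y, w):
    return float(np.mean((P @ w - y) ** 2))

# Toy regression problem (assumed for illustration only).
rng = np.random.default_rng(0)
target = lambda x: np.sin(2 * np.pi * x) + 0.5 * np.sin(6 * np.pi * x)
x_tr = rng.uniform(0.0, 1.0, 80)
y_tr = target(x_tr) + 0.1 * rng.normal(size=80)
x_va = rng.uniform(0.0, 1.0, 200)
y_va = target(x_va) + 0.1 * rng.normal(size=200)

# One base learner per bandwidth; columns of P are validation predictions.
bandwidths = [0.02, 0.05, 0.1, 0.2, 0.4]
P = np.column_stack([krr_predict(x_tr, y_tr, x_va, h) for h in bandwidths])
M = P.shape[1]

schemes = {
    "uniform": np.full(M, 1.0 / M),
    "geometric": geometric_weights(M),
    "fibonacci": fibonacci_weights(M),
    "qp": qp_weights(P, y_va),
}
results = {name: mse(P, y_va, w) for name, w in schemes.items()}
for name, err in results.items():
    print(f"{name:>10s}  validation MSE = {err:.5f}")
```

Because uniform averaging is a feasible point of the simplex-constrained program and is used as the starting iterate, the quadratic-program weights should never do worse than uniform weights on the data used to fit them. Whether the fixed geometric or Fibonacci-proportional laws help depends entirely on how the base learners are ordered, which this sketch picks arbitrarily. Fitting and scoring the weights on the same validation set is a simplification; a held-out split would be needed for an honest comparison.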
