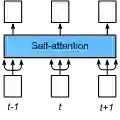

Recent advances in video generation have shifted towards unified, transformer-based foundation models that can handle multiple conditional inputs in context. However, these models have focused primarily on modalities such as text, images, and depth maps, while strictly time-synchronous signals such as audio remain underexplored. This paper introduces In-Context Audio Control of video diffusion transformers (ICAC), a framework that investigates how to integrate audio signals for speech-driven video generation within a unified full-attention architecture akin to FullDiT. We systematically explore three mechanisms for injecting audio conditions: standard cross-attention, 2D self-attention, and unified 3D self-attention. Our findings show that although 3D attention has the greatest potential for capturing spatio-temporal audio-visual correlations, it is significantly harder to train. To address this, we propose a Masked 3D Attention mechanism that constrains the attention pattern to enforce temporal alignment, enabling stable training and superior performance. Our experiments show that this approach, conditioned on an audio stream and reference images, achieves strong lip synchronization and video quality.
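The abstract does not specify the exact mask layout used by Masked 3D Attention. As a minimal sketch only, assuming that video latents are laid out frame by frame, that audio features are resampled to a fixed number of tokens per latent frame, and that same-modality attention stays unrestricted, a temporally aligned mask for joint self-attention over the concatenated `[video; audio]` sequence might look like the following. The function names, the `window` parameter, and the token layout are hypothetical illustrations, not ICAC's implementation.

```python
import numpy as np


def masked_3d_attention_mask(num_frames, tokens_per_frame, audio_per_frame, window=0):
    """Boolean mask (True = may attend) over the joint sequence [video; audio].

    Hypothetical layout: video tokens are frame-major (num_frames * tokens_per_frame),
    followed by audio tokens (num_frames * audio_per_frame), one group per frame.
    """
    n_vid = num_frames * tokens_per_frame
    n_aud = num_frames * audio_per_frame
    # Frame index of every token in the joint sequence.
    frame_ids = np.concatenate([
        np.repeat(np.arange(num_frames), tokens_per_frame),
        np.repeat(np.arange(num_frames), audio_per_frame),
    ])
    is_audio = np.concatenate([np.zeros(n_vid, dtype=bool), np.ones(n_aud, dtype=bool)])

    # True where query and key frames are at most `window` frames apart.
    close = np.abs(frame_ids[:, None] - frame_ids[None, :]) <= window
    # Video<->audio pairs are restricted to temporally close frames (assumption);
    # video<->video and audio<->audio pairs keep full attention.
    cross_modal = is_audio[:, None] != is_audio[None, :]
    return np.where(cross_modal, close, True)


def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention with a boolean mask."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)  # blocked pairs get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


# Usage: 4 frames, 3 video tokens and 2 audio tokens per frame, 8-dim features.
rng = np.random.default_rng(0)
mask = masked_3d_attention_mask(num_frames=4, tokens_per_frame=3, audio_per_frame=2)
x = rng.standard_normal((mask.shape[0], 8))
out = masked_attention(x, x, x, mask)
print(mask.shape, out.shape)
```

Because every token can always attend to tokens of its own modality, no row of the mask is fully blocked, so the softmax stays well defined. Widening `window` relaxes the alignment constraint, and an unrestricted mask (all True) recovers plain 3D full attention, which is the variant the abstract reports as hard to train.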

翻译:近期视频生成领域的研究趋势正转向统一的、基于Transformer的基础模型,这些模型能够在上下文中处理多种条件输入。然而,这些模型主要集中于文本、图像和深度图等模态,而严格时间同步的信号(如音频)尚未得到充分探索。本文提出了视频扩散Transformer的上下文音频控制框架,该框架在一个统一的全注意力架构(类似于FullDiT)中研究音频信号在语音驱动视频生成中的集成。我们系统地探索了三种不同的音频条件注入机制:标准交叉注意力、2D自注意力以及统一的3D自注意力。研究发现,虽然3D注意力在捕捉时空视听相关性方面具有最高潜力,但其训练面临显著挑战。为克服此问题,我们提出了一种掩码3D注意力机制,通过约束注意力模式以强制时间对齐,从而实现稳定训练和更优性能。实验表明,该方法在给定音频流和参考图像条件下,能够实现出色的唇部同步效果与视频质量。