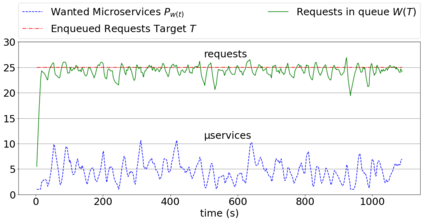

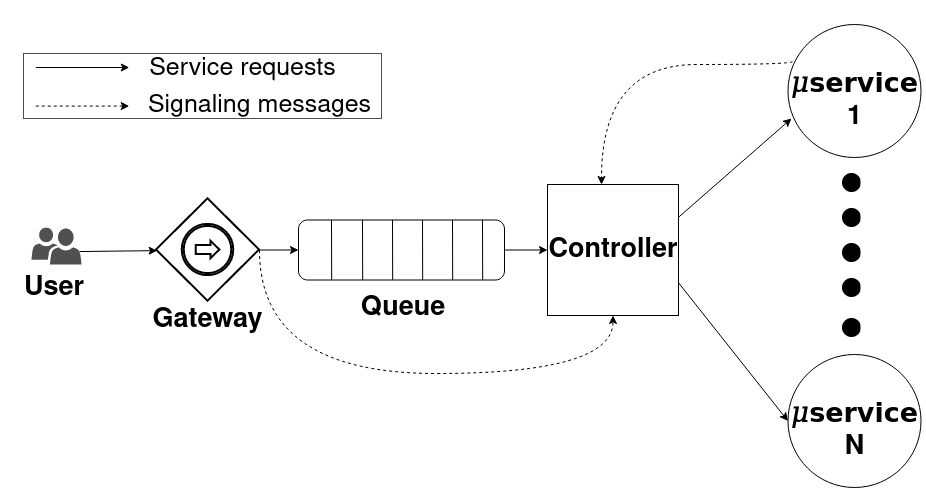

Cloud Computing (CC) is the most prevalent paradigm under which services are provided over the Internet. The feature most relevant to its success is its ability to promptly scale services according to user demand. When scaling, the main objective is to maximize service performance as much as possible. Moreover, resources in the Cloud are usually so abundant that they can be assumed to be infinite from the service's point of view: an application provider can have as many servers as it wants, as long as it pays for them. This model has some limitations. First, energy efficiency is not among the primary criteria for scaling decisions, which has raised concerns about the environmental impact of today's unrestrained computation in the Cloud. Second, the model is not viable for Edge Computing (EC), a paradigm in which computational resources are distributed up to the very edge of the network, i.e., co-located with base stations or access points. In edge nodes, resources are limited, which calls for more parsimonious scaling strategies. In this work, we design a scaling strategy that parsimoniously instantiates just enough microservices to guarantee a given Quality of Service (QoS) target. We implement this strategy in a Kubernetes/Docker environment. The strategy is based on a simple Proportional-Integral-Derivative (PID) controller. In this paper, we describe the system design and present a preliminary performance evaluation.
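The abstract does not give the controller equations, gains, or the exact QoS metric. As a rough illustration only, the sketch below shows how a textbook PID controller could map a QoS measurement to a replica count that is clamped to an edge node's capacity. It assumes the QoS metric is a response time compared against a latency target. The class name `PIDScaler`, the gains, and the replica bounds are hypothetical and not taken from the paper. In a Kubernetes deployment, the resulting count could then be applied by scaling the microservice's Deployment.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PIDScaler:
    """Hypothetical PID replica controller; an illustration, not the paper's implementation."""
    kp: float               # proportional gain (assumed, supplied by the caller)
    ki: float               # integral gain (assumed)
    kd: float               # derivative gain (assumed)
    target: float           # QoS target, assumed here to be a latency in ms
    min_replicas: int = 1   # keep at least one instance running
    max_replicas: int = 10  # assumed capacity bound of the edge node
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def step(self, measured: float, dt: float = 1.0) -> int:
        """Return the replica count for one control period of length dt."""
        # Positive error: the QoS target is violated (latency above target).
        error = measured - self.target
        self._integral += error * dt
        # No derivative contribution on the very first sample.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        # Positional PID: the control output is an offset above the minimum replica count.
        u = self.kp * error + self.ki * self._integral + self.kd * derivative
        desired = round(self.min_replicas + u)
        # Clamp to the resources actually available on the node.
        return max(self.min_replicas, min(self.max_replicas, desired))


if __name__ == "__main__":
    scaler = PIDScaler(kp=0.05, ki=0.01, kd=0.02, target=100.0)
    for latency in [220.0, 180.0, 130.0, 105.0, 95.0]:  # synthetic samples for illustration
        print(latency, scaler.step(latency))
```

This plain form lets the integral term keep growing while the output is clamped (integral windup). A practical controller would typically add an anti-windup mechanism, and the gains would need tuning against the real workload.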
