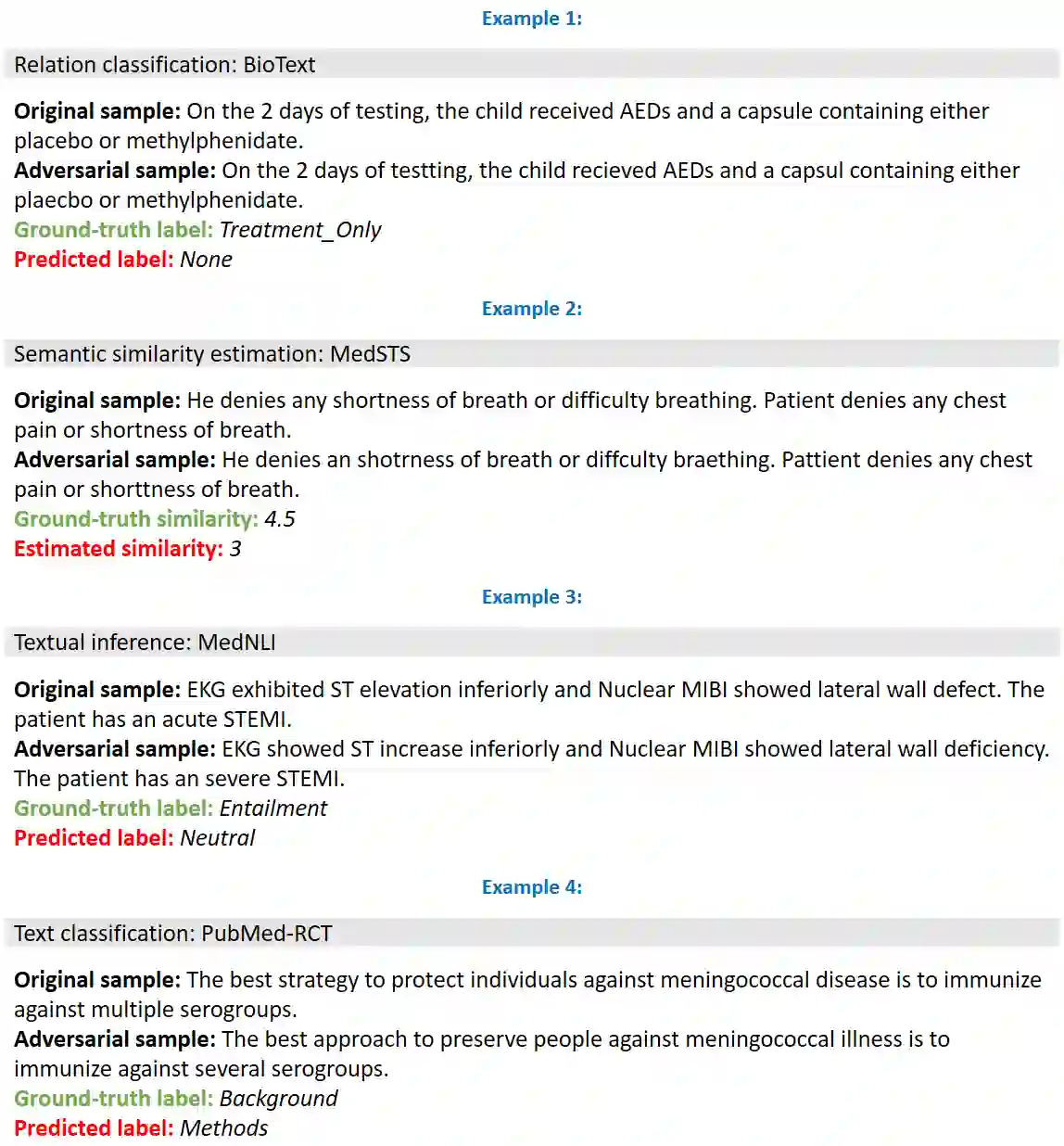

Deep transformer neural network models have improved the predictive accuracy of intelligent text processing systems in the biomedical domain. They have obtained state-of-the-art performance scores on a wide variety of biomedical and clinical Natural Language Processing (NLP) benchmarks. However, the robustness and reliability of these models have been less explored so far. Neural NLP models can be easily fooled by adversarial samples, i.e. minor changes to the input that preserve the meaning and understandability of the text but force the NLP system to make erroneous decisions. This raises serious concerns about the security and trustworthiness of biomedical NLP systems, especially when they are intended to be deployed in real-world use cases. We investigated the robustness of several transformer neural language models, i.e. BioBERT, SciBERT, BioMed-RoBERTa, and Bio-ClinicalBERT, on a wide range of biomedical and clinical text processing tasks. We implemented various adversarial attack methods to test the NLP systems in different attack scenarios. Experimental results showed that the biomedical NLP models are sensitive to adversarial samples; on average, their performance dropped by 21 and 18.9 absolute percent under character-level and word-level adversarial noise, respectively. In extensive adversarial training experiments, we fine-tuned the NLP models on a mixture of clean samples and adversarial inputs. Results showed that adversarial training is an effective defense mechanism against adversarial noise; the models' robustness improved on average by 11.3 absolute percent. In addition, the models' performance on clean data increased on average by 2.4 absolute percent, demonstrating that adversarial training can boost the generalization abilities of biomedical NLP systems.
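To make the two ideas in the abstract concrete, the following is a minimal sketch of character-level noise and of mixing clean and perturbed samples for fine-tuning. It is not the authors' attack or training code: the function names (`perturb_word`, `perturb_text`, `mix_training_set`), the adjacent-character swap, and the `rate` parameter are illustrative assumptions. Real adversarial attacks typically search for perturbations that change the model's prediction, whereas this sketch only applies random noise.

```python
import random


def perturb_word(word: str, rng: random.Random) -> str:
    """Swap two adjacent interior characters; first and last stay fixed."""
    if len(word) < 4:
        return word  # too short to perturb without touching the edges
    i = rng.randrange(1, len(word) - 2)  # left index of the swapped pair
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def perturb_text(text: str, rate: float, seed: int = 0) -> str:
    """Perturb each whitespace-separated word with probability `rate`."""
    rng = random.Random(seed)
    words = text.split()
    return " ".join(
        perturb_word(w, rng) if rng.random() < rate else w for w in words
    )


def mix_training_set(clean, rate: float = 0.3, seed: int = 0):
    """Return the clean (text, label) pairs plus one noisy copy of each.

    Labels are kept unchanged, on the assumption that the noise
    preserves the meaning of the text.
    """
    noisy = [
        (perturb_text(text, rate, seed + k), label)
        for k, (text, label) in enumerate(clean)
    ]
    return list(clean) + noisy


if __name__ == "__main__":
    data = [("the patient denies chest pain", 0)]
    for text, label in mix_training_set(data, rate=1.0):
        print(label, text)
```

The mixed list would then be passed to whatever fine-tuning routine is in use; a model-guided attack would replace the random choice of swap position with a search over candidate perturbations.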
