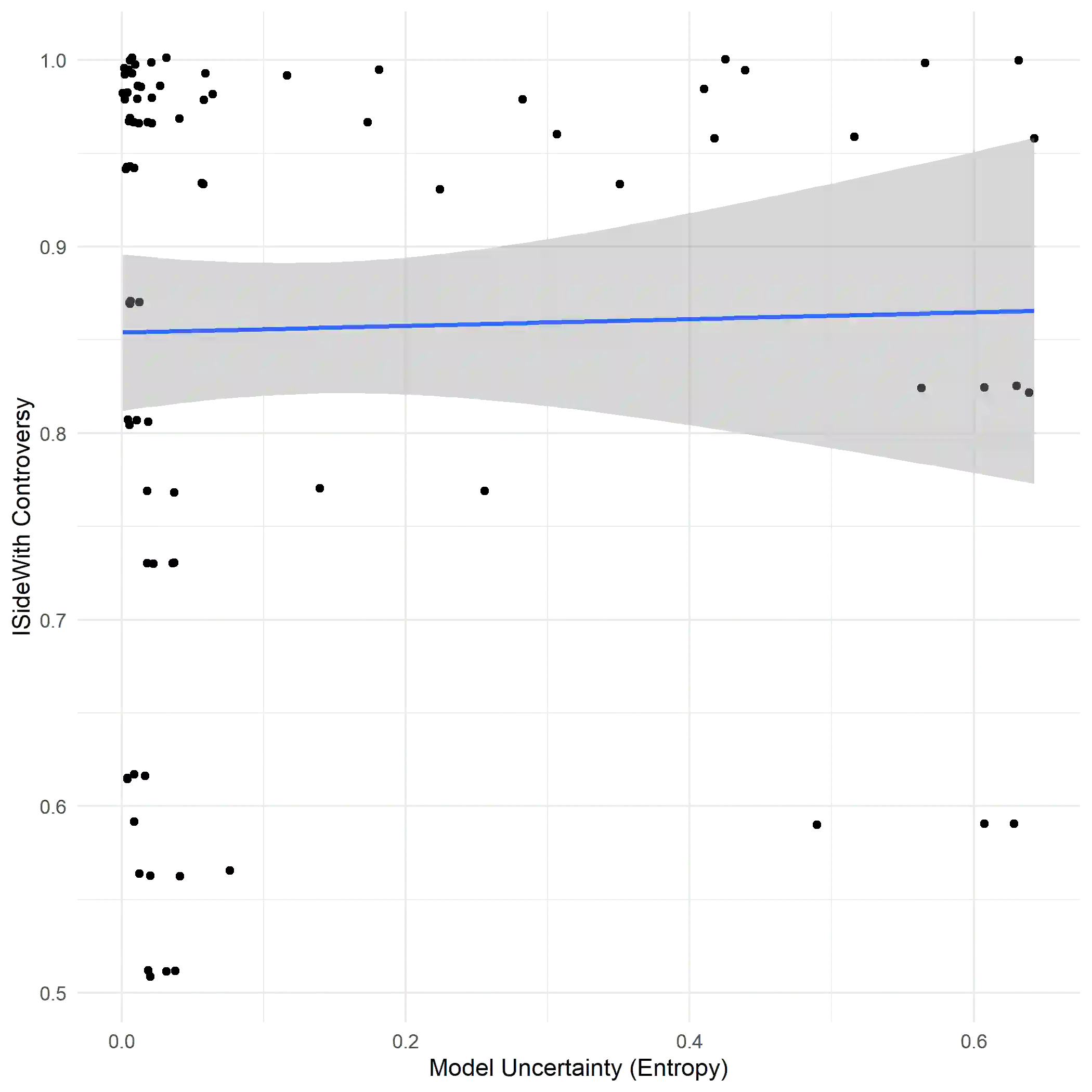

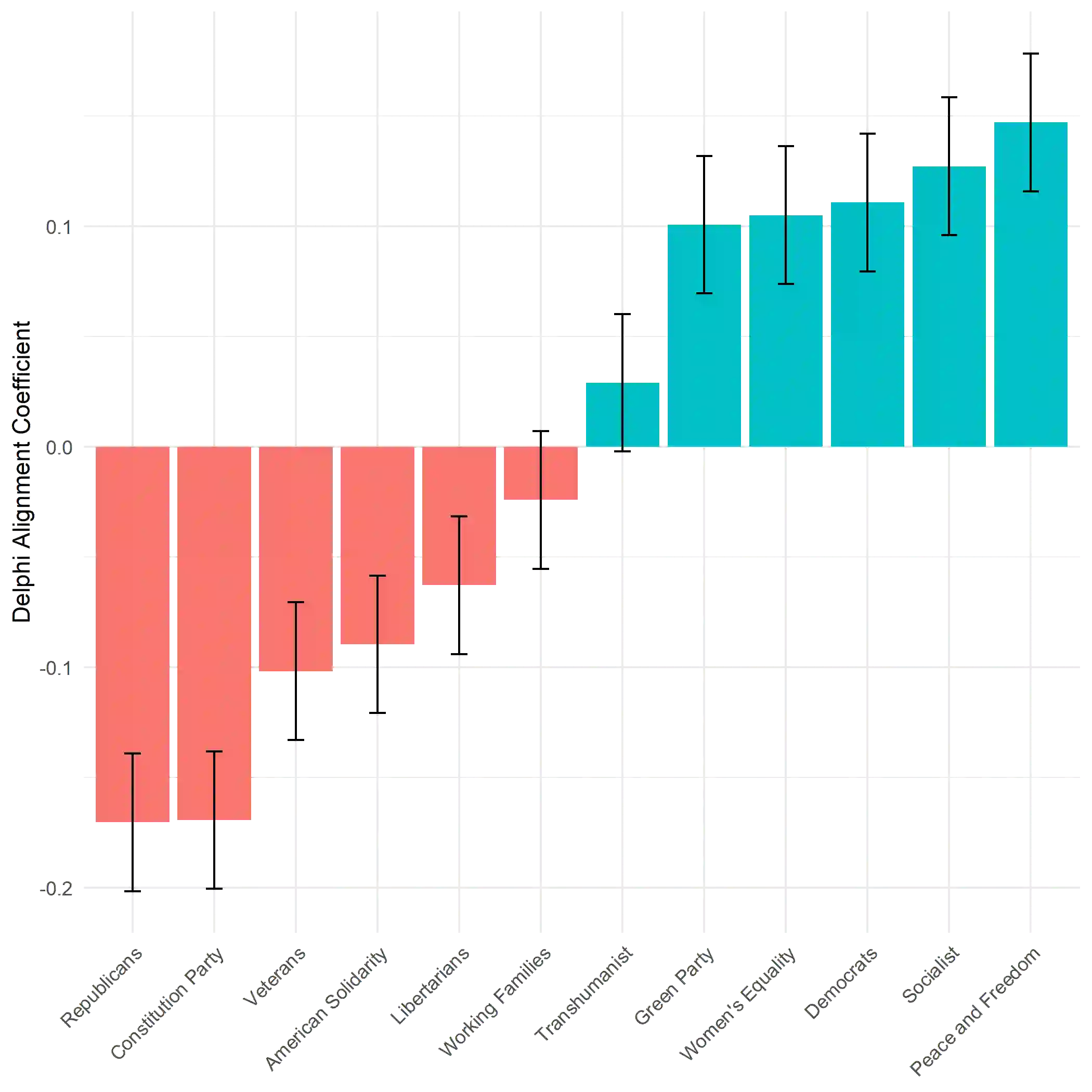

As generative language models are deployed in ever-wider contexts, concerns about their political values have come to the forefront, with critics from across the political spectrum arguing that the models are biased and lack neutrality. However, the question of what neutrality is, and whether it is desirable, remains underexplored. In this paper, I examine neutrality through an audit of Delphi [arXiv:2110.07574], a large language model designed for crowdsourced ethics. I analyse how Delphi's responses to politically controversial questions compare with those of different US political subgroups. I find that Delphi is poorly calibrated with respect to confidence and exhibits a significant political skew. Based on these results, I examine the question of neutrality through a data-feminist lens, in terms of how notions of neutrality shift power and further marginalise unheard voices. I hope these findings contribute to a more reflexive debate about the normative questions of alignment and the role we want generative models to play in society.
