7 Papers & Radios | A 1.6-Trillion-Parameter Language Model; IJCAI 2020 Awards Announced

机器之心 (Jiqizhixin) & ArXiv Weekly Radiostation

Contributors: Du Wei, Chu Hang, Luo Ruotian

This week's key papers include Switch Transformer, a language model with 1.6 trillion parameters proposed by Google Brain, and the award-winning papers from IJCAI 2020.

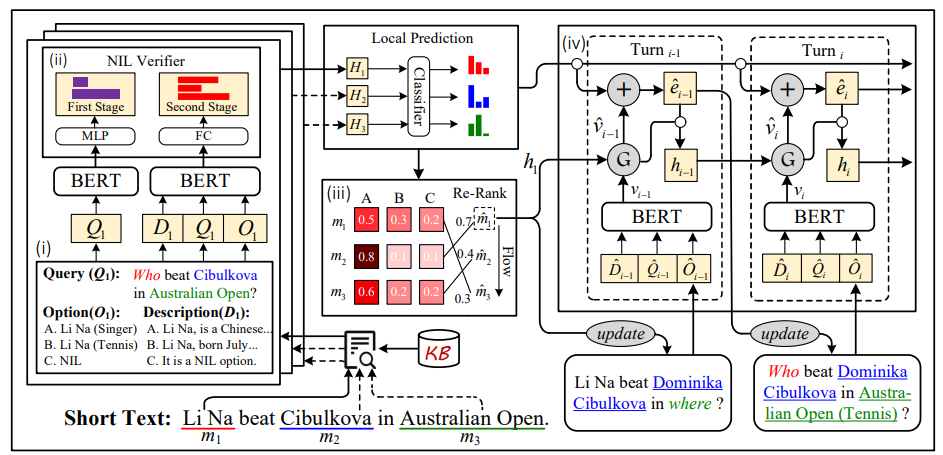

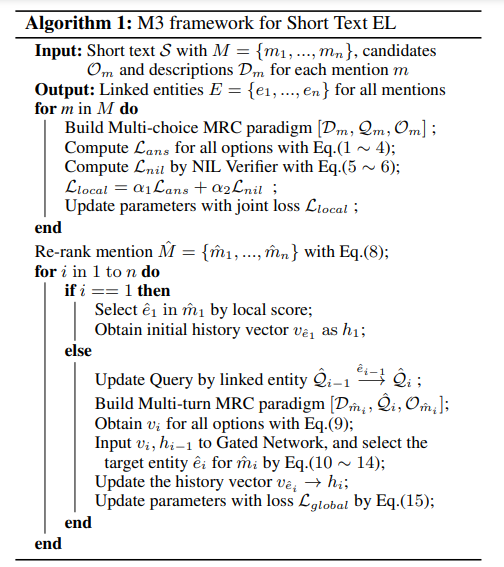

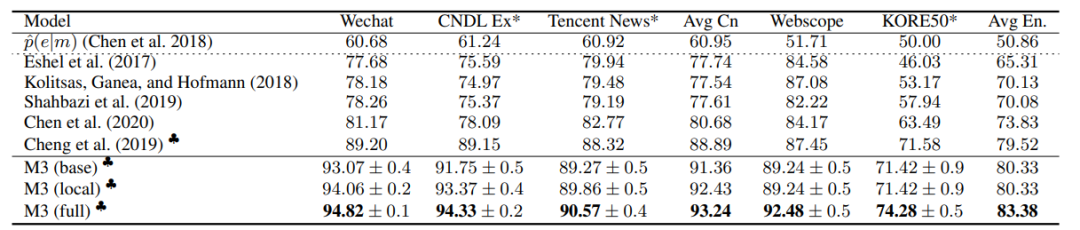

Read, Retrospect, Select: An MRC Framework to Short Text Entity Linking

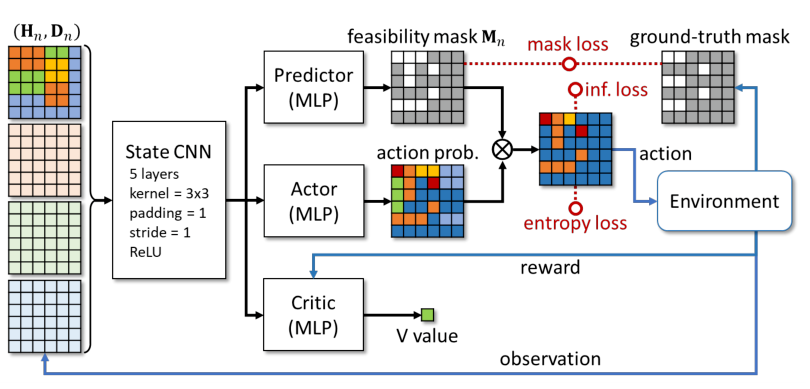

Online 3D Bin Packing with Constrained Deep Reinforcement Learning

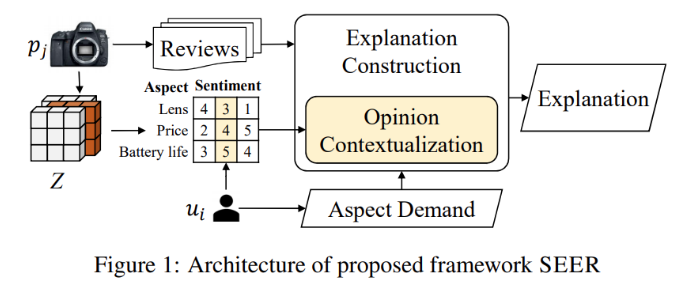

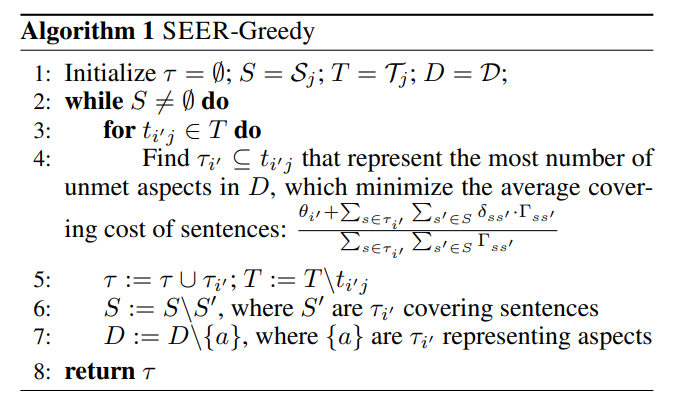

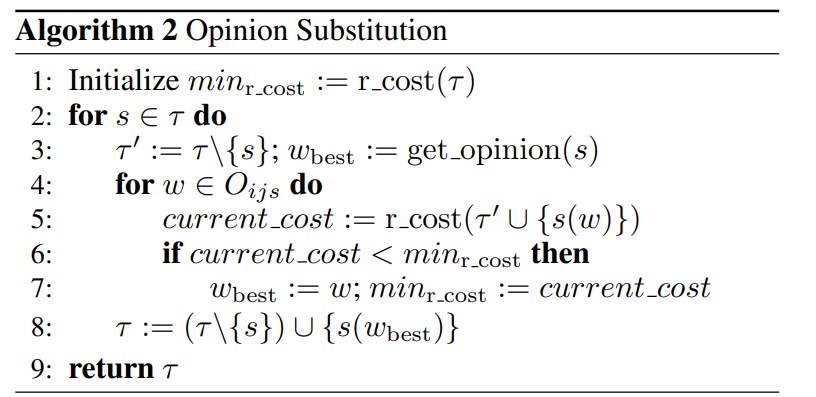

Synthesizing Aspect-Driven Recommendation Explanations from Reviews

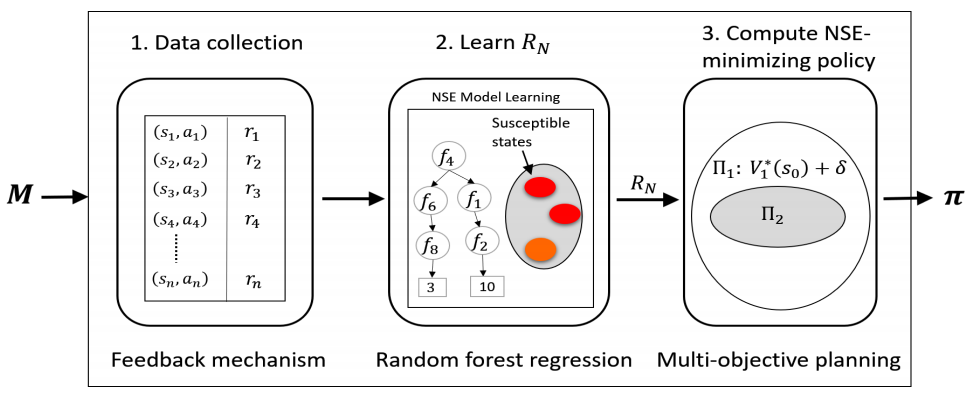

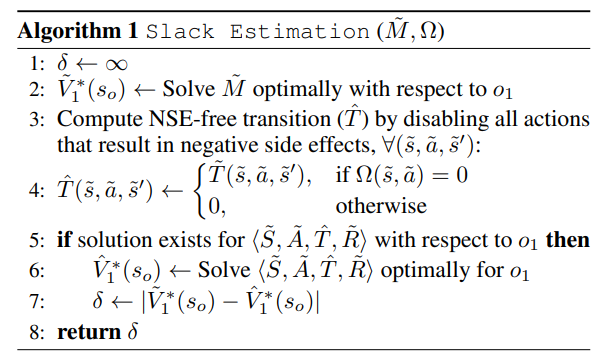

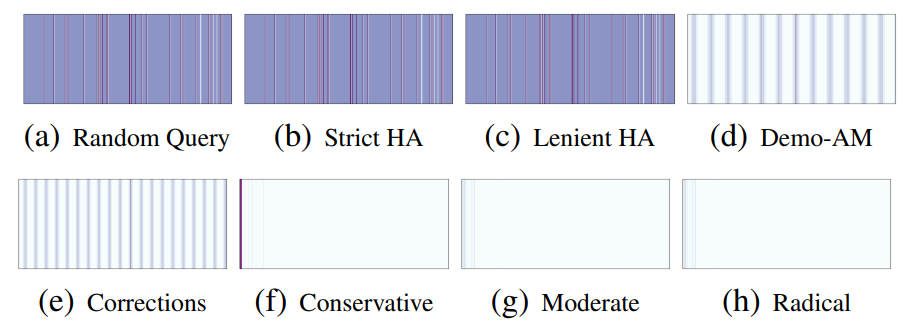

A Multi-Objective Approach to Mitigate Negative Side Effects

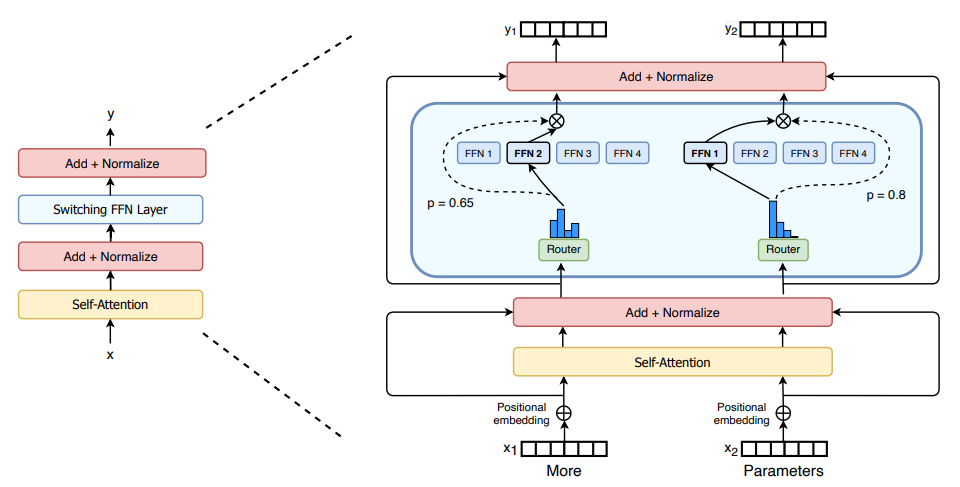

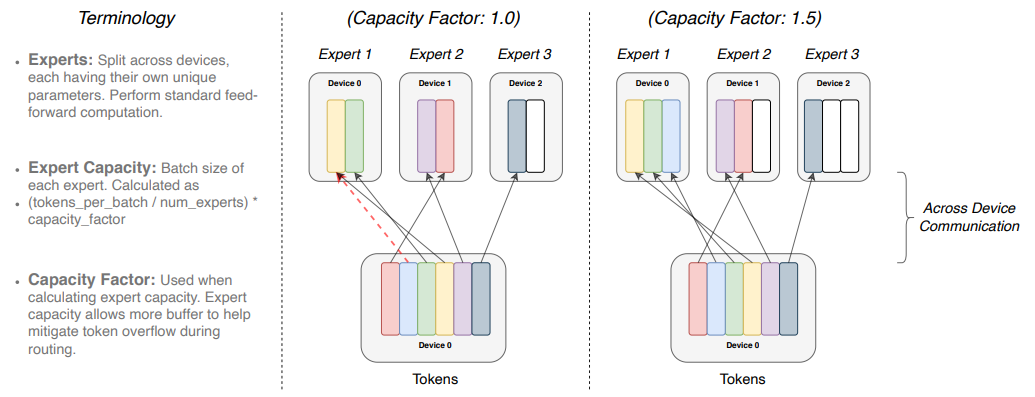

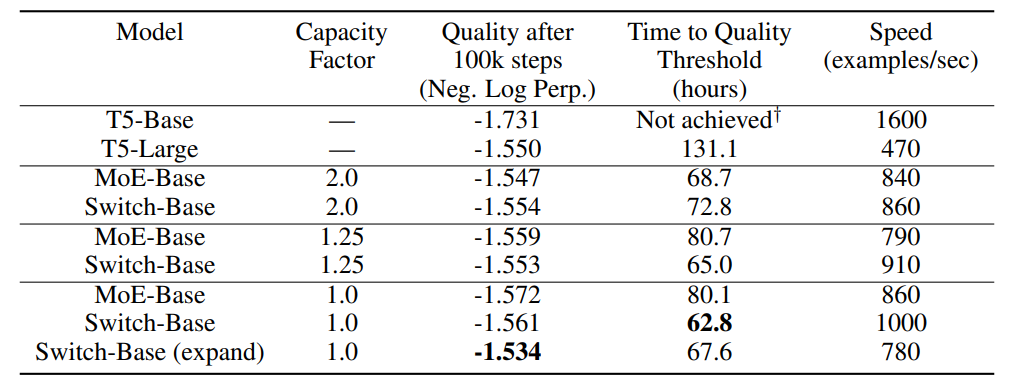

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

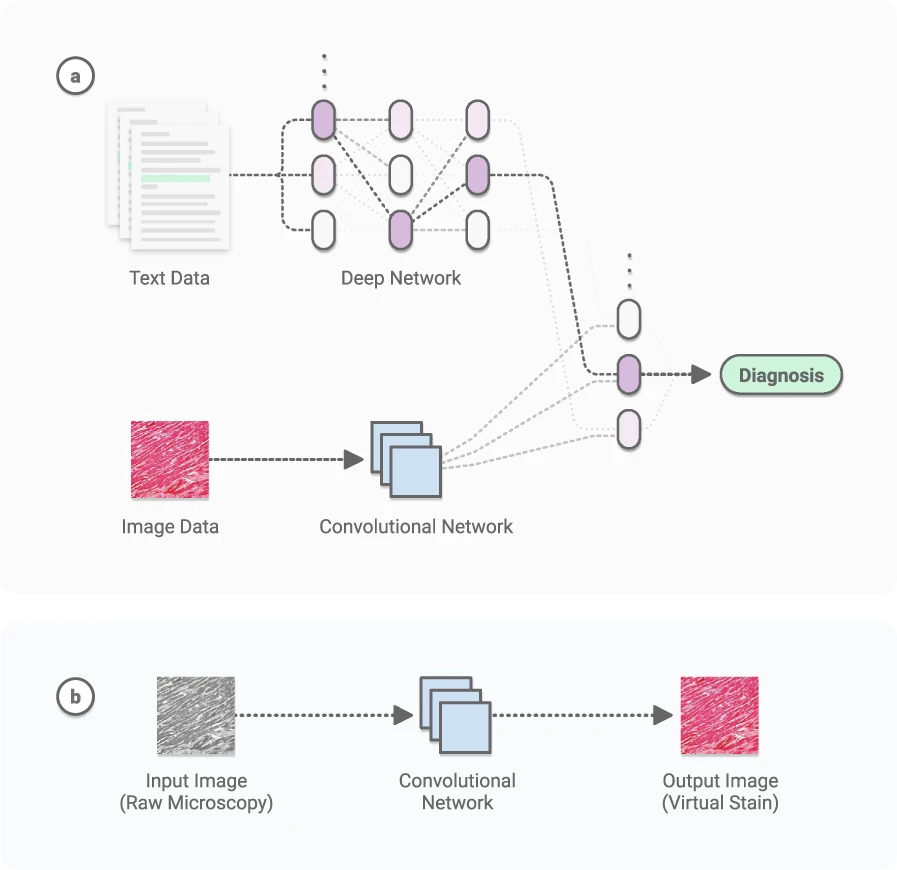

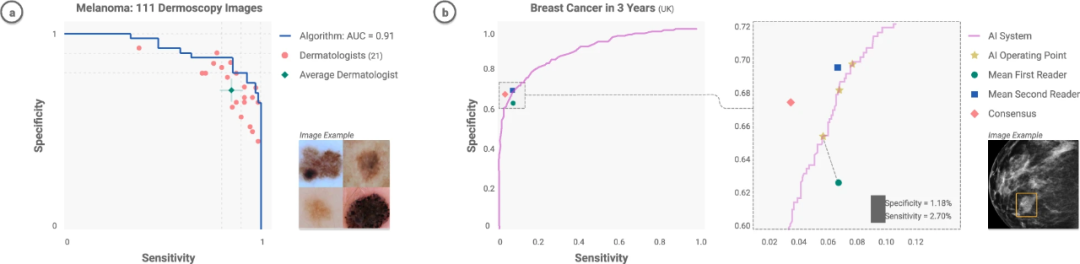

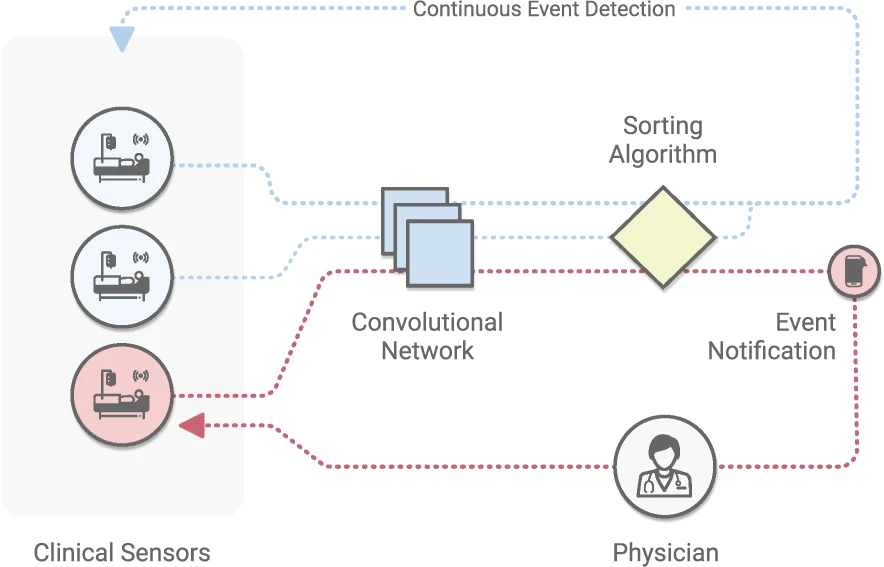

Deep learning-enabled medical computer vision

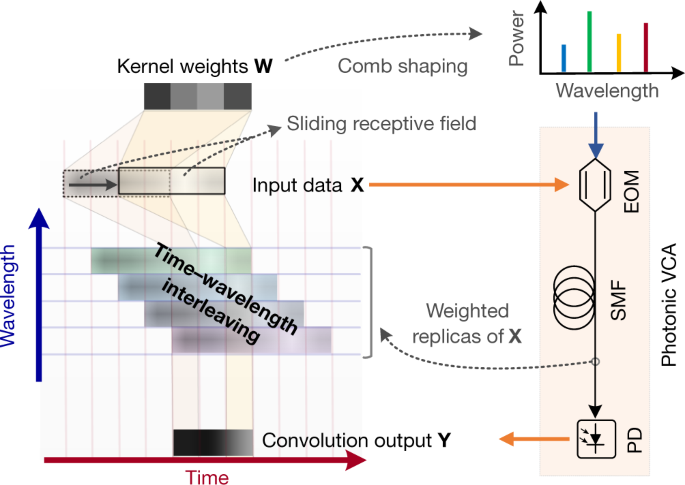

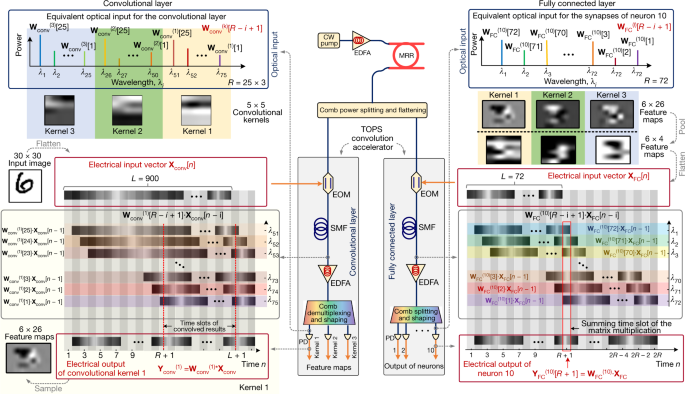

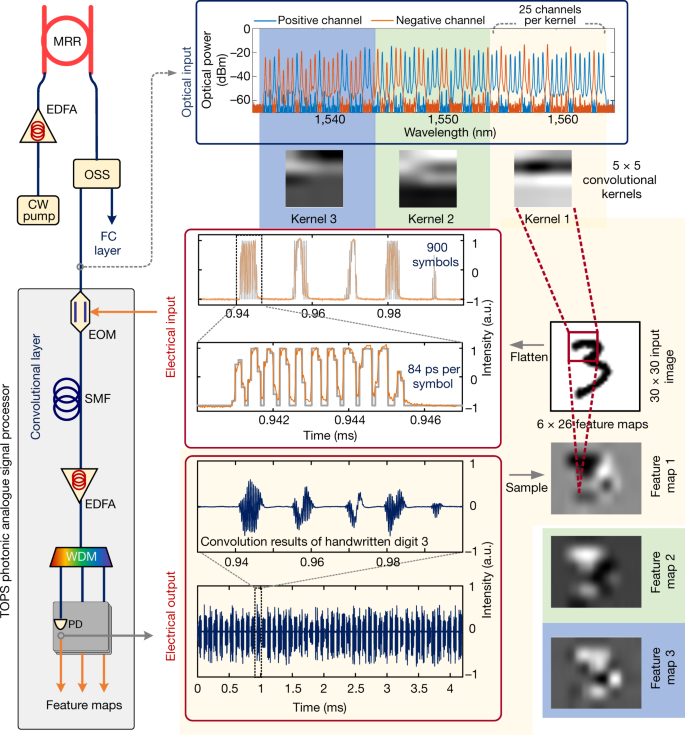

11 TOPS photonic convolutional accelerator for optical neural networks

ArXiv Weekly Radiostation: More Selected Papers in NLP, CV, and ML (with Audio)

Authors: Yingjie Gu, Xiaoye Qu, Zhefeng Wang, et al.

Paper link: https://arxiv.org/abs/2101.02394

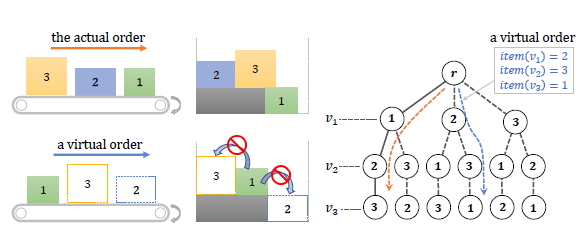

Authors: Hang Zhao, Qijin She, Chenyang Zhu, et al.

Paper link: https://arxiv.org/abs/2006.14978

Authors: Trung-Hoang Le and Hady W. Lauw

Paper link: https://www.ijcai.org/Proceedings/2020/0336.pdf

Authors: Sandhya Saisubramanian, Ece Kamar, Shlomo Zilberstein

Paper link: https://www.ijcai.org/Proceedings/2020/0050.pdf

Authors: William Fedus, Barret Zoph, Noam Shazeer

Paper link: https://arxiv.org/pdf/2101.03961.pdf

Authors: Andre Esteva, Katherine Chou, Serena Yeung, et al.

Paper link: https://www.nature.com/articles/s41746-020-00376-2#Sec6

Authors: Xingyuan Xu, Mengxi Tan, Bill Corcoran, et al.

Paper link: https://www.nature.com/articles/s41586-020-03063-0